
Advances in iOS Photography

People love to take pictures with iPhone. In fact, it's the most popular camera in the world, and photography apps empower this experience. Explore new AVFoundation Capture APIs which allow for the capture of Live Photos, RAW image data from the camera, and wide color photos.


Good morning.

Morning everyone and welcome to session 501.

I'm Brad Ford. I work on the Core Media and AV Foundation capture teams at Apple.

And this session is all about the iOS camera. Hopefully you've figured that out by now. This is the most popular camera in the world. And it's also about photography. If you develop a photography app, or are even just thinking about developing one, then this is a very good OS for you. I think you and iOS 10 are about to become fast friends.

Today we'll be focusing on the AV Foundation framework, which is our lowest-level and most powerful framework for accessing the camera. AV Foundation is broad and deep. If you're new to camera capture on iOS, I invite you to review our past WWDC camera presentation videos listed here.

They give you a good base for today's presentation and plus, you get to watch me age gracefully. Here's what we're going to do for the next 58 minutes.

I'll present a brand new AVCaptureOutput for capturing photographic content, and then we're going to focus on four feature areas. We're going to focus on Live Photos. You'll learn how to capture Live Photos in your app, just like Apple's camera app.

You'll learn how to capture bare RAW images and store them to DNG files, which is a first for our platform in iOS.

You'll learn about how to get preview or thumbnail images along with your regular photo captures for a more responsive UI.

And lastly, you'll learn how to capture gorgeous, vivid images in wide color. Let's get started.

Here's a quick refresher on how AV Foundation's capture classes work. At the center of our capture universe is the AVCaptureSession. This is the object you tell to start or stop running.

In order to do anything useful, though, it needs some inputs, such as a camera or a microphone, which provide data to the session. And it also needs outputs to receive the data, such as an AVCaptureStillImageOutput, which captures still images, or an AVCaptureMovieFileOutput, which records QuickTime movies.

There are also connections, represented in the API as AVCaptureConnections, which link inputs to outputs. That's our overall object graph, and you've seen how it all fits together.
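In Swift, that object graph can be sketched roughly as follows. This is a minimal sketch, assuming the Swift 4+ spellings of the AVFoundation API (for example `AVCaptureSession.Preset.photo`); `makePhotoSession` and `CaptureSetupError` are hypothetical names. Connections aren't created by hand here: adding a compatible input and output to the session forms them implicitly.

```swift
import AVFoundation

/// Hypothetical error type for this sketch.
enum CaptureSetupError: Error {
    case noCamera, cannotAddInput, cannotAddOutput
}

/// Builds session -> camera input -> photo output. The session creates the
/// AVCaptureConnection between them when both are added.
func makePhotoSession() throws -> (AVCaptureSession, AVCapturePhotoOutput) {
    let session = AVCaptureSession()
    session.beginConfiguration()
    defer { session.commitConfiguration() }  // commit even if we throw

    // The photo preset is the one Live Photos and RAW capture need later on.
    session.sessionPreset = .photo

    guard let camera = AVCaptureDevice.default(for: .video) else {
        throw CaptureSetupError.noCamera
    }
    let input = try AVCaptureDeviceInput(device: camera)
    guard session.canAddInput(input) else { throw CaptureSetupError.cannotAddInput }
    session.addInput(input)

    let photoOutput = AVCapturePhotoOutput()
    guard session.canAddOutput(photoOutput) else { throw CaptureSetupError.cannotAddOutput }
    session.addOutput(photoOutput)

    return (session, photoOutput)
}
```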

All of the features I just mentioned relate to taking still images, so you might expect that we'd be spending a lot of time in AVCaptureStillImageOutput today. But you'd be wrong.

Today we're introducing a brand new capture output in iOS 10. It's called AVCapturePhotoOutput, emphasizing the fact that our photos are much more than static still images now.

AVCapturePhotoOutput addresses AVCaptureStillImageOutput's design challenges in four main areas.

It features a functional programming model. There are clear delineations between mutable and immutable data.

We've encapsulated photo settings into a distinct object unto itself.

And the PhotoOutput can track your photo's progress from request to completion through a delegate-style interface of callbacks. And lastly, it resolves your indeterminate photo settings early in the capture process, so you know what you're going to be getting. Let's talk a little bit more about that last feature there.

Here's what an AVCapturePhotoOutput looks like. Even with all its new features, it's a very thin interface, smaller even than AVCaptureStillImageOutput. It has a small set of read-only properties that tell you whether particular features are supported, such as isLivePhotoCaptureSupported. It has an even smaller set of writable properties that let you opt in to particular features when they're supported. Some capture features affect how the capture render pipeline is built, so you have to specify them up front. One such feature is high-resolution capture. If you ever intend to capture high-resolution photos, such as five-megapixel selfies on the iPhone 6s, you have to opt in by setting isHighResolutionCaptureEnabled before calling startRunning on the AVCaptureSession.
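A minimal sketch of that opt-in order, assuming `photoOutput` has already been added to a session that isn't running yet; `configureBeforeRunning` is a hypothetical helper name. Each writable opt-in is guarded by its read-only "supported" counterpart where one exists, because enabling an unsupported feature raises an exception.

```swift
import AVFoundation

/// Opts in to features that change how the render pipeline is built.
/// Call before session.startRunning(); changing these on a running
/// session forces a disruptive reconfiguration.
func configureBeforeRunning(_ photoOutput: AVCapturePhotoOutput) {
    // Needed before any request sets isHighResolutionPhotoEnabled,
    // e.g. for 5 MP front-camera stills on iPhone 6s.
    photoOutput.isHighResolutionCaptureEnabled = true

    // Read-only support flag gates the writable opt-in.
    if photoOutput.isLivePhotoCaptureSupported {
        photoOutput.isLivePhotoCaptureEnabled = true
    }
}
```

Usage would be `configureBeforeRunning(photoOutput)` followed by `session.startRunning()`.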

Lastly, there's a single method that you can call to kick off a photo capture.

Just one verb. That's it.

Now you're probably asking yourself, well, what happened to all the per-photo state? How do I request flash? How do I get BGRA? How do I get still image stabilization? These features and others have moved to a new object called AVCapturePhotoSettings. This object contains all the settings that pertain to one single photo capture request. Think of it like the list of options to choose from when you're buying a Mac on the Apple online store. You fill out the online form with all the features that you want and then you hit the Place Order button. Placing the order is like calling capturePhoto, passing the AVCapturePhotoSettings as your first parameter.

Now when you place an order online, the store needs your email address to communicate with you about your order.

Within AVCapturePhotoOutput's world, the email address you provide is an object conforming to the AVCapturePhotoCaptureDelegate protocol.

This delegate gets called back as events related to your photo capture occur.

This object gets passed as your second parameter to capturePhoto.

Okay, so what's good about AVCapturePhotoSettings? First of all, they're atomic. All settings are encapsulated in a single object. There's no potential for settings getting out of sync, because they aren't properties of the AVCapturePhotoOutput but of a per-photo settings object.

They're unique.

Each photo settings instance has a uniqueID property. You're only allowed to use a photo settings object once and never again, so you'll receive exactly one set of results for each photo capture request.

After requesting a photo capture with a settings object, you can hold onto it and validate results against it as they're returned to you. Sort of like keeping a copy of your online order form.

So then, what's good about the photo delegates? Well, it's a single set of callbacks, again, per photo settings object.

The ordering is documented. You know exactly which callbacks you're going to get and at what time and in what order.

And it's a vehicle for resolving indeterminate settings. That one I think I need to explain a little bit more.

Let's say your app requests the photo right here on this timeline. You've specified photo settings with auto flash and auto still image stabilization. I shortened still image stabilization to SIS so it would fit on the slide better. You're telling the PhotoOutput I want you to use flash or SIS but only if you need to, only if they're appropriate for the scene.

So very soon after you make the request, the PhotoOutput calls your delegate's first callback, willBeginCaptureForResolvedSettings.

This callback is always, always, always called first. It's sort of like the courtesy email you get from Apple saying we've received your order. Here's what we'll be sending you.

The callback passes you an instance of a new object called AVCaptureResolvedPhotoSettings.

It's just like the photo settings you filled out, except now everything is resolved.

They have the same unique ID. Your unresolved version and resolved version share a unique ID, so you can match them up. It also tells you what features the photo output picked for you. Notice that in this case, flash has been resolved to on and SIS has been resolved to off, so clearly we're in a very low-light situation, such as this conference room. Next comes willCapturePhotoForResolvedSettings. It's delivered right when the photo is being taken, as the virtual camera shutter is closing and the shutter sound is being played. If you want to perform some sort of shutter animation, this is the appropriate time to do it.

And then shortly thereafter comes didCapturePhotoForResolvedSettings, just after the image has been fully exposed and read out, and the virtual shutter opens.

Then some time has to pass because the image is being processed, applying all the features that you asked for.

And when the photo is finally ready, you get the didFinishProcessingPhotoSampleBuffer callback, along with the image sample buffer you've been waiting for. So, yay. It's like getting the shiny new Mac on your doorstep.

And finally, you get the didFinishCaptureForResolvedSettings callback, which is guaranteed to be delivered last.

It's like the follow-up email that you get from Apple saying all your packages have been delivered. A pleasure doing business with you. Over and out.

This is a good time to clean up any of your per-photo storage.
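That timeline maps onto AVCapturePhotoCaptureDelegate methods. Here's a minimal sketch that just records the order in which they fire, assuming Swift 4+ method names and the iOS 10 sample-buffer variant of the processing callback (deprecated in iOS 11 in favor of one that delivers an AVCapturePhoto). `LoggingPhotoDelegate` and its `events` array are hypothetical.

```swift
import AVFoundation

/// Records each callback for one request. Expected order:
/// willBegin -> willCapture -> didCapture -> didFinishProcessing -> didFinishCapture.
final class LoggingPhotoDelegate: NSObject, AVCapturePhotoCaptureDelegate {
    private(set) var events: [String] = []

    func photoOutput(_ output: AVCapturePhotoOutput,
                     willBeginCaptureFor resolvedSettings: AVCaptureResolvedPhotoSettings) {
        // Always first; auto flash and auto SIS are now resolved to on/off.
        events.append("willBegin flash=\(resolvedSettings.isFlashEnabled) " +
                      "sis=\(resolvedSettings.isStillImageStabilizationEnabled)")
    }

    func photoOutput(_ output: AVCapturePhotoOutput,
                     willCapturePhotoFor resolvedSettings: AVCaptureResolvedPhotoSettings) {
        // Shutter is closing: a good moment for a shutter animation.
        events.append("willCapture")
    }

    func photoOutput(_ output: AVCapturePhotoOutput,
                     didCapturePhotoFor resolvedSettings: AVCaptureResolvedPhotoSettings) {
        events.append("didCapture")
    }

    func photoOutput(_ output: AVCapturePhotoOutput,
                     didFinishProcessingPhoto photoSampleBuffer: CMSampleBuffer?,
                     previewPhoto previewPhotoSampleBuffer: CMSampleBuffer?,
                     resolvedSettings: AVCaptureResolvedPhotoSettings,
                     bracketSettings: AVCaptureBracketedStillImageSettings?,
                     error: Error?) {
        // The image sample buffer (or an error) for this request.
        events.append(error == nil ? "didFinishProcessing" : "didFinishProcessing (error)")
    }

    func photoOutput(_ output: AVCapturePhotoOutput,
                     didFinishCaptureFor resolvedSettings: AVCaptureResolvedPhotoSettings,
                     error: Error?) {
        // Guaranteed last: release any per-photo storage here.
        events.append("didFinishCapture")
    }
}
```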

So let's talk about those delegates in specifics. The callbacks track a single photo capture request.

The photo output holds a weak reference to your delegate, so it will not keep that object alive for you. Remember to keep a strong reference to it in your code.

All the callbacks in this protocol are marked optional, but some of them become required at runtime, depending on your photo settings. For instance, when you're capturing a compressed or uncompressed photo, your delegate has to implement the one callback where you get the photo. Otherwise, we would have nowhere to deliver it.

The rules are clearly spelled out in the HeaderDoc comments in AVCapturePhotoOutput.h.

All callbacks pass an instance of that nice AVCaptureResolvedPhotoSettings object I told you about, so you always know what you're about to get or what you just got.
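Because the photo output holds its delegate only weakly, something in your app has to own it until the request completes. One way to do that, sketched here with a hypothetical `PhotoCaptureController`, is to keep each delegate in a dictionary keyed by the settings' uniqueID (which the resolved settings share) and drop it from the final callback. The sketch assumes all access happens on one queue; it does no locking of its own.

```swift
import AVFoundation

/// Keeps each in-flight delegate alive, keyed by the request's uniqueID.
final class PhotoCaptureController {
    private let photoOutput: AVCapturePhotoOutput
    private var inFlight: [Int64: AVCapturePhotoCaptureDelegate] = [:]

    init(photoOutput: AVCapturePhotoOutput) {
        self.photoOutput = photoOutput
    }

    func capture(with settings: AVCapturePhotoSettings,
                 delegate: AVCapturePhotoCaptureDelegate) {
        inFlight[settings.uniqueID] = delegate  // strong reference
        photoOutput.capturePhoto(with: settings, delegate: delegate)
    }

    /// Call from the delegate's didFinishCaptureFor callback.
    func finish(_ resolvedSettings: AVCaptureResolvedPhotoSettings) {
        // Resolved and unresolved settings carry the same uniqueID.
        inFlight[resolvedSettings.uniqueID] = nil
    }

    var inFlightCount: Int {
        return inFlight.count
    }
}
```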

So speaking of settings, let's look at some code showing how to initiate photo captures with various AVCapturePhotoSettings features.

Okay, the first one, takeHighResolutionPhoto, as I said before, the front facing camera on iPhone 6s supports five-megapixel high resolution selfies, but it can't stream at five megapixels. It can only do individual high res stills.

So first, the photo output itself has to be opted in for high-resolution capture with isHighResolutionCaptureEnabled. Then I create a photo settings object using the default constructor, AVCapturePhotoSettings().

By default, that constructor sets the output format to JPEG and opts you in for auto still image stabilization.

I then set isHighResolutionPhotoEnabled to true and call capturePhoto.

In the second example, takeFlashPhoto, notice that flashMode is now a property of the settings object. If you've worked with AVCaptureStillImageOutput in the past, you'll know that flash was a property of AVCaptureDevice, so you had to access two different objects in order to configure a single capture. Here, it's all part of one single atomic object. Nice.

The final sample here uses a more complex constructor of AVCapturePhotoSettings. This time we pass the output format that we want. In this case, we want an uncompressed BGRA format, so we make a dictionary of CVPixelBuffer attributes, pass it as the format parameter to AVCapturePhotoSettings, and we're good to go.
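The three requests just described might look roughly like this in Swift. These are hypothetical settings-building helpers, not the slide code itself. Each returns a fresh settings object, since a settings instance may only be used once, and the high-resolution one assumes the photo output was opted in with isHighResolutionCaptureEnabled before the session started.

```swift
import AVFoundation

/// Default constructor: JPEG output with auto still image stabilization.
func makeHighResolutionSettings() -> AVCapturePhotoSettings {
    let settings = AVCapturePhotoSettings()
    settings.isHighResolutionPhotoEnabled = true  // requires the output-level opt-in
    return settings
}

/// Flash now lives on the per-photo settings, not on AVCaptureDevice.
func makeFlashSettings() -> AVCapturePhotoSettings {
    let settings = AVCapturePhotoSettings()
    settings.flashMode = .auto  // or .on, if the output supports it
    return settings
}

/// Uncompressed 32-bit BGRA, requested via a CVPixelBuffer attributes dictionary.
func makeBGRASettings() -> AVCapturePhotoSettings {
    let format: [String: Any] =
        [kCVPixelBufferPixelFormatTypeKey as String: kCVPixelFormatType_32BGRA]
    return AVCapturePhotoSettings(format: format)
}
```

Each one is then handed straight to the output, for example `photoOutput.capturePhoto(with: makeFlashSettings(), delegate: delegate)`.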

Now when you call capturePhoto, AVCapturePhotoOutput will validate your settings and make sure you haven't asked for crazy stuff. It'll ensure self-consistency, and it'll ensure that the stuff that you've asked for is actually supported. And if it's not, it will throw an exception.
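Since an unsupported setting raises an exception rather than being silently ignored, it can help to check the output's supported values first. A minimal sketch with a hypothetical helper, `safeFlashMode`, that falls back to `.off` when the requested mode isn't among the supported ones (in practice, the output's `supportedFlashModes`).

```swift
import AVFoundation

/// Returns `requested` if it's supported, otherwise `.off`, so that
/// capturePhoto(with:delegate:) won't reject the settings over flash.
func safeFlashMode(_ requested: AVCaptureDevice.FlashMode,
                   supported: [AVCaptureDevice.FlashMode]) -> AVCaptureDevice.FlashMode {
    return supported.contains(requested) ? requested : .off
}
```

Usage: `settings.flashMode = safeFlashMode(.on, supported: photoOutput.supportedFlashModes)`.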

Resolved settings, as you might expect, are entirely immutable. All the properties are read-only; they're purely for your information. Again, this is the immutable part of the functional programming model.

It has a uniqueID that you can compare with the unresolved settings object you're holding onto. And here's a nice feature: it tells you the dimensions of the photo you're going to get before you get it, so you can plan, do some allocations, whatever you need to do.

It tells you whether it was resolved to flash on or off. And still image stabilization on or off.

It also supports bracketed capture. That's a specialized type of capture where you request a number of images, sometimes with differing exposure values. You might do this, for instance, if you wanted to fuse differently exposed images together to produce an effect such as HDR. I spoke at length about these kinds of captures in WWDC 2014 session 508.

Go check that video out for a refresher.

As with AVCaptureStillImageOutput, we support auto exposure brackets and custom exposure brackets.

But the new way to request a bracketed capture is to instantiate an AVCapturePhotoBracketSettings. So it's like the photo settings but it's a subclass, and it has the extra stuff that you would need for doing a bracketed capture.

When you create one of these, you specify an array of AVCaptureBracketedStillImageSettings. This is an existing class from the AVCaptureStillImageOutput days.

You specify one of these per exposure. For instance, -2EV, +2EV, 0EV.

Also, if you're on an iPhone 6 Plus or 6s Plus, you can optionally enable lens stabilization using the isLensStabilizationEnabled property. Recall the timeline I showed you earlier, where the photo was delivered to the didFinishProcessingPhotoSampleBuffer callback. When you request a bracket of, say, three images, that callback is called three times, once per image. And its fifth parameter, bracketSettings, tells you which of the bracket settings in your request this image corresponds to.
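A sketch of the -2/0/+2 EV auto exposure bracket described above, assuming the output's maxBracketedCapturePhotoCount is at least 3 and the iOS 11+ `AVVideoCodecType.jpeg` spelling (iOS 10 used `AVVideoCodecJPEG`). Passing 0 as the RAW pixel format requests processed images only. `makeExposureBracketSettings` is a hypothetical name.

```swift
import AVFoundation

/// Three JPEGs at -2, 0 and +2 EV, with optical lens stabilization when available.
func makeExposureBracketSettings(for photoOutput: AVCapturePhotoOutput) -> AVCapturePhotoBracketSettings {
    let biases: [Float] = [-2, 0, 2]
    // One bracketed-still-image settings object per exposure.
    let brackets = biases.map {
        AVCaptureAutoExposureBracketedStillImageSettings
            .autoExposureSettings(exposureTargetBias: $0)
    }
    let settings = AVCapturePhotoBracketSettings(
        rawPixelFormatType: 0,  // 0 = no RAW, processed images only
        processedFormat: [AVVideoCodecKey: AVVideoCodecType.jpeg],
        bracketedSettings: brackets)
    // Lens stabilization during the bracket (iPhone 6 Plus / 6s Plus).
    if photoOutput.isLensStabilizationDuringBracketedCaptureSupported {
        settings.isLensStabilizationEnabled = true
    }
    return settings
}
```

The processing callback then fires once per exposure, and comparing its bracketSettings argument against the `brackets` array tells you which exposure arrived.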

Okay. So we like the new AVCapturePhotoOutput so much that we want you to move over to it right away. In iOS 10 we're deprecating AVCaptureStillImageOutput and all of the flash-related properties of AVCaptureDevice; use AVCapturePhotoOutput and AVCapturePhotoSettings instead. Like I said, flash is part and parcel of the photo settings, so it's a much better programming interface. Move over as soon as you can.

Let's recap the benefits of the photo delegate before we move on.

They're good for easier bookkeeping.

Immediate settings resolution.

Confident request tracking. And it's good for Apple, because it's an expandable palette of callbacks for us. We can add new ways to call you back in the future, and that last bit is important to the next feature I'm going to talk about, which is Live Photos.

Apple.com has a great little blurb on Live Photos and what they are. It says, "A still photo captures an instant frozen in time.

With Live Photos, you can turn those instants into unforgettable living memories." The beautiful thing about Live Photos is that they appreciate in value the further you get from the memory.

So, in this picture, this is a great still image in and of itself.

Huge, disgusting sand crabs my nephew dug up on the beach. A great photo. But if I 3D Touch it -- Okay. So now I remember. It was a freezing day. He'd never been in the ocean before and his lips were blue. He'd been in for too long and his hands were shaking. And I also hear my brother's voice speaking at the beginning. So all of these things aid in memory recall, because more of my senses are being activated.

Then there are people finding inventive ways to use Live Photos as an artistic medium unto itself. This shot is a twist on the selfie. Our camera products team calls this The Doughnut Selfie. A high degree of difficulty to do it well. Also popular is the spinning swivel chair selfie with Live Photo. Try that one out.

I'm a big fan of the surprise reveal live photo, but unfortunately, my kids are too.

A three-second window is just way too tempting for my natural-born photobombers.

So Live Photos began life as a thought experiment in Apple's design studio. The premise was that even though we've got these remarkable screens now for sharing and viewing content, the photo experience itself has remained static for 150 years.

JPEGs that we swipe through on the screen are just digital versions of the chemicals on paper that we leaf through in our shoeboxes. And yet, it's the primary way that people store their memories. So isn't there something better that we can do? And after a lot of experimentation and prototyping, we converged on what this new media experience is.

A moment or a memory? Well, first and foremost, it is a still photo. It's as good as before: a 12-megapixel, full-resolution JPEG still image with the same quality as non-Live Photos. Let me emphasize that again. Live Photos get all the great secret sauce that Apple's non-Live Photos do, so you are not sacrificing anything by turning it on. Also a big deal was the idea of frictionless capture. That means there's nothing new to learn. You take photos the same way you always have: the same spontaneous frame the shot, push a button, nothing additional to think about.

A Live Photo is also a memory, though. It has to engage more senses than the static image. It has to aid in memory recall.

So it's nominally a short movie, a three-second movie with 1.5 seconds coming before the still and 1.5 coming after the still, and we take it at about screen resolution or targeting 1080p.

And it includes audio.

And we're constantly improving on the design. In iOS 9.1, we added this great feature of automatically trimming Live Photos in case you did a sweeping movement towards your shoes or your pockets. So now we auto-trim them and get rid of the parts that you don't want to see in the movie.

New in iOS 10, we've made it even better. Now all of the Live Photo movies are stabilized.

Also new in iOS 10, interruption-free music during captures. So if you happen to be playing -- Yeah. That's a good one. I like that one, too.

So in order to be both a moment and a memory, a Live Photo consists of two assets, as you would expect: a JPEG file and a QuickTime movie file. These two assets share a common UUID that uniquely pairs them together. The JPEG file's UUID is stored within the Apple Maker Note of the JPEG. And the movie asset is, like I said, nominally three seconds long, with a video track at roughly 1080p and a 4:3 aspect ratio.

It contains a timed metadata track with one single sample in it that corresponds to the exact time of the still photo within the movie's timeline.

It also contains a piece of top-level movie metadata that pairs it with the JPEG's metadata, called the QuickTime content identifier. Its value is a UUID-style string.

Okay. So what do you have to do to capture Live Photos? AVCapturePhotoOutput has a property called isLivePhotoCaptureSupported. You have to make sure it's supported, because it's not supported on all devices.

And currently it's only supported when you're using the photo session preset.

You opt in by setting AVCapturePhotoOutput.isLivePhotoCaptureEnabled to true. You have to opt in before you start the session running; otherwise, it will cause a disruptive reconfiguration of the session, and you don't want that. Also, if you want audio in your Live Photo movies, you have to add an AVCaptureDeviceInput for the microphone. Very important. Don't forget to do that.

Also not supported is simultaneous recording of regular movies using AVCaptureMovieFileOutput and Live Photos. If you have a movie file output in your session's topology, it will disable Live Photo capture. You configure a Live Photo capture the usual way.

It's got the default constructors you would expect, but additionally you specify a URL, the livePhotoMovieFileURL. This is where you want us to write the movie. It has to be in your sandbox, and it has to be accessible to you. You're not required to specify any livePhotoMovieMetadata, but you can if you'd like to. Here I gave an example of using the author metadata: I set myself as the author, so that the world will know that it's my movie. But you could also do interesting stuff like add GPS tagging to your movie.

So now let's talk about Live Photo-related delegate methods. Like I said, we have this expandable palette of delegate callbacks, and we're going to use it.
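Before getting to those callbacks, here's a minimal sketch of the configuration described above, assuming isLivePhotoCaptureEnabled was already set on the output and the session uses the photo preset. The movie goes to a unique file in the app's temporary directory, and the author tag uses `AVMetadataIdentifier.quickTimeMetadataAuthor`. `addMicrophone` and `makeLivePhotoSettings` are hypothetical helper names.

```swift
import AVFoundation

/// Adds the microphone so Live Photo movies get audio.
/// Call between beginConfiguration() and commitConfiguration().
func addMicrophone(to session: AVCaptureSession) throws {
    guard let mic = AVCaptureDevice.default(for: .audio) else { return }
    let micInput = try AVCaptureDeviceInput(device: mic)
    if session.canAddInput(micInput) {
        session.addInput(micInput)
    }
}

/// Settings for one Live Photo: the JPEG comes back in memory, the movie
/// is written to livePhotoMovieFileURL inside the app's sandbox.
func makeLivePhotoSettings(author: String) -> AVCapturePhotoSettings {
    let settings = AVCapturePhotoSettings()
    settings.livePhotoMovieFileURL = FileManager.default.temporaryDirectory
        .appendingPathComponent(UUID().uuidString)
        .appendingPathExtension("mov")

    // Optional movie metadata: tag the author.
    let authorItem = AVMutableMetadataItem()
    authorItem.identifier = .quickTimeMetadataAuthor
    authorItem.value = author as NSString
    settings.livePhotoMovieMetadata = [authorItem]
    return settings
}
```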

When capturing a Live Photo, your first callback lets you know that a Live Photo will be recorded by telling you the movie's resolved dimensions. See that? Now, in addition to the photo dimensions, you also know what dimensions the Live Photo movie is going to be. You receive the expected callbacks, including a JPEG being delivered to you in memory as before. But now we're going to give you some new ones.

A Live Photo movie is nominally three seconds with a still image right in the middle. That means up to 1.5 seconds after your capture request, you're going to receive a new callback. And this one has a strange name: didFinishRecordingLivePhotoMovieForEventualFileAtURL.

Try to parse that. It means the file hasn't been written yet but all the samples that need to be collected for the movie are done being collected. In other words, if you have a Live Photo badge up in your UI, this is an appropriate time to take it down. Let people know that they don't need to hold still anymore. A good time to dismiss the Live Photo badge.

And soon after, the movie file will be finished being written, and you'll get didFinishProcessingLivePhotoToMovieFileAtURL. That is a required callback if you're doing Live Photos. And now the movie's ready to be consumed.
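Driving a Live badge from those callbacks might look like this. It's a sketch with a hypothetical `LiveBadgeDelegate` and `setBadgeVisible` closure. It assumes one instance is reused for every request and that all callbacks arrive on the same queue, and it counts movies still recording rather than using a Bool, since Live Photo requests can overlap. The photo callback is implemented too, because a Live Photo still delivers its JPEG there.

```swift
import AVFoundation

/// Shows a "LIVE" badge while Live Photo movie samples are being collected.
final class LiveBadgeDelegate: NSObject, AVCapturePhotoCaptureDelegate {
    /// Receives true to show the badge, false to hide it.
    var setBadgeVisible: (Bool) -> Void = { _ in }
    private var moviesRecording = 0

    func photoOutput(_ output: AVCapturePhotoOutput,
                     willBeginCaptureFor resolvedSettings: AVCaptureResolvedPhotoSettings) {
        // Non-zero movie dimensions mean a Live Photo movie will be recorded.
        let dims = resolvedSettings.livePhotoMovieDimensions
        guard dims.width > 0, dims.height > 0 else { return }
        moviesRecording += 1
        setBadgeVisible(true)
    }

    func photoOutput(_ output: AVCapturePhotoOutput,
                     didFinishRecordingLivePhotoMovieForEventualFileAt outputFileURL: URL,
                     resolvedSettings: AVCaptureResolvedPhotoSettings) {
        // Samples collected (file not written yet): the user can stop holding still.
        moviesRecording = max(0, moviesRecording - 1)
        if moviesRecording == 0 { setBadgeVisible(false) }
    }

    func photoOutput(_ output: AVCapturePhotoOutput,
                     didFinishProcessingLivePhotoToMovieFileAt outputFileURL: URL,
                     duration: CMTime,
                     photoDisplayTime: CMTime,
                     resolvedSettings: AVCaptureResolvedPhotoSettings,
                     error: Error?) {
        // Required for Live Photos: the movie at outputFileURL is now complete.
    }

    func photoOutput(_ output: AVCapturePhotoOutput,
                     didFinishProcessingPhoto photoSampleBuffer: CMSampleBuffer?,
                     previewPhoto previewPhotoSampleBuffer: CMSampleBuffer?,
                     resolvedSettings: AVCaptureResolvedPhotoSettings,
                     bracketSettings: AVCaptureBracketedStillImageSettings?,
                     error: Error?) {
        // Still required: the JPEG half of the Live Photo arrives here.
    }
}
```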

Lastly you get the thumbs up, all done. We've delivered everything that we're going to.

So note that the JPEG portion of the Live Photo capture is delivered in the same way as static still photos. It comes as a sample buffer in memory, through the didFinishProcessingPhotoSampleBuffer callback, as we've already seen. If you want to write this to disk, it's a trivial job.

We have a class method in AVCapturePhotoOutput, jpegPhotoDataRepresentation, for repackaging JPEG sample buffers as a Data, that's with a capital D, suitable for writing to a JPEG file on disk. And you can see it in action here. I'm going to gloss over the second parameter to that function, the previewPhotoSampleBuffer. We'll discuss it in a little while.
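A minimal sketch of that round trip, assuming the iOS 10 class method `jpegPhotoDataRepresentation(forJPEGSampleBuffer:previewPhotoSampleBuffer:)`, which was deprecated in iOS 11 when AVCapturePhoto's `fileDataRepresentation()` took over. `writeJPEG` is a hypothetical helper, and it passes nil for the preview buffer.

```swift
import AVFoundation

/// Repackages the JPEG sample buffer from the processing callback as Data
/// and writes it atomically to `url`.
func writeJPEG(_ jpegSampleBuffer: CMSampleBuffer, to url: URL) throws {
    guard let data = AVCapturePhotoOutput.jpegPhotoDataRepresentation(
            forJPEGSampleBuffer: jpegSampleBuffer,
            previewPhotoSampleBuffer: nil) else {
        throw CocoaError(.fileWriteUnknown)
    }
    try data.write(to: url, options: .atomic)
}
```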

So here's a suggestion for you when you're doing Live Photos. Live Photo capture is an example of the kind of capture that delivers multiple assets. Sort of like a multi-part order, where you get the computer in one shipment and the dongle in another. When a capture delivers multiple assets, we have found it handy, in our own test apps, to instantiate a new AVCapturePhotoCaptureDelegate object for each photo request. Then, within that object, you can aggregate all of the things you're getting for this request: the sample buffer, the movie, et cetera. And there's a convenient place to dispose of that object: when you get the thumbs-up callback saying that we're done. That's just a helpful tip there.
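Here's one way that per-request aggregation could be sketched. `LivePhotoAggregator` and its `completion` closure are hypothetical. The class collects the JPEG data and the movie URL as they arrive and reports them once, from the guaranteed-last didFinishCapture callback; its owner still has to hold a strong reference to it until then.

```swift
import AVFoundation

/// One instance per Live Photo request; gathers both assets.
final class LivePhotoAggregator: NSObject, AVCapturePhotoCaptureDelegate {
    private(set) var jpegData: Data?
    private(set) var movieURL: URL?
    private let completion: (LivePhotoAggregator, Error?) -> Void

    init(completion: @escaping (LivePhotoAggregator, Error?) -> Void) {
        self.completion = completion
    }

    func photoOutput(_ output: AVCapturePhotoOutput,
                     didFinishProcessingPhoto photoSampleBuffer: CMSampleBuffer?,
                     previewPhoto previewPhotoSampleBuffer: CMSampleBuffer?,
                     resolvedSettings: AVCaptureResolvedPhotoSettings,
                     bracketSettings: AVCaptureBracketedStillImageSettings?,
                     error: Error?) {
        guard let buffer = photoSampleBuffer else { return }
        jpegData = AVCapturePhotoOutput.jpegPhotoDataRepresentation(
            forJPEGSampleBuffer: buffer,
            previewPhotoSampleBuffer: previewPhotoSampleBuffer)
    }

    func photoOutput(_ output: AVCapturePhotoOutput,
                     didFinishProcessingLivePhotoToMovieFileAt outputFileURL: URL,
                     duration: CMTime,
                     photoDisplayTime: CMTime,
                     resolvedSettings: AVCaptureResolvedPhotoSettings,
                     error: Error?) {
        if error == nil { movieURL = outputFileURL }
    }

    func photoOutput(_ output: AVCapturePhotoOutput,
                     didFinishCaptureFor resolvedSettings: AVCaptureResolvedPhotoSettings,
                     error: Error?) {
        // Guaranteed last: every asset for this request has been delivered.
        completion(self, error)
    }
}
```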

Once your assets have been written to disk, there are still several more steps you need to take to get the full Live Photo experience. Though the video complement is a standard QuickTime movie, it's not meant to be played start to finish with an AVPlayer like you would a regular movie. There's a special recipe for playing it back: it's supposed to ease in and out of the photo's still image time. And when you swipe between them in the Photos app, these particular kinds of assets show a little bit of movement.

So to get the full Live Photo playback experience, you need to use the Photos and PhotosUI frameworks. They have Live Photo classes for ingesting your JPEG and movie assets into the photo library and properly playing them back, for instance with PHLivePhotoView.
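For the ingest step, the Photos framework can take both halves of a Live Photo in a single creation request: the JPEG as the `.photo` resource and the movie as the `.pairedVideo` resource. A minimal sketch, assuming photo library authorization has already been granted; `saveLivePhoto` is a hypothetical name.

```swift
import Photos

/// Adds one Live Photo to the user's library from its JPEG data and paired movie.
func saveLivePhoto(jpegData: Data, movieURL: URL,
                   completion: @escaping (Bool, Error?) -> Void) {
    PHPhotoLibrary.shared().performChanges({
        let request = PHAssetCreationRequest.forAsset()
        request.addResource(with: .photo, data: jpegData, options: nil)

        let movieOptions = PHAssetResourceCreationOptions()
        movieOptions.shouldMoveFile = true  // move the temp movie rather than copy it
        request.addResource(with: .pairedVideo, fileURL: movieURL, options: movieOptions)
    }, completionHandler: completion)
}
```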

And new in iOS 10, the Photos framework lets you edit Live Photo content just as you would a still photo. That's great news, and I'd like to demo it.

Okay. So we have a bit of sample code here, the venerable AVCam, which has been out for five years, but now we have spruced it up, so that it has a specific photo mode and a movie mode. That's because you can only do Live Photos in photo mode. And notice it's got some badging at the top that tells you that Live Photo mode is on or off.

And you can switch cameras. I'm going to try to do the difficult doughnut selfie. Let's see how successful I am. So you have to start and then take it somewhere in the middle and then finish. So notice, while I was doing that, there was a live badge that came up, and that's using the callbacks that I talked to you about earlier.

So here it is. It was written to the photo library, and, nice, right? But that's not all we can do with it now. In iOS 9, when you tried to edit a Live Photo, you would lose the movie portion of it. But now you can edit it either natively in the Photos app or with code that you provide in your app, such as this little sample called LivePhotoEditor that I've included as a photo editing extension. I can apply a simple filter or trim the movie. This one just does a really simple thing, applying a tonal filter, but notice it didn't get rid of the movie. I can still play it. So, nice. You can now edit your Live Photos.

All right. AVCam now, like I said, has separate video and photo recording modes, so you get the best photo experience and the best movie-making experience. It shows the proper live badging technique that I was talking about. It also shows you how to write to the photo library, and that sample code is available right now. If you go to our session's page, you'll find it.

It was even Swiftified. If you want to know more about Live Photo editing, please come to session 505 on Thursday at 11. You'll hear all about it.

Okay. We also support a feature called Live Photo capture suspension. Here's a quick example of when this might be useful. Let's say you have an app that takes pictures and makes obnoxious foghorn sounds.

Okay, just go with me on this one. It takes pictures. Makes obnoxious foghorn sounds. Now let's say that on a timeline, your user takes a Live Photo here and then they play an obnoxious foghorn sound here. And then after it's done playing they take another Live Photo there.

So this is a problem because since the movie portions of photos one and two overlap with the obnoxious foghorn sound, you have now ruined two Live Photo movies. You're going to hear the end of the foghorn in one of them and the beginning of the foghorn in the other. That's no good. So to cope with this problem, you can set isLivePhotoCaptureSuspended to true, just before you do your obnoxious thing.

And that will cause any Live Photos in progress to be abruptly trimmed right at that point. Then, when the sound is done, you set isLivePhotoCaptureSuspended back to false, and that causes a nice clean break at the start point, so that no content from before that point will appear in your movies once you unsuspend. A nice little feature.
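In code, the suspend and unsuspend steps around the foghorn might look like this. `playWithoutRuiningLivePhotos` and its `playSound` parameter (a function that calls its completion handler when playback ends) are hypothetical, and the sketch assumes it and that completion run on the queue you use for session work.

```swift
import AVFoundation

/// Keeps a disruptive sound out of every Live Photo movie.
func playWithoutRuiningLivePhotos(photoOutput: AVCapturePhotoOutput,
                                  playSound: (@escaping () -> Void) -> Void) {
    // Suspension only makes sense when Live Photo capture is on.
    guard photoOutput.isLivePhotoCaptureEnabled else {
        playSound {}
        return
    }
    // Trims any in-progress Live Photo movies right here.
    photoOutput.isLivePhotoCaptureSuspended = true
    playSound {
        // Clean break: nothing from before this point appears in later movies.
        photoOutput.isLivePhotoCaptureSuspended = false
    }
}
```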

So let's talk about support. Where do we support LivePhotoCapture? We support it on all the recent iOS devices, and the easy way to remember it is every device that has a 12-megapixel camera, that's where we support Live Photos.

All right, onto our next major feature of the day and that's RAW Photo Capture. So to explain what RAW images are, I need to start with a very high level overview of how CMOS sensors work.

CMOS sensors collect photons of light through two-dimensional arrays of detectors.

The top layer of the array is called a color filter array and as light passes through from the top, it only allows one color component through, either red, green or blue, in a Bayer pattern.

Green is twice as prevalent in this little checkerboard here because our eyes are twice as sensitive to green light as they are to the other colors.

The bottom layer here is known as the sensor array.

Now, what actually gets stored in a RAW file is the intensity of red, green, or blue light that hit the sensor through each of those detectors. The Bayer pattern also needs to be stored, in other words, the arrangement of reds, greens, and blues, so that later on the image can be demosaiced. You have to store a lot of other metadata too, such as color information and exposure information.
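To make the Bayer arrangement concrete, here's a purely illustrative sketch, not an Apple API, that answers which color filter covers the photosite at (x, y) for each of the four 2x2 tile layouts. `BayerPattern`, `ColorChannel`, and `channel(at:_:pattern:)` are hypothetical names. Counting greens over any even-sized region shows the 2:1 green ratio described above.

```swift
/// The four 2x2 Bayer tile layouts, read left-to-right, top-to-bottom
/// (so rggb means R G on the first row and G B on the second).
enum BayerPattern: String {
    case rggb, grbg, gbrg, bggr
}

enum ColorChannel: Character {
    case red = "r", green = "g", blue = "b"
}

/// Which color filter sits over the photosite at (x, y); x and y must be >= 0.
func channel(at x: Int, _ y: Int, pattern: BayerPattern) -> ColorChannel {
    let tile = Array(pattern.rawValue)      // e.g. ["r", "g", "g", "b"]
    let index = (y % 2) * 2 + (x % 2)       // position within the 2x2 tile
    return ColorChannel(rawValue: tile[index])!
}
```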

And so RAW converters have a really hard job. A RAW converter basically takes all of this stuff and turns it into an RGB image.

Demosaicing is just the tip of the iceberg. A lot of stuff needs to happen before it can be presented onscreen.

So to draw an analogy, storing a RAW file is a lot like storing the ingredients to bake a cake, okay? And then you have to carry the ingredients around with you wherever you go. It's kind of heavy. It's kind of awkward. It takes some time to bake it every time. If you ask two different bakers to bake the cake using the same ingredients, you might get a slightly different tasting cake. But there are also some huge advantages to RAW. First and foremost, you have bake-time flexibility, right? So you're carrying the ingredients around but you can make a better cake next year.

There's no compression involved like there would be in BGRA or 420. You have more bits to work with. It's a 10-bit sensor RAW packaged in 14 bits per pixel instead of eight.

Also, you have lots of headroom for editing.

And some greater artistic freedom to make different decisions in post. So basically you're just deferring the baking until later.

Okay? Now what's JPEG? RAW images offer many benefits but they're not the be-all-end-all of existence. It's important to understand that there are tradeoffs involved when you choose RAW and that JPEGs are still a very attractive option. JPEGs are the cake, the lovingly baked Apple cake, just for you, and it's a pretty good cake. It's got all of the Apple goodness in it.

Much faster rendering. You don't have to carry as many ingredients around. You also get some secret sauce, like stabilization. As I mentioned, we use multiple image fusion for stabilization. You can't get that with a single RAW image, no matter how good it is, because we're fusing several images together. I guess it's kind of like a multilayer cake.

Okay? So yeah, you can't do that with a single image.

Also you get smaller file size.

So all of these things make JPEG a really attractive alternative and you should decide which one you want to use, which is better for your app.

We identify RAW formats using four-character codes, just like we do for regular pixel formats in the Core Video framework. We've added four new constants to CVPixelBuffer.h to describe the four different Bayer patterns that you'll encounter on our cameras, and they're listed there. Basically they describe the order of the reds, greens and blues in the checkerboard.
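Since those constants are four-character codes packed into an OSType (a UInt32), a tiny hypothetical helper can turn them back into readable strings, which is handy when logging what `availableRawPhotoPixelFormatTypes` returns. On Apple platforms, the 14-bit Bayer constants such as kCVPixelFormatType_14Bayer_RGGB should print as short codes like "rgg4".

```swift
/// Renders a four-character pixel format code as a String,
/// most significant byte first (the order the characters are written in).
func fourCharCode(_ code: UInt32) -> String {
    let bytes = [24, 16, 8, 0].map { UInt8(truncatingIfNeeded: code >> UInt32($0)) }
    return String(decoding: bytes, as: UTF8.self)
}
```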

How do you capture RAW with AVCapturePhotoOutput? It's pretty simple.

RAW is only supported when using the photo session preset, same as Live Photos.

It's only supported on the rear camera.

And we do support RAW brackets, so you can take a bracket of three RAW images, for instance.

To request a RAW capture, you create an AVCapturePhotoSettings object, but, surprise, surprise, there's a different constructor. This one takes a RAW pixel format.

So how do you decide which RAW format you should ask it to deliver to you? Well you can ask the PhotoOutput itself. It'll tell you here are my available RAW photo pixel formats, and you can select one of those.

The RAW format you specify has to be supported by the hardware. Also important: in these RAW settings, SIS has no meaning, because it's not a multiple-image fusion scenario. So isAutoStillImageStabilizationEnabled has to be set to false, or it will throw an exception. And isHighResolutionPhotoEnabled is also meaningless, because you're just getting the sensor RAW, so it must be set to false too.
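Putting those rules together, a RAW-only request might be sketched like this, assuming the session is using the photo preset and the rear camera. `makeRAWSettings` is a hypothetical name. It picks the first format the output advertises rather than hard-coding a Bayer layout, and returns nil when none is offered.

```swift
import AVFoundation

/// RAW-only settings, or nil if this configuration offers no RAW format.
func makeRAWSettings(for photoOutput: AVCapturePhotoOutput) -> AVCapturePhotoSettings? {
    // Ask the output what it supports instead of hard-coding a Bayer format.
    guard let rawFormat = photoOutput.availableRawPhotoPixelFormatTypes.first else {
        return nil
    }
    let settings = AVCapturePhotoSettings(rawPixelFormatType: rawFormat)
    // Both are meaningless for a single sensor RAW frame and must be off.
    settings.isAutoStillImageStabilizationEnabled = false
    settings.isHighResolutionPhotoEnabled = false
    return settings
}
```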

There's a separate delegate callback for RAW photos called didFinishProcessingRawPhotoSampleBuffer. And if you are really sharp-eyed and really fast, you'll notice that it has exactly the same parameters as the previous callback where you get the regular kind of image, the didFinishProcessingPhotoSampleBuffer callback. So now you might ask yourself, why did we bother making a whole new delegate callback for RAW sample buffers if it has the exact same parameters as the other one? There's a good reason, and that reason is RAW plus processed image support. Just like DSLR and mirrorless cameras, we support a workflow where you get both RAW and JPEG simultaneously. That's what I mean by processed image.

The ability to shoot RAW and JPEG is a professional feature, and kind of a big deal. So you can get RAW plus a processed image. It doesn't have to be a JPEG; it could be BGRA or 420.

The processed image is delivered to the other callback, didFinishProcessingPhotoSampleBuffer, and the RAW is delivered to the one with RAW in the name. RAW plus processed brackets are supported, so see if you can wrap your head around that: I'm doing a bracket and I'm asking for RAW plus JPEG. If I'm doing a bracket of three, I'm going to get three RAWs and three JPEGs. RAW plus still image stabilization, though, is not supported.
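A minimal delegate sketch that keeps the two streams apart. The talk uses the iOS 10 Objective-C selector names; in Swift 4 and later SDKs the same callbacks are spelled `photoOutput(_:didFinishProcessingPhoto:previewPhoto:resolvedSettings:bracketSettings:error:)` and `photoOutput(_:didFinishProcessingRawPhoto:previewPhoto:resolvedSettings:bracketSettings:error:)`, both deprecated since iOS 11 in favor of `photoOutput(_:didFinishProcessingPhoto:error:)`. The AVFoundation part is guarded with `#if os(iOS)`. `RawPlusProcessedCollector`, `ExpectedCallbacks` and `expectedCallbacks(...)` are hypothetical names; the latter two only encode the "three RAWs and three JPEGs" arithmetic.

```swift
/// Hypothetical bookkeeping model (not an AVFoundation API): how many times
/// each callback should fire for one request.
struct ExpectedCallbacks: Equatable {
    var raw: Int
    var processed: Int
}

func expectedCallbacks(bracketCount: Int, wantsRaw: Bool, wantsProcessed: Bool) -> ExpectedCallbacks {
    let shots = max(bracketCount, 1)  // a non-bracketed capture counts as one shot
    return ExpectedCallbacks(raw: wantsRaw ? shots : 0,
                             processed: wantsProcessed ? shots : 0)
}

#if os(iOS)
import AVFoundation

/// Collects RAW and processed buffers separately (iOS 10-era callbacks).
final class RawPlusProcessedCollector: NSObject, AVCapturePhotoCaptureDelegate {
    private(set) var rawBuffers: [CMSampleBuffer] = []
    private(set) var processedBuffers: [CMSampleBuffer] = []

    // Processed images (JPEG, BGRA, 420) arrive here.
    func photoOutput(_ output: AVCapturePhotoOutput,
                     didFinishProcessingPhoto photoSampleBuffer: CMSampleBuffer?,
                     previewPhoto previewPhotoSampleBuffer: CMSampleBuffer?,
                     resolvedSettings: AVCaptureResolvedPhotoSettings,
                     bracketSettings: AVCaptureBracketedStillImageSettings?,
                     error: Error?) {
        if let buffer = photoSampleBuffer { processedBuffers.append(buffer) }
    }

    // RAW images arrive here, once per RAW in the request.
    func photoOutput(_ output: AVCapturePhotoOutput,
                     didFinishProcessingRawPhoto rawSampleBuffer: CMSampleBuffer?,
                     previewPhoto previewPhotoSampleBuffer: CMSampleBuffer?,
                     resolvedSettings: AVCaptureResolvedPhotoSettings,
                     bracketSettings: AVCaptureBracketedStillImageSettings?,
                     error: Error?) {
        if let buffer = rawSampleBuffer { rawBuffers.append(buffer) }
    }
}
#endif
```

Keeping separate arrays means a RAW plus JPEG bracket of three should end with three entries in each, which is easy to check against `expectedCallbacks(bracketCount: 3, wantsRaw: true, wantsProcessed: true)`.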

Okay, so to capture RAW plus JPEG, as you might expect, there's yet another constructor of AVCapturePhotoSettings. In this one, you specify both the RAW pixel format and the processed format that you want.

Here I'm choosing JPEG as the output format and a RAW format.

Now when you request RAW plus JPEG, highResolutionPhotoEnabled does mean something, because now it applies to the JPEG.
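A sketch of both constructors, assuming an already configured `AVCapturePhotoOutput` in a session that uses the photo preset on the rear camera. It uses the iOS 10-era property names `isAutoStillImageStabilizationEnabled` and `isHighResolutionPhotoEnabled`, which later SDKs deprecate. The `RawRequest` type and `ruleViolations(in:)` are a hypothetical, simplified restatement of the rules above; the real checks happen inside AVFoundation, which raises an exception rather than returning a list.

```swift
/// Hypothetical, simplified model of the RAW rules described in the talk.
struct RawRequest {
    var includesProcessedImage: Bool   // RAW + JPEG/BGRA/420 versus RAW only
    var stabilizationEnabled: Bool
    var highResolutionEnabled: Bool
}

func ruleViolations(in request: RawRequest) -> [String] {
    var problems: [String] = []
    if request.stabilizationEnabled {
        problems.append("still image stabilization is not supported with RAW")
    }
    if !request.includesProcessedImage && request.highResolutionEnabled {
        problems.append("high resolution only applies to a processed image")
    }
    return problems
}

#if os(iOS)
import AVFoundation

/// RAW only: stabilization must be turned off explicitly (it defaults to on).
func makeRawOnlySettings(for output: AVCapturePhotoOutput) -> AVCapturePhotoSettings? {
    guard let rawFormat = output.availableRawPhotoPixelFormatTypes.first else { return nil }
    let settings = AVCapturePhotoSettings(rawPixelFormatType: rawFormat)
    settings.isAutoStillImageStabilizationEnabled = false
    settings.isHighResolutionPhotoEnabled = false
    return settings
}

/// RAW + JPEG: the processed-format dictionary describes the JPEG half, so
/// high resolution is meaningful again (if the output has it enabled).
func makeRawPlusJPEGSettings(for output: AVCapturePhotoOutput) -> AVCapturePhotoSettings? {
    guard let rawFormat = output.availableRawPhotoPixelFormatTypes.first else { return nil }
    let settings = AVCapturePhotoSettings(rawPixelFormatType: rawFormat,
                                          processedFormat: [AVVideoCodecKey: AVVideoCodecType.jpeg])
    settings.isAutoStillImageStabilizationEnabled = false
    settings.isHighResolutionPhotoEnabled = output.isHighResolutionCaptureEnabled
    return settings
}
#endif
```

Copying `isHighResolutionCaptureEnabled` from the output avoids asking for a high-resolution JPEG the output was not configured to produce.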

All right. Let's talk about storing RAW buffers. They're not that useful if all you can do is work with them in memory.

So rather than introduce an Apple proprietary RAW file format, like so many other camera vendors do, we've elected to use Adobe's Digital Negative (DNG) format for storage.

DNG is a standard way of just storing bits and metadata. It doesn't imply a file format in any other way. So going back to our cake-baking analogy, a DNG is just like a standard box for holding ingredients.

It's still up to individual RAW converters to decide how to interpret those ingredients. So the same DNG opened in one third-party app might look different when opened in another. Storing in DNG, on the other hand, is pretty trivial.

You just call the AVCapturePhotoOutput class method dngPhotoDataRepresentation, passing the RAW buffer you received in the delegate callback.

This creates a Data object (capital D) in memory that can be written to a file.

And this API always writes compressed DNG files to save space.
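A sketch of the storage step. `writeDNG(_:into:)` and `saveRawBuffer(_:preview:)` are hypothetical helper names; the only AVFoundation call is the class method `AVCapturePhotoOutput.dngPhotoDataRepresentation(forRawSampleBuffer:previewPhotoSampleBuffer:)` (iOS 10, deprecated in iOS 11 in favor of `AVCapturePhoto.fileDataRepresentation()`). Its second argument is an optional preview buffer to embed as a thumbnail; passing `nil` is allowed.

```swift
import Foundation

/// Writes already-encoded DNG bytes to a uniquely named .dng file.
/// Plain Foundation, so this part also runs off-device.
func writeDNG(_ data: Data, into directory: URL) throws -> URL {
    let url = directory
        .appendingPathComponent(UUID().uuidString)
        .appendingPathExtension("dng")
    try data.write(to: url, options: .atomic)  // atomic: no half-written files
    return url
}

#if os(iOS)
import AVFoundation

/// Call from the RAW delegate callback. Returns nil if encoding fails.
func saveRawBuffer(_ rawSampleBuffer: CMSampleBuffer,
                   preview: CMSampleBuffer?) throws -> URL? {
    guard let dngData = AVCapturePhotoOutput.dngPhotoDataRepresentation(
            forRawSampleBuffer: rawSampleBuffer,
            previewPhotoSampleBuffer: preview) else { return nil }
    return try writeDNG(dngData, into: FileManager.default.temporaryDirectory)
}
#endif
```

Writing to the temporary directory is only a placeholder; a real app would move the file somewhere durable or hand it to the Photo Library.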

All right. I feel a demo coming on.

Okay. So for RAW capture, we've updated another venerable piece of sample code and that's AVCamManual. We released this one in 2014, when we showed off our manual control APIs. So it lets you choose focus, exposure, white balance, and you can manually or auto control those. And then there's a new thing in the HUD on the left side that lets you select either RAW off or on.

So you can choose to shoot RAW photos in this app.

Let's go to exposure. Let me see if I can purposely overexpose a little bit, and then I'll take a photo. And now I'm going to leave the app.

I'm going to go to an app called RAWExpose. Now this was not written by the AV Foundation Team. This was written by the Core Image Team, but they graciously let me borrow it for my demo. And we'll go down and we can see the picture that we just took.

Now this one is a RAW. It's reading the DNG file. And we can do things with it that we could never do with the JPEGs, like restoring the EV to a more sane value. We can adjust the temperature and tint. All of these things are being done in post and are completely reversible. You can also see what it looks like with or without noise reduction. All of these are part of a new Core Image API for editing RAW. Okay, let's go back to slides.

The AVCamManual sample code is available right now. You can go get it. It's associated with this session's slides.

And also, if you want to learn more about RAW editing, come to the same session I mentioned before, session 505, which covers both of these. The second part is RAW Processing with Core Image. It's a great session.

Where is RAW photo capture supported? By happy coincidence, it's exactly the same products where we support Live Photos. So anything with a 12-megapixel camera can do RAW photos.

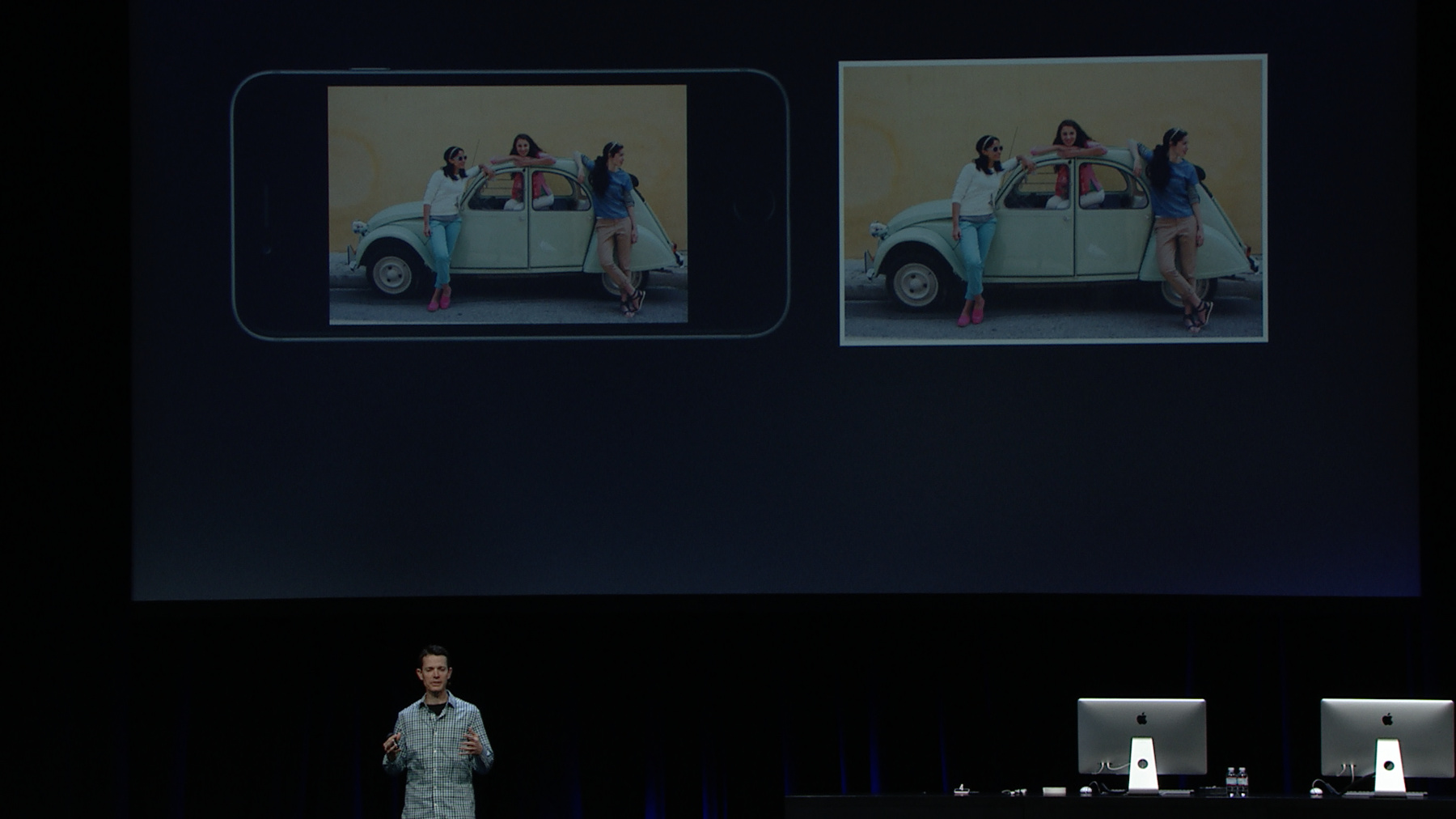

Onto our next topic, which is capturing preview images, also known as thumbnails. Photography apps commonly take pictures and want to quickly show a preview of the results, such as Apple's own Camera app.

So take a look in the bottom left while this is playing.

And you see, as soon as you hit the shutter button, almost instantaneously you have a preview in the image well on the bottom left. That's good. It's comforting to your users to know that what they did just worked.

It gives them instant feedback.

Also, a number of image processing algorithms, such as Core Image's rectangle and QR code detectors, work better with smaller uncompressed images. They don't need the full 12-megapixel JPEG to find what they're looking for.

Unfortunately, there is an inherent impedance mismatch here.

You request a high-quality JPEG because that's what you want to store on disk. That's what you want to survive, but you also want to get a preview on screen really fast.

So if you have to do that work yourself, you're decompressing the JPEG. You're downscaling it. And finally displaying it.

All of this takes time and buffer copies and added complexity.

It would be nicer to get both the high-quality JPEG for storage and a smaller version of it delivered directly from the camera, not decompressed from the JPEG.

Then you could skip all those steps and go straight to display with the preview image.

And this is exactly the workflow that we support in AVCapturePhotoOutput.

The delegate callback can deliver a preview image along with the processed or RAW image.

The preview is uncompressed, so it's 420f, 420v or BGRA, your choice. If you know the size you want, you can specify the dimensions. Or if you're not sure what a good preview size would be for the current platform, the PhotoOutput can pick a good default size for you.

Here's some sample code showing how to request a preview image. After creating a photo settings instance in one of the usual ways, you can select a previewPixelType. Again, the photo settings themselves can tell you which formats are available, and they are sorted so that the most optimal one is first. So here, I'm getting the very first one from the array.

And when I say optimal, I mean the one that requires the fewest conversions from the native camera.

You create a CVPixelBuffer attributes dictionary with that format type key, and that first part is required. So if you want preview images, you have to at least specify the format that you want.

Optionally, you can also specify a width and a height if you want a custom size. And you don't need to know the exact aspect ratio of the image that you're getting. Here I just specified 160 by 160. I don't really expect to get a square out; I'm using those values as the maximum for both width and height. AVCapturePhotoOutput will resize the preview image so that it fits in the box, preserving aspect ratio.

Retrieving preview images is also very straightforward. Here we've requested a JPEG plus a preview image at 160 by 160.

So when we get our first callback saying we've received your order, willBeginCaptureForResolvedSettings, you get an AVCaptureResolvedPhotoSettings object. If you notice, its previewDimensions are not 160 by 160. They're 160 by 120, because they've been resolved to something aspect-ratio appropriate for the 12-megapixel photo that you want. When the didFinishProcessingPhotoSampleBuffer callback finally comes, you get not one but two images. The full-sized JPEG is the first parameter, and the previewPhotoSampleBuffer is the second. So if you're following along and adding things up in your mind: if you do a RAW plus JPEG bracket of n images with a preview image, you're going to get n RAWs, n JPEGs and n preview images.
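A sketch of requesting and checking a 160 × 160 preview. The iOS part uses `availablePreviewPhotoPixelFormatTypes`, `previewPhotoFormat` and `AVCaptureResolvedPhotoSettings.previewDimensions`; `makeJPEGSettingsWithPreview()`, `PreviewLogger` and `aspectFit(...)` are hypothetical names. `aspectFit` only reproduces the arithmetic behind "160 by 120", assuming a 4032 × 3024 12-megapixel frame; AVFoundation's exact rounding is its own business.

```swift
/// Scale (width, height) down uniformly so it fits inside the max box.
func aspectFit(width: Int, height: Int,
               maxWidth: Int, maxHeight: Int) -> (width: Int, height: Int) {
    let scale = min(Double(maxWidth) / Double(width), Double(maxHeight) / Double(height))
    return (Int((Double(width) * scale).rounded()),
            Int((Double(height) * scale).rounded()))
}

#if os(iOS)
import AVFoundation

func makeJPEGSettingsWithPreview() -> AVCapturePhotoSettings {
    let settings = AVCapturePhotoSettings(format: [AVVideoCodecKey: AVVideoCodecType.jpeg])
    // The list is ordered so that the most efficient format comes first.
    if let previewType = settings.availablePreviewPhotoPixelFormatTypes.first {
        settings.previewPhotoFormat = [
            kCVPixelBufferPixelFormatTypeKey as String: previewType,  // required
            kCVPixelBufferWidthKey as String: 160,                    // optional max width
            kCVPixelBufferHeightKey as String: 160,                   // optional max height
        ]
    }
    return settings
}

final class PreviewLogger: NSObject, AVCapturePhotoCaptureDelegate {
    // Fires first; the resolved settings carry the final preview size.
    func photoOutput(_ output: AVCapturePhotoOutput,
                     willBeginCaptureFor resolvedSettings: AVCaptureResolvedPhotoSettings) {
        let dims = resolvedSettings.previewDimensions
        print("preview will be \(dims.width)x\(dims.height)")
    }
}
#endif
```

The same box works for portrait frames: fitting 3024 × 4032 into 160 × 160 yields 120 × 160.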

Another great use of the preview image is as an embedded thumbnail in your high-quality JPEG or DNG files.

In this code sample, I'm using the previewPhotoSampleBuffer parameter of my didFinishProcessingRawPhotoSampleBuffer callback as an embedded thumbnail in the DNG file.

So when I call PhotoOutput's dngPhotoDataRepresentation, I'm passing that as the second parameter.

You should always do this, okay? Embedding a thumbnail image is always a good idea because you don't know where it's going to be viewed. Some apps can handle looking at the DNG bits, the RAW bits, and some can't. But if you have an embedded thumbnail in there, everyone's going to be able to look at something. You definitely want to do it if you're adding a DNG to the Photo Library so that it can give you a nice quick preview.

Preview image delivery is supported everywhere.

All right, onto the last topic of the day, which is wide color. And as you might suspect, it's a wide topic.

You've no doubt heard about the beautiful new True Tone display on our 9.7-inch iPad Pro.

It's a wide-gamut display, on par with the 4K and 5K iMacs. It's capable of displaying strikingly vivid reds and yellows and deeply saturated cyans and greens.

To take advantage of the display's extended color range, we introduced color management for the first time in iOS 9.3. I'm not sure if you were aware of that, but we're now color managed for the iPad Pro 9.7.

And with displays this pretty, it only makes sense to also capture photos with equally wide color so that we enhance the viewing experience. And so that when you look at those several years from now, you've got more color information.

Beginning in iOS 10, photo captures on the iPad Pro 9.7 will magically become wide color captures.

Let me give you a brief overview of what wide color means, wide color terminology, starting with the concept of a color space.

A color space describes an environment in which colors are represented, ordered, compared, or computed. The most common color space used in computer displays is sRGB. The s stands for standard, so standard RGB.

It's based on the international spec ITU-R BT.709.

It has a gamma of roughly 2.2 and a white point of 6500 kelvin (D65). sRGB does a really good job of representing many common colors, like faces, sky and grass, but there are many colors that sRGB does not reproduce very well. For instance, more than 40% of pro football jerseys are outside of the sRGB gamut.
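The "roughly 2.2" gamma is shorthand for the piecewise sRGB transfer curve: a short linear segment near black, then a 2.4 power with an offset. This pure-Swift sketch (function names are illustrative) compares the exact curve with a plain 2.2 power law over the 0 to 1 range.

```swift
import Foundation

/// Exact sRGB decoding: encoded value (0...1) to linear light (0...1).
func srgbToLinear(_ v: Double) -> Double {
    v <= 0.04045 ? v / 12.92 : pow((v + 0.055) / 1.055, 2.4)
}

/// The "gamma of roughly 2.2" approximation.
func gamma22ToLinear(_ v: Double) -> Double {
    pow(v, 2.2)
}

/// Largest absolute difference between the two curves on an evenly spaced grid.
func maxCurveGap(steps: Int) -> Double {
    (0...steps)
        .map { Double($0) / Double(steps) }
        .map { abs(srgbToLinear($0) - gamma22ToLinear($0)) }
        .max() ?? 0
}
```

On a 101-point grid the gap stays below 0.01 in linear light, which is why "2.2" is a fair summary; the linear toe matters mostly for very dark values.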

Who knew? The iPad Pro 9.7 supports wide color using a new color space that we call Display P3. It's similar to the SMPTE standard DCI-P3, which is the color space used in digital cinema projectors. The color primaries are the same as DCI-P3, but it differs in gamma and white point.

The gamma and white point are identical to sRGB's.

Why would we do that? The DCI-P3 white point is shifted toward green. It was chosen to maximize brightness in dark home theater situations, and we found that with the white point at 6500 K, we get a more compatible superset of the sRGB standard. On this slide you can see sRGB in gray, and superimposed around it, Display P3. It does a nice job of broadly covering a superset of sRGB, and that's why we chose it.

Using Apple's ColorSync Utility on OS X, you can see a visual representation of Display P3 and compare it with sRGB in three dimensions. I took a little screen capture here to show you. I select Display P3, then use "Hold for comparison" (that's a neat trick), and then select sRGB. Now one is superimposed on the other: sRGB inside and Display P3 on the outside, so you get a feel for just how wide Display P3 is compared to sRGB. The range of representable colors is visibly bigger.
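Because Display P3 and sRGB share the D65 white point and the same transfer curve, converting between them in linear light is a single 3 × 3 matrix. The sketch below uses the commonly published linear-P3-to-linear-sRGB coefficients, rounded to seven places (treat them as approximate); `p3ToSRGB`, `convert(_:with:)` and `isInsideSRGBGamut(linearP3:)` are illustrative names. A P3 color that lands outside 0...1 after conversion cannot be shown in sRGB.

```swift
/// Linear Display P3 to linear sRGB, both with a D65 white point (rounded).
let p3ToSRGB: [[Double]] = [
    [ 1.2249402, -0.2249402, 0.0000000],
    [-0.0420570,  1.0420570, 0.0000000],
    [-0.0196376, -0.0786360, 1.0982736],
]

/// Multiply a 3-component color by a 3x3 matrix (row-major).
func convert(_ rgb: [Double], with m: [[Double]]) -> [Double] {
    m.map { row in zip(row, rgb).reduce(0.0) { $0 + $1.0 * $1.1 } }
}

/// True when every converted component stays within 0...1 (plus tolerance).
func isInsideSRGBGamut(linearP3 rgb: [Double], tolerance: Double = 1e-6) -> Bool {
    convert(rgb, with: p3ToSRGB).allSatisfy { $0 >= -tolerance && $0 <= 1 + tolerance }
}
```

Each row sums to 1, so white maps to white, which is the practical payoff of keeping the sRGB white point. Pure P3 red, by contrast, maps to roughly (1.22, -0.04, -0.02): more saturated than any sRGB red.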

So now let's get down to the nuts and bolts of capturing Display P3 content. For highest fidelity, the color space of captured content has to be determined at the source. It's not something that can flow down as sRGB and then be up-converted to wide. It has to start wide.

So, as you might expect, the color space is fundamentally a property of the AVCaptureDevice, the source.

So we're going to spend some time talking about the AVCaptureDevice, and we're also going to talk about the AVCaptureSession. The session is where wide color can be chosen automatically for the session configuration as a whole. Okay. AVCaptureDevice is how AV Foundation represents a camera or a mic.

Each AVCaptureDevice has a formats property. It's an array of AVCaptureDeviceFormat objects, which represent the formats that the device can capture in.

They come in pairs, as you see here. For each resolution and frame rate, there's a 420v version and a 420f version: v for video range, 16 to 235, and f for full range, 0 to 255.
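To show what the v and f suffixes mean for 8-bit luma, here is a minimal pure-Swift sketch that stretches a video-range value (black at 16, white at 235) onto the full 0 to 255 range. The function name is illustrative, and it covers luma only; in video range the chroma channels use a different span.

```swift
/// Map 8-bit video-range luma (16...235) onto full range (0...255).
/// Out-of-range inputs are clamped first.
func videoRangeToFullRange(_ y: UInt8) -> UInt8 {
    let clamped = min(max(Int(y), 16), 235)
    let scaled = Double(clamped - 16) * 255.0 / 219.0  // 219 = 235 - 16
    return UInt8(scaled.rounded())
}
```

Full range simply spends all 256 code values on the picture, which is one reason the f formats are the ones offered for photography.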

So new in iOS 10, AVCaptureDeviceFormats have a supportedColorSpaces property.

It's an array of numbers with the possible values of 0 for sRGB or 1 for P3 D65.

We refer to it as Display P3, but in the API it's called P3 D65, where D65 refers to the standard D65 white point of roughly 6500 kelvin. On an iPad Pro 9.7, the 420v formats only support sRGB.

But the full-range 420f formats support either sRGB or Display P3. The device has a settable activeFormat property. That's not new.

So one of the formats in the list is always the activeFormat. As you can see here, I've put a yellow box around the active one. It happens to be the 12-megapixel 30 FPS version. If that activeFormat, the f format, supports Display P3, then there's a new property you can set called activeColorSpace. And if the activeFormat supports it, you get wide color flowing from your source to all outputs in the session.

That was long-winded, but what I want you to take home is that hopefully you don't have to do any of this. Most clients will never need to set the activeColorSpace directly, because AVCaptureSession will try to do it for you automatically.

So in iOS 10, AVCaptureSession has a new property with a long name: automaticallyConfiguresCaptureDeviceForWideColor.

When does it want to choose wide color for you? Wide color, in iOS 10, is only for photography. Let me say that again.

Wide color, in iOS 10, is only for photography, not for video. I'll explain why in a minute.

The session can automatically decide whether to configure the whole session for wide color. Depending on your configuration, it will set the activeColorSpace of your device to P3 on your behalf.

You have to have a PhotoOutput in your session for this to happen. If you don't have a PhotoOutput, you're obviously not doing photography, so you don't need wide color. There are some caveats here. Like, if you start adding other outputs to your session, maybe it's not as clear what you're trying to do.

If you add an AVCaptureVideoPreviewLayer, the session will still automatically pick Display P3 for you, because you're just previewing and also doing photography. If you have a MovieFileOutput and a PhotoOutput, now it's ambiguous. You might really care more about movies, so it will not automatically pick Display P3 for you. VideoDataOutput is a special case where we deliver buffers to you via a callback, and there the session will only pick Display P3 if you're using the photo preset. If you're doing that, it's pretty sure you mean to do photography with those buffers.
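The decision just described can be summarized as a small truth table. This is a hypothetical, simplified model written for illustration, not an AVFoundation API; it ignores other factors, such as whether the active format supports Display P3 at all.

```swift
/// Kinds of session members discussed above (illustrative only).
enum SessionMember: Hashable { case photoOutput, previewLayer, movieFileOutput, videoDataOutput }

/// Hypothetical model: would the session choose Display P3 on its own?
func sessionWouldChooseDisplayP3(members: Set<SessionMember>,
                                 usesPhotoPreset: Bool,
                                 automaticConfigurationEnabled: Bool) -> Bool {
    guard automaticConfigurationEnabled else { return false }   // you opted out
    guard members.contains(.photoOutput) else { return false }  // not doing photography
    if members.contains(.movieFileOutput) { return false }      // ambiguous: movies may matter more
    if members.contains(.videoDataOutput) { return usesPhotoPreset }
    return true                                                  // photo output, maybe a preview layer
}
```

Reading the model top to bottom mirrors the talk: no PhotoOutput means no wide color, a MovieFileOutput vetoes it, and a VideoDataOutput is trusted only with the photo preset.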

If you really, really want to, you can force the capture device to do wide color and here's how. First you would tell the session stop automatically doing that thing for me. Get out of my way.

And then you would go to the device and set the activeFormat yourself to a format that supports wide color. And then you would set the activeColorSpace to P3.
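A sketch of the three manual steps, to be used only with the caveats that follow in mind. It assumes `session` and `device` are already set up. `AVCaptureDevice.Format.supportedColorSpaces` is used in its Swift 4+ form (`[AVCaptureColorSpace]`). The generic `bestWideColorCandidate` helper and the policy of preferring the largest P3-capable format are hypothetical choices made for this example.

```swift
/// Hypothetical helper: among candidates that support wide color,
/// return the one with the largest area (nil if none qualify).
func bestWideColorCandidate<T>(_ candidates: [T],
                               supportsWideColor: (T) -> Bool,
                               area: (T) -> Int) -> T? {
    candidates.filter(supportsWideColor).max { area($0) < area($1) }
}

#if os(iOS)
import AVFoundation

func forceDisplayP3(session: AVCaptureSession, device: AVCaptureDevice) throws {
    session.beginConfiguration()
    defer { session.commitConfiguration() }

    // 1. Stop the session from choosing the color space automatically.
    session.automaticallyConfiguresCaptureDeviceForWideColor = false

    // 2. Pick the largest format that lists Display P3 (P3_D65).
    guard let format = bestWideColorCandidate(
        device.formats,
        supportsWideColor: { $0.supportedColorSpaces.contains(.P3_D65) },
        area: { fmt in
            let dims = CMVideoFormatDescriptionGetDimensions(fmt.formatDescription)
            return Int(dims.width) * Int(dims.height)
        }) else { return }

    // 3. Set the format first, then the color space, while holding the lock.
    try device.lockForConfiguration()
    defer { device.unlockForConfiguration() }
    device.activeFormat = format
    device.activeColorSpace = .P3_D65
}
#endif
```

Setting the color space after the format matters, because activeColorSpace must be one the active format supports.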

Once you do this, wide color buffers will flow to all outputs that accept video data. That includes VideoDataOutput, MovieFileOutput, even the deprecated AVCaptureStillImageOutput.

So while you can forcibly set the device's activeColorSpace to display P3, I want to strongly caution you against doing it unless you really, really know what you're doing.

The reason is wide color is for photos because we have a good photo ecosystem story for wide color, not so much for video.

So the main worry with Display P3 content is that the consumer has to be wide color-aware or your content will be rendered as sRGB, and the colors will all look wrong. They'll be rendered badly.

Most video playback services are not color-aware. So if you store a wide Display P3 movie and then you try to play it back with some service, it will likely render the colors wrong.

So if you do choose to do this, make sure that whatever consumes your VideoDataOutput buffers is color-aware: that it propagates the color tags and does something sensible with them.

And if you do choose to capture Display P3 movies using the MovieFileOutput, just be aware that they may render incorrectly on other platforms. We do allow this, though, because we recognize that it's important for some pro workflows to be able to do wide color movies as well.

So now dire warnings out of the way, I can tell you that we do have a very good solution for photos in sharing wide colors.

Be aware that wide color JPEGs use a Display P3 profile, so consumers of these images also need to be color-aware. The good news is that photo services, in general, are color-aware these days. iCloud Photo Library is one of them. It can intelligently convert your images to sRGB on devices that don't support wide color, but store the full wide color version in the cloud. We're also an industry in transition right now, so some photo services don't understand wide color, but most of them are at least smart enough to render it as sRGB.

For mixed sharing scenarios, like say sending a photo via Messages or Mail. You don't know where it's going. It might be going to multiple devices. Some of them might support wide color. Some might not. So for this situation, we have added a new service called Apple Wide Color Sharing Profile.

Your content is processed so that we generate a content-specific, table-based ICC profile for that particular JPEG.

And what's nice about it is if it's rendered by someone who doesn't know about wide color, the part that's in the sRGB gamut renders absolutely correctly. The extra information is carried in the extra ICC profile information in a way that they can recover the wide color information with minimal quality loss. You can learn more about how to share wide color content in sessions 505 and 712. Both of those are on Thursday. I've talked about the first one three times now. The working with wide color one is also an excellent session.

On iPad Pro 9.7, the AVCapturePhotoOutput supports wide color broadly. It supports it in 420f, BGRA and JPEG, just not for 420v. So if you have your session configured to give Display P3 but then you say you want a 420v image, it will be converted to sRGB. Live Photos support wide color. Both the still and the movie. These are special movies. This is part of the Apple ecosystem. So those are just going to be wide color.

And bracketed captures also support wide color, too.

Here's an interesting twist. While I've been talking about iPad Pro, iPad Pro, iPad Pro, we support RAW. And RAW capture is inherently wide color because it has all of those extra bits of information. We store it in the sensor primaries and there is enough color information there to be either rendered as wide or sRGB. Again, you're carrying the ingredients around with you. You can decide later if you want to render it as wide or sRGB. So shooting RAW and rendering in post, you can produce wide color content on lots of iOS devices, not just iPad Pro.

As I just said, you can learn more on wide color, in general, not just sharing but all about wide color. The best session to view is the working with wide color one on Thursday afternoon.

Use AVCapturePhotoOutput for improved usability.

And we talked about four main feature areas today. We talked about capturing Live Photos in your app, RAW, RAW + JPEG, DNG.

Nice little preview images for faster rendering.

And wide color photos.

And believe it or not, one hour was not enough to cover everything that we wanted to cover. So we've done an addendum to this session. It's already recorded. It should already be online.

It's a slide plus voiceover thing that we're calling a Chalk Talk. And it tells you about in-depth topics that we didn't have time for. Scene monitoring in AVCapturePhotoOutput.

Resource preparation and reclamation. And then an unrelated topic, changes to camera privacy policy in iOS 10. So please take a look at that video. It's about 20 minutes long.

More information. All you need to remember is the 501 at the end. Go to that and you'll find, I believe, seven pieces of sample code as well as new documentation for AVCapturePhotoOutput. The documentation folks have been working very hard and they've documented the heck out of it. And here are the related sessions one more time.

The one that is the Chalk Talk, we're calling AVCapturePhotoOutput beyond the basics. And you can look at that any time you want. All right. Have a great rest of the show, and thank you for coming. [ Applause ]
