Modernize PCI and SCSI drivers with DriverKit

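Keep your code out of the kernel and use DriverKit to give customers a safe and reliable accessory experience. Learn how to create low-level drivers that support PCI devices and SCSI controllers, and find out how to achieve great performance with DriverKit on macOS Big Sur.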

Hello and welcome to WWDC. Hi, my name is Kevin, and I'll be joined later by my colleague Madhu. Today we'll be going over what's new in DriverKit. First, I'll give a brief recap of System Extensions with DriverKit. Then I'll talk about the new PCIDriverKit framework. After that, I'll hand it over to Madhu, who will go in depth on writing a SCSI controller driver using PCIDriverKit and the new SCSIControllerDriverKit framework.

She'll also show us a demo of these new frameworks in action.

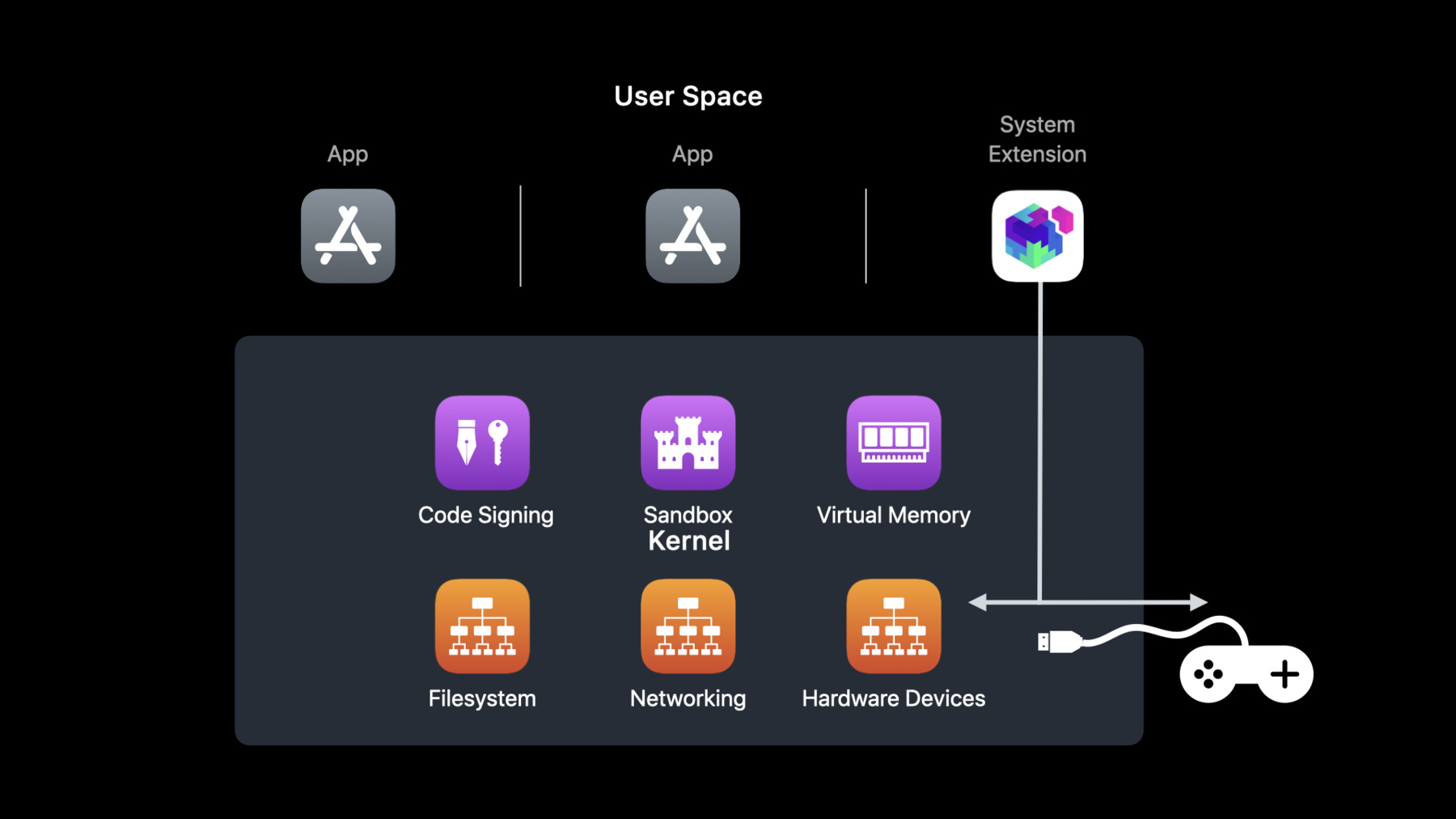

Finally, I'll wrap things up with a summary of everything we went over today.

Last year we introduced DriverKit, a replacement for IOKit device drivers. DriverKit brought a new way to extend the operating system that is more reliable, more secure, and easier to develop. Driver extensions let developers make powerful and innovative apps without the pitfalls of writing code that runs in the kernel. For an in-depth look at DriverKit and how it works, please check out last year's video titled "System Extensions and DriverKit" on the Apple Developer website.

DriverKit System Extensions run in user space, not kernel space. Like other apps, they have to follow the rules of the system security policy. Unlike other apps, System Extensions are granted special privileges to do special jobs: for example, direct control of specific hardware devices, or special APIs to interface directly with the kernel. Because DriverKit drivers run in user space, their access is limited and their resources are separate from the rest of the system's resources. When a driver extension crashes, the kernel and the rest of the system keep running. The development cycle is also much faster, because we can build, test, and debug on one machine.

Kernel extensions, by contrast, bring along security implications: a bug in a kext can compromise the entire kernel. A kext has access to all of the computer's resources, not just a specific piece of hardware or resource. When a kernel extension crashes, the kernel panics and takes down the entire machine. Debugging and development can be slow, because we need multiple machines and have to reboot on every crash.

Another great benefit of driver extensions is that installation is much easier. Your driver extension stays in your app bundle; you should not copy it directly to any system folders. To install it, your app uses the SystemExtensions framework to create an activation request, which asks for the extension to be made available to the system.
A system administrator would need to approve this request. Most apps should create an activationRequest during app launch so the extension is made available right away. However, you may wish to activate your System Extension at different points in your app's lifecycle such as after the user has agreed to a license agreement, or made an in-app purchase if that's required for your system extension.

You can start writing DriverKit drivers right away, without a provisioning profile, before they're ready to go into the App Store. To do this, you'll need to disable System Integrity Protection, sign your dexts to run locally, and make sure to include all the entitlements your driver needs.

Last year, when DriverKit first launched, it supported USB, serial, network interface controllers, and human interface devices. PCI support was introduced later, and now SCSI controller support has been added as well.

Because DriverKit brings so many great improvements over kernel extensions, we recommend migrating to DriverKit immediately for the device families it already supports.

macOS Catalina was the last release to fully support kexts without any compromises. We've begun deprecating kernel extensions that can perform the same function as a System Extension, including those for the device families supported by DriverKit. In macOS Big Sur, kexts that were deprecated in macOS Catalina will not load by default. We will continue to add more types of System Extensions and more device families to DriverKit, and kernel extensions of those kinds will be deprecated in turn. This year we have added support for PCI and SCSI controller drivers. This means that PCI and SCSI controller kexts that can perform the same function as a System Extension are now deprecated, and they will not load by default in a future macOS release.

Now we'll talk about one of the exciting new additions to DriverKit: PCIDriverKit. I'll show you some of the key differences in the driver model between PCI driver extensions and kernel extensions. Then I'll show some code examples that almost every PCIDriverKit driver will need. PCIe is used to expand a machine's capabilities as though the added hardware were built into the system, and it can handle the most demanding high-performance jobs. Some common PCIe devices include serial, Ethernet, and SCSI controllers.

Users can add PCIe devices through Thunderbolt or through PCIe slots, such as those in the latest Mac Pro. As with all driver extensions, PCIDriverKit requires entitlements to grant access to your PCI devices. This ensures that another app or malicious software cannot access your PCI device. The new entitlement uses the same IOPCIFamily driver-matching criteria as the Info.plist. However, you can make the entitlements more generic if you need to support multiple devices. In this example, I have an entitlement that allows access to any device with a given vendor ID; here we'll use 0xABCD. The PCI entitlement takes a list of PCI matching dictionaries, and you can be as specific or as generic as you want by using the IOPCIFamily matching categories.

In this case we're doing a primary match which means we'll be comparing the primary vendor ID and device ID of the device to the entry that we have in our dictionary.

By appending an ampersand followed by a mask, we can make the matching dictionary more generic. Here the mask keeps only the vendor ID bits, which effectively removes the device ID from the match and entitles the driver extension to all devices with this vendor ID.
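To make the value-and-mask idea concrete, here is a small C++ model of how a primary-match entry such as `0x0000ABCD&0x0000FFFF` could be evaluated. It is a sketch, not IOPCIFamily's matching code: it assumes the usual layout in which the 32-bit primary ID holds the device ID in the upper 16 bits and the vendor ID in the lower 16 bits, and the helpers `parseMatchEntry` and `primaryMatches` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>
#include <string>

// Illustrative model of a primary-match entry: "0xVALUE" or "0xVALUE&0xMASK".
// Assumed layout: device ID in the upper 16 bits, vendor ID in the lower 16 bits.
struct PCIMatchEntry {
    uint32_t value;
    uint32_t mask;  // all ones (exact match) when no mask is given
};

// Hypothetical parser for the entry string.
PCIMatchEntry parseMatchEntry(const std::string& text) {
    PCIMatchEntry entry{0, 0xFFFFFFFFu};
    const std::size_t amp = text.find('&');
    // strtoul with base 16 accepts an optional "0x" prefix.
    entry.value = static_cast<uint32_t>(std::strtoul(text.substr(0, amp).c_str(), nullptr, 16));
    if (amp != std::string::npos) {
        entry.mask = static_cast<uint32_t>(std::strtoul(text.substr(amp + 1).c_str(), nullptr, 16));
    }
    return entry;
}

// True when the device's primary ID equals the entry's value under the entry's mask.
bool primaryMatches(const std::string& text, uint16_t vendorID, uint16_t deviceID) {
    const PCIMatchEntry entry = parseMatchEntry(text);
    const uint32_t primaryID = (static_cast<uint32_t>(deviceID) << 16) | vendorID;
    return (primaryID & entry.mask) == (entry.value & entry.mask);
}
```

With the mask in place, any device ID from vendor 0xABCD matches, while an entry without a mask matches only one exact vendor and device pair.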

With PCIDriverKit, you will likely also need to interact with one of the many other DriverKit frameworks. For example, if you wanted to write a custom Ethernet NIC driver, you would also add the networking family entitlement. With this family entitlement, you can use the NetworkingDriverKit framework to interact with the networking stack.

The only class in the PCIDriverKit framework is IOPCIDevice. This class is not intended to be subclassed; you'll use it as the PCI provider to access all of your PCI resources. Here's what your driver extension might look like, using our network interface controller example.

The user-space IOPCIDevice handles the PCI-related communication with the kernel, and your driver uses the IOPCIDevice for all PCI resource access.

Your driver can then use the PCI device resources to talk to the networking family and other user clients. The layering structure of the driver extension is mostly the same as that of the kernel extension, except that almost everything runs in user space.

While opening the device is only encouraged in kernel extensions, PCIDriverKit mandates that you successfully call Open on the device before accessing it. Once Open returns successfully, your driver has exclusive access to the PCI device. If your device has resources that are meant to be shared by multiple clients, you will need to design your driver extension with a single PCI resource manager that distributes the resources among all of its clients. This enforces good design and allows the system to handle driver extension crashes more gracefully. When you're done using the device, such as in the driver's Stop routine, you'll need to call Close on the device. You should not expect the device's state to persist after calling Close. For instance, the PCI stack disables bus mastering and memory space once Close has completed successfully, and another driver may open the device and change its state if it's entitled to do so.

Another difference between PCI kernel extensions and PCIDriverKit is that the PCIDriverKit framework takes care of all the device mappings for you. All memory accesses go through new APIs. This lets developers take advantage of any future optimizations Apple may explore, removes the need for any device memory management, and improves observability and debuggability. With a single point of access, you can use tools such as DTrace to observe all device memory accesses, which can be especially helpful when debugging PCI issues in a multi-threaded environment. The APIs work by passing the memory index of the memory space you wish to access, along with an offset. Next, I'll explain the difference between a memory index and a base address register index.
This is a diagram of the PCI device's base address registers, or BARs. For 32-bit BARs, the memoryIndex and the BAR index are exactly the same. For example, with 32-bit addresses, if you want to access BAR2 you'd use 2 as the memoryIndex, because it's the same index into the device's BAR space. With 64-bit BARs, the memoryIndex and the BAR index can differ. The memoryIndex is the index of each memory entry, and all memory indexes are zero-based.
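The BAR-to-memoryIndex rule can be written as a short C++ function. This is an illustrative sketch that assumes every BAR slot belongs to some memory entry (no unused or I/O BARs); `BARWidth` and `memoryIndexForBAR` are hypothetical names, not PCIDriverKit API.

```cpp
#include <cassert>
#include <vector>

enum class BARWidth { Bits32, Bits64 };

// Given the widths of the device's memory entries in BAR order, return the
// zero-based memoryIndex of the entry that starts at barIndex, or -1 if
// barIndex is the upper half of a 64-bit BAR or lies past the last entry.
int memoryIndexForBAR(const std::vector<BARWidth>& entries, int barIndex) {
    int bar = 0;  // first BAR register used by the current entry
    for (int memoryIndex = 0; memoryIndex < static_cast<int>(entries.size()); ++memoryIndex) {
        if (bar == barIndex) {
            return memoryIndex;
        }
        // A 64-bit BAR occupies two consecutive BAR registers.
        bar += (entries[memoryIndex] == BARWidth::Bits64) ? 2 : 1;
        if (bar > barIndex) {
            return -1;  // barIndex fell inside the previous 64-bit pair
        }
    }
    return -1;
}
```

With two 64-bit entries, `memoryIndexForBAR({BARWidth::Bits64, BARWidth::Bits64}, 2)` returns 1, matching the BAR2/BAR3 case; asking for BAR1 returns -1 because BAR1 is the upper half of the first 64-bit BAR.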

For example, if BAR0 and BAR1 together hold one 64-bit address, your memoryIndex would be 0, because it's the first memory entry. If BAR2 and BAR3 hold another 64-bit address, they'd be at memoryIndex 1, because it's the second memory entry in the device's BAR table.

Accessing your device's configuration space is mostly a one-to-one mapping and still works the same, just under new names. The major difference from kernel extensions is that you first need an open session to read or write any configuration space registers.

Next, I'll go over some examples of common code you'll likely need. First is enabling bus mastering and memory space. Memory space must be enabled to access memory on your device, and bus mastering must be enabled for your device to issue I/O. Previously, there were explicit kernel APIs for each of these operations. Now, to achieve the same result, you read the command register and write it back with the bus master and memory space bits set. Most PCI drivers will look like this example: in their Start routine, they first open a session with the PCI provider, then read the PCI device's command register, set the bus master and memory enable bits, and write the value back to the PCI device. This must be done before issuing any memory reads or writes. All these offsets and definitions can be found in the PCIDriverKit framework headers. Disabling bus mastering follows the same process, except that the driver masks the bits out instead of setting them. In this example, Close is also called in the Stop routine, which means we are done talking to the PCI device and can close our session.

Now we'll go over how to set up an interrupt handler. First, we need to declare an InterruptOccurred method in our iig header file. Then we write the definition of our interrupt handler as an implementation definition.
In this example, when we get an interrupt, the handler clears it by writing to a register in MMIO space at one of the device's memory indexes. Now we need to set things up so that our InterruptOccurred method gets called when an interrupt fires. First, we need to find out which interrupt index to use. To do this, we iterate through all the interrupt indexes associated with the PCI device and check each one's interrupt type. In this example we want to handle the device's MSIs, so we break out of the loop once we've found the interrupt index that supports MSIs.
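The Start-routine steps described above (open a session, read-modify-write the command register) and the interrupt steps (pick an MSI-capable index, clear the interrupt in the handler) can be modelled in plain C++. This is a self-contained sketch rather than PCIDriverKit code: `MockPCIDevice`, its `ConfigRead16`/`ConfigWrite16` methods, the interrupt-type flag, and the write-1-to-clear status register are hypothetical stand-ins. Only the command register offset (0x04) and the Memory Space (bit 1) and Bus Master (bit 2) bits come from the PCI specification; consult the PCIDriverKit headers for the real IOPCIDevice calls and constants.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Command register offset and bits as defined by the PCI specification.
constexpr uint16_t kCommandRegisterOffset = 0x04;
constexpr uint16_t kCommandMemorySpace    = 1u << 1;
constexpr uint16_t kCommandBusMaster      = 1u << 2;

// Hypothetical stand-in for the PCI provider. It only models the rule that
// configuration space access requires an open session.
class MockPCIDevice {
public:
    bool Open() { open_ = true; return true; }
    void Close() { open_ = false; }

    bool ConfigRead16(uint16_t offset, uint16_t* value) const {
        if (!open_ || static_cast<std::size_t>(offset) + 1 >= config_.size()) return false;
        *value = static_cast<uint16_t>(config_[offset] | (config_[offset + 1] << 8));  // little-endian
        return true;
    }

    bool ConfigWrite16(uint16_t offset, uint16_t value) {
        if (!open_ || static_cast<std::size_t>(offset) + 1 >= config_.size()) return false;
        config_[offset]     = static_cast<uint8_t>(value & 0xFF);
        config_[offset + 1] = static_cast<uint8_t>(value >> 8);
        return true;
    }

private:
    bool open_ = false;
    std::array<uint8_t, 256> config_{};  // 256-byte legacy configuration space
};

// Read-modify-write the command register to set or clear bus mastering and memory space.
bool setBusMasterAndMemorySpace(MockPCIDevice& device, bool enable) {
    uint16_t command = 0;
    if (!device.ConfigRead16(kCommandRegisterOffset, &command)) return false;
    const uint16_t bits = kCommandBusMaster | kCommandMemorySpace;
    command = enable ? static_cast<uint16_t>(command | bits)
                     : static_cast<uint16_t>(command & ~bits);
    return device.ConfigWrite16(kCommandRegisterOffset, command);
}

// Hypothetical flag marking an interrupt index as message-signaled (MSI).
constexpr uint64_t kHypotheticalInterruptTypeMessaged = uint64_t{1} << 16;

// Return the first interrupt index whose type is message-signaled, or -1 if none is.
int findMSIInterruptIndex(const std::vector<uint64_t>& interruptTypes) {
    for (std::size_t index = 0; index < interruptTypes.size(); ++index) {
        if (interruptTypes[index] & kHypotheticalInterruptTypeMessaged) {
            return static_cast<int>(index);  // stop at the first MSI-capable index
        }
    }
    return -1;
}

// Hypothetical MMIO interrupt status register with write-1-to-clear semantics.
struct MockInterruptStatus {
    uint32_t pending = 0;
    void Write32(uint32_t value) { pending &= ~value; }
};

// Core of an InterruptOccurred-style handler: acknowledge every pending cause.
uint32_t handleInterrupt(MockInterruptStatus& status) {
    const uint32_t handled = status.pending;
    status.Write32(handled);  // writing the pending bits back clears them
    return handled;
}
```

Calling `setBusMasterAndMemorySpace` before `Open` fails in this model, mirroring the rule that configuration access needs an open session.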

Next, we choose which DispatchQueue the interrupt will be handled on.

For this example we're going to keep everything single threaded and use the default DispatchQueue. If you want to create a new DispatchQueue just for interrupt handling, that's supported as well.

Next, we create the dispatch source by specifying the PCI device, the interrupt index we found earlier, and our default DispatchQueue. Then we create an interrupt action for the newly created source to use to handle interrupts. Once the source is enabled, our interrupt handler is called whenever our device triggers an interrupt.

Lastly, we'll go over how to set up a DMA transfer for your PCI device. First, we create a buffer memory descriptor. We need to set the length of the buffer before writing to it. Here's an example of how you can take the virtual address of our segment and write some data to it.

Next, in order to give the PCI device access to our buffer, we'll need to create an IODMACommand. First we'll set up the DMA specification that describes our hardware's DMA capabilities. Then we'll create the IODMACommand specifying this is for our PCI device. This is to ensure the correct memory mapper is used for all of your transfers.
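As a rough illustration of what a DMA specification guards against, here is a C++ sketch that checks whether a buffer's physical range fits within a device's addressable bits, then splits the 64-bit address into the two 32-bit values many devices expect. `DMACapability`, `fitsDMACapability`, `AddressRegisters`, and `splitAddress` are hypothetical; in DriverKit, these limits are described by the DMA specification you pass when creating the IODMACommand, and the register layout depends on your hardware.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical summary of a device's DMA addressing capability.
struct DMACapability {
    unsigned maxAddressBits;  // e.g. 32 for a device that can only reach the low 4 GB
};

// True if [address, address + length) lies entirely within the device's reach.
bool fitsDMACapability(const DMACapability& cap, uint64_t address, uint64_t length) {
    if (length == 0) return false;
    if (cap.maxAddressBits >= 64) {
        return address + (length - 1) >= address;  // reject only 64-bit wrap-around
    }
    const uint64_t limit = (uint64_t{1} << cap.maxAddressBits) - 1;
    return address <= limit && (length - 1) <= limit - address;
}

// Hypothetical register layout: the device takes a 64-bit address as two 32-bit writes.
struct AddressRegisters {
    uint32_t low;
    uint32_t high;
};

AddressRegisters splitAddress(uint64_t address) {
    return {static_cast<uint32_t>(address & 0xFFFFFFFFu), static_cast<uint32_t>(address >> 32)};
}
```

A 32-bit-only device can take a 4 KB buffer ending exactly at 0xFFFFFFFF, but not an 8 KB buffer starting at the same address.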

Next, we use the DMA command to prepare our buffer memory descriptor so that we get a physical address to give to our PCI device. We then take the physical address from the resulting physicalAddressSegment and write it to our PCI device. Once our transfer is finished, we need to call Complete on our DMA command so that the physical memory can be used by another process or device.

That was a brief introduction to the new PCIDriverKit framework. Now I'd like to hand it over to Madhu, who will go into detail on how to write a SCSI driver using DriverKit.

Hi, I'm Madhu, and I'm going to be talking about the SCSIControllerDriverKit framework. It's a brand-new framework in DriverKit for building driver extensions for SCSI controllers. Let's start with an overview. The SCSIControllerDriverKit framework is available today in macOS Big Sur. It supports all the IOKit-based device driver features that SCSI kexts supported in the past, and it replaces the IOSCSIParallelInterfaceController implementation that has been used until now for kext development. The framework has been built with performance as a top priority: it is highly optimized to minimize interactions between user space and the kernel in the I/O performance path.

It can support a variety of SCSI-based devices, like Fibre Channel controllers, RAID controllers, Serial Attached SCSI controllers, and more. Since these are PCIe-based controllers, we've made sure our framework is fully compatible with the PCIDriverKit framework.

IOUserSCSIParallelInterfaceController is a new class defined in an iig interface file. SCSI dexts are required to subclass it and implement its functions. We have tried to keep the API structure similar to the kernel class, with the prefix "User" added to API names. For example, UserProcessParallelTask is the new API that dexts implement to submit I/O requests to the hardware.

It's quite simple to determine which framework functions to override in the dext: just override all the functions that are marked pure virtual. These are calls made by the framework into the dext to get controller-specific information or to perform specific tasks. For example, the framework calls UserDoesHBAPerformAutoSense to check whether the HBA supports auto-sense, and it calls UserInitializeController to initialize the controller and the dext's data structures. The functions that are not pure virtual are invoked by the dext to perform specific tasks, like creating a SCSI target with a unique ID or setting properties for a specific target. These functions are implemented in the kernel.

As we already know, entitlements must be added before we build and load driver extensions. In addition to the generic DriverKit entitlement and the transport-specific entitlement, we have added a new family entitlement for SCSI controllers. A SCSI dext needs this entitlement to run and to have full access to the framework.

With that, let's create an example SCSI dext. I have divided this into four important phases. In each phase, I will show how to override some important functions, discuss some guidelines, and share some performance tips. Let's begin with start and initialization. The kernel controls the start and initialization phase of the dext by making a whole series of calls.

Besides the calls that initialize the controller, it also makes a number of calls to gather controller-specific information, like the maximum task count supported and the highest supported device ID, and it sets up all the kernel data structures accordingly. Let's see what happens when a SCSI controller device is plugged in. IOKit matching kicks off, and an IOService object of type IOUserSCSIParallelInterfaceController starts up in the kernel. IOKit's IOService implementation then starts creating the resources required to start the dext in user space.

It initializes the IOUserServer IPC layer and creates a process that hosts the dext, with its DriverKit classes initialized. Once the SCSI dext is loaded, IOUserSCSIParallelInterfaceController first calls its Start implementation. It then calls a series of other functions, like UserInitializeController and UserStartController, to finish the start and initialization phase.
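The split between functions the framework calls (pure virtual, implemented by the dext) and the kernel-driven start sequence can be modelled with an ordinary C++ abstract class. This sketch is heavily simplified: the signatures, the `ReportMaximumTaskCount` name, the `runStartSequence` helper, and the call order are hypothetical, not the real IOUserSCSIParallelInterfaceController interface.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical, simplified model of the framework/dext split.
class SCSIControllerModel {
public:
    virtual ~SCSIControllerModel() = default;
    // Pure virtual: the framework calls these, and the dext must implement them.
    virtual bool UserInitializeController() = 0;
    virtual bool UserStartController() = 0;
    virtual bool UserDoesHBAPerformAutoSense() = 0;
    virtual uint32_t ReportMaximumTaskCount() = 0;  // hypothetical name
};

struct ControllerInfo {
    uint32_t maxTasks = 0;
    bool autoSense = false;
};

// Stand-in for the kernel side: initialize, gather controller info, then start.
bool runStartSequence(SCSIControllerModel& dext, ControllerInfo* info) {
    if (!dext.UserInitializeController()) return false;
    info->maxTasks = dext.ReportMaximumTaskCount();  // would size kernel-side task lists
    info->autoSense = dext.UserDoesHBAPerformAutoSense();
    return dext.UserStartController();
}

// Example dext: overrides every pure virtual and records the call order.
class ExampleDext : public SCSIControllerModel {
public:
    std::vector<std::string> calls;
    bool UserInitializeController() override { calls.push_back("init"); return true; }
    bool UserStartController() override { calls.push_back("start"); return true; }
    bool UserDoesHBAPerformAutoSense() override { calls.push_back("autosense"); return true; }
    uint32_t ReportMaximumTaskCount() override { calls.push_back("maxtasks"); return 128; }
};
```

Because `SCSIControllerModel` has pure virtual functions, it cannot be instantiated, and forgetting to override one of them in `ExampleDext` is a compile-time error. That is the property that makes it easy to see what a dext has to implement.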

Let's start implementing. I'm only going to discuss some of the important framework functions that the dext needs to implement, the first being UserInitializeController. First, we open a new session with PCIDriverKit, as Kevin discussed earlier. The DriverKit framework already creates a default DispatchQueue for us; we are going to create and set up two additional DispatchQueues here. We also create an InterruptDispatchSource and register a handler to process interrupts.

This brings us to the queuing model we would like you to follow while building your dext. We suggest having three DispatchQueues in total: the Default Queue, which the DriverKit environment creates for you, plus an Interrupt Queue and an Auxiliary Queue. The Default Queue runs all the calls that originate from the kernel, for example UserInitializeController, UserProcessParallelTask, and so on. It helps to have a separate queue to service interrupts: since DriverKit dispatch queues are serial, interrupts and I/O submissions won't compete with each other to run.
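The suggested queue assignment can be captured as a small routing table. This C++ sketch is illustrative only: the `DextQueue` and `WorkKind` enums and `queueFor` are hypothetical, and a real dext would dispatch work onto IODispatchQueue objects rather than return an enum value.

```cpp
#include <cassert>

// The three serial queues suggested for a SCSI dext.
enum class DextQueue { Default, Interrupt, Auxiliary };

// Hypothetical categories of work a SCSI dext performs.
enum class WorkKind { KernelCall, InterruptCompletion, IOTimeout, TargetCreation };

// Route each kind of work to the queue suggested in the talk.
DextQueue queueFor(WorkKind kind) {
    switch (kind) {
        case WorkKind::KernelCall:          return DextQueue::Default;    // e.g. UserProcessParallelTask
        case WorkKind::InterruptCompletion: return DextQueue::Interrupt;  // keeps completions off the submission queue
        case WorkKind::IOTimeout:           return DextQueue::Interrupt;  // timeout handlers can share the interrupt queue
        case WorkKind::TargetCreation:      return DextQueue::Auxiliary;  // kernel calls back on Default during creation
    }
    return DextQueue::Default;  // unreachable for valid enum values
}
```

Target creation is routed to the Auxiliary Queue because the kernel's target-initialization calls into the dext run on the Default Queue; presumably, issuing the creation request from that same serial queue would leave those callbacks waiting behind it.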

Having a dedicated Interrupt Queue also gives a huge performance boost, and that queue can also service the dext's IOTimeoutHandlers. A separate Auxiliary Queue needs to be set up to create SCSI targets. As part of creating a target, the kernel makes a number of calls into the dext to help initialize it.

Since those calls run on the Default Queue, the dext needs to create SCSI targets from the separate Auxiliary Queue. The kernel maintains a list of IODMACommands, SCSIParallelTask objects in this case, sized to the total number of outstanding commands the controller can support at a time.

Every time it creates a new SCSIParallelTask object, it calls the dext's UserMapHBAData implementation. The dext can use this opportunity to create its controller-specific taskData structure and maintain a list of them. Here are some implementation details of my example SCSI dext.

I have created an IOBufferMemoryDescriptor object for my controller-specific taskData structure, and I have mapped that buffer memory descriptor into my dext's address space. It is important to do any pre-processing like this here, before we start serving I/Os; doing it in the I/O path can hurt performance. I add this task data to my taskData list. I also assign this task a unique taskID so that the kernel can correlate it with its corresponding SCSIParallelTask object. Next, in my UserStartController implementation, I enable interrupts on the hardware and then enable the interrupt dispatch source. Returning success from UserStartController tells the kernel that the dext is up and ready to serve I/Os.
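The pre-allocation pattern described here, with one task-data entry per kernel task object and a unique taskID for each, might look like this in plain C++. `TaskData` and `TaskDataPool` are hypothetical, and the sketch uses the vector index as the taskID so that a lookup in the I/O path is just a bounds check plus an array access.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical controller-specific data for one outstanding task.
struct TaskData {
    uint64_t taskID = 0;
    uint64_t bufferAddress = 0;   // filled in per I/O
    uint32_t transferLength = 0;  // filled in per I/O
};

// Built once, from UserMapHBAData-style callbacks, before any I/O is served.
class TaskDataPool {
public:
    // Allocate one entry per kernel task object and return its unique taskID.
    uint64_t addTask() {
        TaskData data;
        data.taskID = tasks_.size();  // the index doubles as the taskID
        tasks_.push_back(data);
        return data.taskID;
    }

    // I/O-path lookup: no allocation, just a bounds check and an index.
    // Pointers stay valid because no tasks are added once I/O has started.
    TaskData* lookup(uint64_t taskID) {
        return taskID < tasks_.size() ? &tasks_[taskID] : nullptr;
    }

    std::size_t size() const { return tasks_.size(); }

private:
    std::vector<TaskData> tasks_;
};
```

All `addTask` calls are assumed to happen during initialization, as in the talk, so the pool never reallocates while I/O is in flight.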

That brings us to the most interesting part of any storage driver, the I/O path.

Let's first refresh our memory a little and review how this works in the kernel today.

Previously, the kext would override ProcessParallelTask and have access to the SCSIParallelTask object. SCSIParallelTask is a subclass of IODMACommand. The kext was responsible for preparing the IODMACommand and generating physical segments for DMA, and it would call CompleteParallelTask once it received the interrupt completion for the I/O. We have changed a lot of this behavior for dexts. The kernel now calls the dext's UserProcessParallelTask implementation to submit an I/O.

As part of this call, it'll send a copy of SCSIUserParallelTask object to the dext.

This object will contain all the information the dext will need to perform this I/O, and the dext will not have to make any more calls to the kernel.

Like I mentioned in the beginning, this framework has been built keeping I/O performance as a top priority. Note that the dext does not have access to SCSIParallelTask object. The kernel will do all of the heavy lifting of preparing the IODMACommand and generating physical segments for the dext.

Letting the kernel handle all of the operations required to prepare an I/O for DMA has obvious performance benefits. Preparing an IODMACommand in user space would require additional IPC calls to the kernel, which we want to avoid at all costs in the performance path.

After the I/O is submitted, the dext's interrupt handler is invoked whenever the hardware sends an interrupt completion. The dext then calls ParallelTaskCompletion, an OSAction callback, to complete the I/O.

Here are the two main functions we need to care about. The dext implements UserProcessParallelTask for I/O submission and calls ParallelTaskCompletion to indicate I/O completion. We already discussed SCSIUserParallelTask a little. As we can see, it contains a lot of information about an I/O, like the CDB, the data transfer count, the data transfer direction, and so on.

An important field in this structure is fBufferIOVMAddr. As I mentioned before, the kernel generates the DMA physical segment for us, and fBufferIOVMAddr is the start physical address of this segment. The kernel is guaranteed to generate one long contiguous segment that fits all of the buffer data.
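If your controller limits the size of each scatter-gather entry, the single contiguous segment starting at fBufferIOVMAddr can be chopped up as in this C++ sketch. `SGEntry`, `buildScatterGatherList`, and the per-entry size limit are hypothetical; real hardware may impose other constraints, such as alignment or boundary-crossing rules, that this sketch ignores.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical scatter-gather entry format.
struct SGEntry {
    uint64_t address;
    uint32_t length;
};

// Split one contiguous DMA segment (start address + length) into entries
// no larger than maxSegmentBytes.
std::vector<SGEntry> buildScatterGatherList(uint64_t start, uint64_t length, uint32_t maxSegmentBytes) {
    std::vector<SGEntry> list;
    if (maxSegmentBytes == 0) return list;  // avoid an infinite loop on a bad limit
    while (length > 0) {
        const uint32_t chunk = static_cast<uint32_t>(length < maxSegmentBytes ? length : maxSegmentBytes);
        list.push_back({start, chunk});
        start += chunk;
        length -= chunk;
    }
    return list;
}
```

For a 10,000-byte transfer starting at 0x1000 with a 4,096-byte limit, this yields entries at 0x1000, 0x2000, and 0x3000 with lengths 4,096, 4,096, and 1,808.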

The dext sends a SCSIUserParallelResponse object as part of the ParallelTaskCompletion callback.

It needs to contain all the relevant information for the specific I/O completion, like the completion status and the number of bytes transferred, plus sense data, if any.

Let's look at our example implementation. I first fetch the specific taskData for the taskID from my list. I set all the metadata for this I/O in my taskData. The kernel is going to generate one long physical segment with fBufferIOVMAddr as the start address. Feel free to prepare your own ScatterGatherList if your hardware specification requires you to chop this up into multiple segments. I retain and stash away the completion callback object for later. I post a request to the hardware, and send an appropriate response back to the kernel. Once I receive an interrupt completion, I process the interrupt response and fill out the SCSIUserParallelResponse structure.
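Filling out the response from a hardware completion might look like this sketch. `HWCompletion`, `ResponseModel`, and `buildResponse` are hypothetical stand-ins rather than the real SCSIUserParallelResponse layout. The status values 0x00 (GOOD) and 0x02 (CHECK CONDITION) are standard SCSI status codes; reporting sense data only alongside CHECK CONDITION is a design choice of this sketch.

```cpp
#include <cassert>
#include <cstdint>

// Standard SCSI status codes.
constexpr uint8_t kSCSIStatusGood           = 0x00;
constexpr uint8_t kSCSIStatusCheckCondition = 0x02;

// Hypothetical completion record reported by the controller.
struct HWCompletion {
    uint8_t  scsiStatus;
    uint32_t residualBytes;  // bytes requested but not transferred
    uint8_t  senseLength;    // valid sense bytes captured by the HBA
};

// Hypothetical response fields, loosely modelled on what the talk lists.
struct ResponseModel {
    uint8_t  status;
    uint64_t bytesTransferred;
    uint8_t  senseLength;
};

ResponseModel buildResponse(uint64_t requestedBytes, const HWCompletion& hw) {
    ResponseModel response{};
    response.status = hw.scsiStatus;
    // Clamp so a bogus residual can never underflow the transfer count.
    response.bytesTransferred = hw.residualBytes <= requestedBytes ? requestedBytes - hw.residualBytes : 0;
    // Only report sense data when the device signalled CHECK CONDITION.
    response.senseLength = (hw.scsiStatus == kSCSIStatusCheckCondition) ? hw.senseLength : 0;
    return response;
}
```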

After filling out the response, I invoke the completion callback. We now have I/O submission and completion logic implemented in our example dext. Let's move on to the next phase of driver development: power management. Supporting power state transitions in DriverKit is as simple as overriding the SetPowerState function and implementing your hardware-specific behavior for every supported power state. The DriverKit framework currently supports three power states: an off state, an on state, and a low-power state. For PCIe-based devices, we mainly need to care about the off and on states. The off state is used when the device is entering sleep, such as during system sleep or a PCI pause operation.

The on state is used when the device is fully powered on, such as during system wake. Delayed acknowledgement of a power state transition is also possible in DriverKit. Let's look at an example. Here I have overridden SetPowerState, and to keep it brief I have only shown the PowerStateOn transition. For my hardware, I issue a hard reset to change the power state to on, and then I return from the call to the kernel. Since I issued a hard reset, my driver starts a complete SCSITargetRescan on the hardware. I make sure all my targets are up and then acknowledge the power state transition by calling SetPowerState with SUPERDISPATCH. I now have power management support implemented in my example dext.

Let's finish by discussing termination. DriverKit termination is quite straightforward: just override the Stop method and tear everything down there. We have made it simpler for you.

The dext is not responsible for completing any outstanding I/O requests.

The kernel handles all of that for you. Let's look at an example. In my Stop implementation I first close my outstanding PCI session. I then cancel all of my dispatch queues and sources. I release them in a finalize code block which gets called by the framework once the Cancel call completes. That's it! We now have all the major features of our example SCSI dext implemented.
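The teardown ordering described here (close the PCI session, cancel, and release objects only from the finalize block once cancellation completes) can be modelled in C++ with a deferred callback. `MockCancellable`, `ExampleStopState`, and `stopDext` are hypothetical. Only the interrupt source is shown; dispatch queues would follow the same cancel-then-release pattern.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical dispatch object whose cancellation completes later; the
// finalize block runs only when the framework reports completion.
class MockCancellable {
public:
    MockCancellable(std::vector<std::string>& log, std::string name)
        : log_(log), name_(std::move(name)) {}

    void Cancel(std::function<void()> finalize) {
        log_.push_back("cancel " + name_);
        pending_ = std::move(finalize);
    }

    // Simulates the framework signalling that cancellation has finished.
    void CompleteCancellation() {
        if (pending_) {
            std::function<void()> finalize = std::move(pending_);
            pending_ = nullptr;
            finalize();
        }
    }

private:
    std::vector<std::string>& log_;
    std::string name_;
    std::function<void()> pending_;
};

struct ExampleStopState {
    std::vector<std::string> log;
    bool pciSessionOpen = true;
    bool interruptSourceReleased = false;
};

// Stop-style teardown: close the PCI session, then cancel, and release only
// inside the finalize block.
void stopDext(ExampleStopState& state, MockCancellable& interruptSource) {
    state.pciSessionOpen = false;
    state.log.push_back("close pci session");
    interruptSource.Cancel([&state] {
        state.interruptSourceReleased = true;
        state.log.push_back("release interrupt source");
    });
}
```

Until `CompleteCancellation` runs, the source is cancelled but not yet released, which is why the release belongs in the finalize block rather than directly after `Cancel`.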

Now, I know what you must all be thinking. Here we have a system where every single I/O coming down from the file system in the kernel gets sent to the dext running in user space via an IPC call, and the interrupt handler is invoked via an IPC call from the kernel.

And the dext I/O completion is sent from user space back to the kernel via an IPC call. This sounds like a lot of overhead. Will this affect my dext's performance? Will my dext be able to achieve the same throughput as my kext? Can I still run pro workloads on these devices like I used to? These are all great questions. We had the same concerns when we started developing this framework. Like I have mentioned a couple of times already, we designed and implemented this framework keeping performance as a top priority.

I'm happy to report that SCSIControllerDriverKit can handle the same workloads as kexts. Let's run a realistic workload on our example SCSI dext and see how it performs. My setup includes a 4-gigabit-per-second Fibre Channel host bus adapter connected to a RAID disk array, and I have connected it to a MacBook Pro via Thunderbolt 3.

To build and install my example SCSI dext I made sure I added all the required entitlements and packaged it inside a System Extension app. I also made sure my System Extension app created an activation request during app launch so that my dext is available right away. I then built my System Extension app and code signed it appropriately. I have already installed my System Extension app on this system.

Let's peek into the I/O registry and see if our dext has matched. There it is: our example SCSI dext has started successfully and is running. Our Fibre Channel controller in this case is actually a multifunction PCI device, so we see two separate instances of ExampleSCSIDext running. Let's open Disk Utility to see if our dext is providing any disks. Looks like we have one external disk here with a Fibre Channel interface. The disk is formatted to have one APFS volume, the volume is already mounted as we can see here on the Desktop. We thought it would be a great idea to demonstrate performance by running an actual pro workload. I have a Final Cut Pro library on this external SCSI disk, let's open that.

Video processing and playback can be CPU- and disk-intensive. If our dext's CPU utilization increases for any reason while serving I/Os, it will show: our dext process can steal CPU time from the actual video playback, which would result in frame drops. Our goal here is to make sure our dext can stream this video without any frame drops. To make sure we'll notice if that happens, I'll enable this option so that we get warned about frame drops caused by disk performance. Let's start playing the video.

I'm also going to open Activity Monitor on the side so we can see disk usage.

I have already selected the Final Cut Pro process here. We have this beautiful video playing in Apple ProRes format. This video has a maximum disk throughput of around 350 megabytes per second, which is quite close to the maximum throughput our 4-gigabit-per-second Fibre Channel controller can support. Our dext is doing great so far.

This entire video was streamed from our example SCSI dext running in user space. How cool is that? And we were not alerted to any frame drops, which means our dext handled this pro workload like a pro. There we have it: all the advantages of DriverKit, like security and ease of development and debugging, without compromising on performance. That was a brief overview of the SCSIControllerDriverKit framework, a brand-new framework for building driver extensions for SCSI controllers. Back to you, Kevin. Thanks, Madhu.

Today we briefly went over DriverKit System Extensions and saw how you can adopt the new PCIDriverKit and SCSIControllerDriverKit frameworks for your devices. We went over an in-depth example of writing a SCSI controller driver over PCI and got to see a real-world demo of how you can use these frameworks. With the new SCSI controller and PCIDriverKit frameworks, SCSI controller and PCI kernel drivers are now deprecated when a System Extension can perform the same function. For an in-depth look at DriverKit, please check out last year's System Extensions and DriverKit session on the Developer website. I encourage developers to start adopting DriverKit immediately for the device families that are available.

Please go and download the latest DriverKit SDK and let us know any feedback you have through Feedback Assistant. We'd love to hear from you and are excited to see what you'll create.
