
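Harness Apple GPUs with Metal

Combine the power of Apple GPUs with Metal, the modern foundation for GPU-accelerated graphics on Apple platforms, to create visually stunning and highly capable apps and games. Get introduced to the architecture and features of Apple GPUs, and learn how Metal leverages their Tile-Based Deferred Rendering (TBDR) architecture to significantly improve the performance of your apps and games. This session explains what makes Apple GPUs efficient and shows how to apply TBDR to an array of modern rendering techniques.

Before this session, we recommend having a basic understanding of Metal and graphics rendering. Start by watching "Modern Rendering with Metal".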


Hello and welcome to WWDC.

Hello. I am Guillem Viñals from the Metal Ecosystem team at Apple. Today we will talk about our unique GPUs. This is a talk about hardware so there is not a single line of code, but plenty of pipelines instead. And, as we all know, pipelines are cool.

This talk has two parts. First, we will talk about Apple TBDR GPUs, which will cover the basics of the rendering pipeline. Then we will talk about modern Apple GPUs, which will cover some of the recent enhancements to the pipeline.

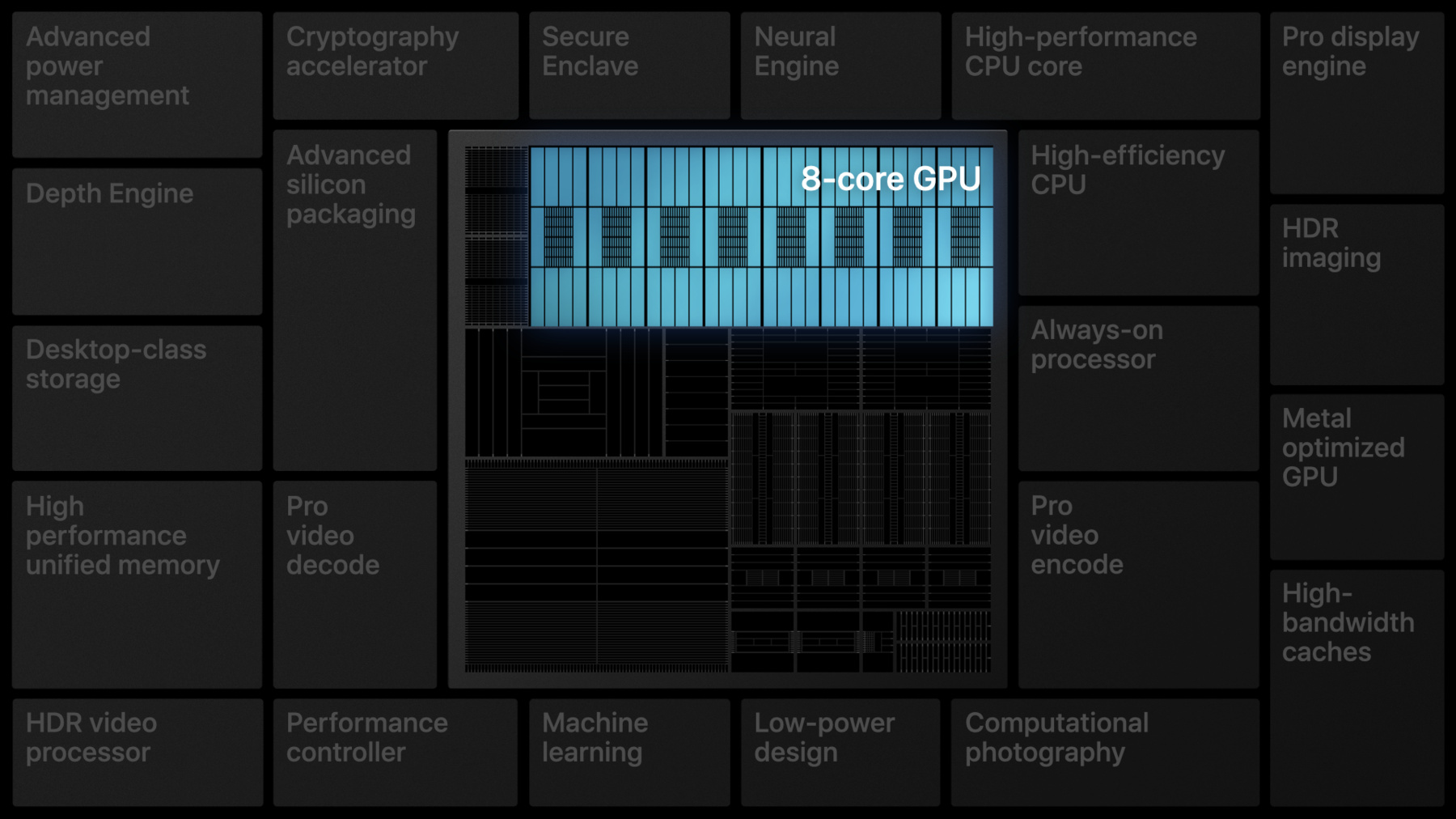

So why are GPUs so important? Well, they are at the heart of our A Series processors. Our Apple processors are powering over one billion devices worldwide. So that's a lot of Apple GPUs.

Apple processors are very power efficient, and they have this unified memory architecture, which means that the CPU and the GPU share System Memory. Also, the GPU has a dedicated pool of on-chip memory, which we call Tile Memory.

Notice, though, that the GPU does not have video memory. So bandwidth could be a problem if the content has not been tuned.

In order to be fast and efficient without video memory, our GPUs have a unique architecture known as TBDR, or Tile Based Deferred Renderer. So let's talk about that. Today, we will review the rendering pipeline, as well as some of the features that make the GPUs so efficient. We will talk about Vertex and Fragment stage overlap, Hidden Surface Removal, Programmable Blending, Memoryless Render Targets, and also our very efficient MSAA implementation. So let's have a look at the graphics pipeline to understand this a bit better.

Our GPUs are TBDRs, or Tile Based Deferred Renderers. TBDRs have two main phases. First, tiling, where all of the geometry will be processed. Second, rendering, where all of the pixels will be processed. So let's cover this pipeline in more detail, starting with the Tiling Phase at the very beginning.

In the Tiling Phase, for the entire render pass, the GPU will split the viewport into a list of tiles, shade all of the vertices and, finally, bin the transformed primitives into a list of tiles.

Remember, our GPUs don't have a large pool of dedicated memory. So where does all this post-transform data go? It goes into the Tiled Vertex Buffer. The Tiled Vertex Buffer stores the Tiling phase output, which includes the post-transform vertex data, as well as other internal data. This data structure is mostly opaque to you.

But it may cause a Partial Render if full. A Partial Render is when the GPU splits the render pass in order to flush the contents of that buffer.

So the takeaway is for you to know that the Tiled Vertex Buffer exists, but don't worry too much over it.
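As a rough mental model of the binning step in the Tiling Phase, here is a minimal Python sketch. It is only an illustration: the 32-pixel tile size, the conservative bounding-box test, and the names `TILE_SIZE` and `bin_triangles` are assumptions made for this example and do not describe how the hardware lays out the Tiled Vertex Buffer.

```python
from collections import defaultdict

TILE_SIZE = 32  # hypothetical tile edge in pixels (an assumption, not a hardware figure)

def bin_triangles(triangles, width, height, tile_size=TILE_SIZE):
    """Map each tile (tx, ty) to the indices of triangles whose bounding box touches it."""
    tiles_x = (width + tile_size - 1) // tile_size
    tiles_y = (height + tile_size - 1) // tile_size
    bins = defaultdict(list)
    for index, tri in enumerate(triangles):
        xs = [x for x, _ in tri]
        ys = [y for _, y in tri]
        # Clamp the screen-space bounding box to the viewport, in tile coordinates.
        min_tx = max(0, int(min(xs)) // tile_size)
        max_tx = min(tiles_x - 1, int(max(xs)) // tile_size)
        min_ty = max(0, int(min(ys)) // tile_size)
        max_ty = min(tiles_y - 1, int(max(ys)) // tile_size)
        for ty in range(min_ty, max_ty + 1):
            for tx in range(min_tx, max_tx + 1):
                bins[(tx, ty)].append(index)
    return dict(bins)

# Two already-transformed triangles on a 64x64 viewport (2x2 tiles).
triangles = [
    [(2, 2), (20, 5), (10, 25)],     # fits inside tile (0, 0)
    [(10, 10), (50, 12), (30, 40)],  # bounding box spans all four tiles
]
bins = bin_triangles(triangles, 64, 64)
print(bins[(0, 0)])  # [0, 1]
print(bins[(1, 1)])  # [1]
```

Each tile ends up with its own primitive list, which is what lets the rendering phase process tiles independently.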

Okay. So far, so good. Let's move on to the second stage of our GPU pipelines, rendering. This is where most of the heavy lifting happens and it's also where the TBDR architecture shines the most.

In the previous stage, the GPU split the viewport into a list of tiles.

Now we are going to shade all of these tiles separately.

In the rendering phase, the GPU will, for each tile in the render pass, execute the load action, rasterize all of the primitives and compute their visibility, shade all of the visible pixels and, finally, execute the store action.

Most of this is also opaque to the application, it just happens to be very efficient.

What the application can control very explicitly are the load and store actions. So let's review those to make sure you got them right.

Load and store actions are executed for each tile: at the beginning of the pass, where we tell the GPU how to initialize Tile Memory, and at the end of the pass, where we tell the GPU what to do with the final render.

The recommendation is to only load the data that you need. If you don't need any data, don't load anything and clear instead. This will save us all the memory transfers required to load all the color attachments, as well as depth and stencil buffers.
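To put the recommendation in numbers, the following Python sketch estimates how many bytes a pass moves between system memory and Tile Memory. It is a deliberately simple model that ignores compression and caching. The `Attachment` class, the action strings, and the pixel sizes are assumptions made for this example; in Metal the corresponding choices are made with `MTLLoadAction` and `MTLStoreAction` values on each render pass attachment. The same accounting applies to store actions.

```python
from dataclasses import dataclass

@dataclass
class Attachment:
    name: str
    bytes_per_pixel: int
    load_action: str   # "load", "clear", or "dont_care"
    store_action: str  # "store" or "dont_care"

def pass_traffic_bytes(attachments, width, height):
    """Estimate (loaded, stored) system-memory bytes for one render pass."""
    pixels = width * height
    loaded = sum(a.bytes_per_pixel for a in attachments if a.load_action == "load") * pixels
    stored = sum(a.bytes_per_pixel for a in attachments if a.store_action == "store") * pixels
    return loaded, stored

# Hypothetical 1080p pass with color, depth and stencil attachments.
wasteful = [
    Attachment("color", 4, "load", "store"),
    Attachment("depth", 4, "load", "store"),
    Attachment("stencil", 1, "load", "store"),
]
tuned = [
    Attachment("color", 4, "clear", "store"),
    Attachment("depth", 4, "clear", "dont_care"),
    Attachment("stencil", 1, "clear", "dont_care"),
]
print(pass_traffic_bytes(wasteful, 1920, 1080))  # (18662400, 18662400)
print(pass_traffic_bytes(tuned, 1920, 1080))     # (0, 8294400)
```

Clearing instead of loading removes the load traffic entirely in this model, because a clear only initializes Tile Memory.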

The same goes for the store action. Make sure to only store the data that you need, like the main color attachment. This is very important to improve the efficiency of your render. So let's look at the rendering phase in a bit more detail, starting with Hidden Surface Removal, or HSR, which happens before we render anything.

This is possible thanks to the on-chip depth buffer.

This is also a key aspect of TBDR.

HSR allows the GPU to minimize overdraw by keeping track of the frontmost visible layer for each pixel.

HSR is both pixel perfect and submission order independent.

So pixels will be processed into two stages, Hidden Surface Removal and Fragment Processing.

For example, even if you draw two triangles back to front, HSR will ensure that there is no overdraw.

So let's assume we want to render three triangles, submitted back to front. First, the blue triangle at the back. Second, orange triangle in the middle. And, finally, a purple translucent triangle in the front.

HSR will keep track of visibility information. Each pixel will keep the depth and primitive ID for the frontmost primitive. Zero, in this case, means that the background color should be used. We have not yet rasterized anything.

We will rasterize the first primitive, the blue triangle.

This primitive will populate the depth and primitive ID for all of the pixels covered.

Notice that so far we haven't run any fragment shader yet.

Then we will rasterize the second primitive and do exactly the same.

Since the orange triangle is in front, we update the depth and primitive ID of all of the pixels it covers.

Again, we rasterize the primitive without running any fragment shader.

The GPU will first calculate the visibility for each pixel before shading anything.

Now we will rasterize the translucent triangle.

HSR only keeps the frontmost visible primitive ID for each pixel. But now we have a primitive that needs to be blended. At this point, the HSR block will need to flush the pixels covered by the translucent primitive.

In this context, flushing means running the fragment shader for all of the pixels covered.

The GPU cannot defer fragment shading any longer.

Once the pixels have been flushed, the GPU can now process the blended primitive correctly.

This was the last primitive, so the remaining pixels in the Visibility Buffer will also need to be flushed.

At the end of the pass, all of the pixels are shaded.

Notice, though, that some pixels have only been shaded once. In spite of potentially having multiple primitives overlapping, these pixels have no overdraw. In this context, overdraw is the number of fragment shader invocations per pixel, and we want to keep it low.

On a scene with non-opaque geometry, overdraw will depend on the HSR efficiency. So you will want to maximize that. You will want to draw the meshes sorted by visibility state. First, opaque. Second, alpha test, discard or depth feedback. And third, and finally, translucent meshes. You should avoid interleaving opaque and non-opaque meshes and also avoid interleaving opaque meshes with different color attachment write masks. Maximizing the efficiency of HSR will help us reduce overdraw.
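The submission order described above can be sketched as a sort key. This is a minimal Python illustration with hypothetical draw names. The three visibility classes follow the order given in the talk, and "alpha_test" stands in for the whole alpha test, discard, or depth feedback group.

```python
# Lower rank is submitted first.
VISIBILITY_RANK = {"opaque": 0, "alpha_test": 1, "translucent": 2}

def sort_for_hsr(draws):
    """Stable sort of (name, visibility_state) pairs by visibility class."""
    return sorted(draws, key=lambda draw: VISIBILITY_RANK[draw[1]])

draws = [
    ("window", "translucent"),
    ("wall", "opaque"),
    ("fence", "alpha_test"),
    ("floor", "opaque"),
    ("smoke", "translucent"),
]
ordered = sort_for_hsr(draws)
print([name for name, _ in ordered])
# ['wall', 'floor', 'fence', 'window', 'smoke']
```

Because Python's `sorted` is stable, draws keep whatever relative order the application already chose within each class.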

So let's review these with an example. This time we want to draw the same triangles as before, but with a different order. The translucent triangle is now in between the other two.

If we just submit back to front, we will mix different visibility states. In this case, interleaving opaque and non-opaque primitives is inefficient. HSR will need to flush all of the pixels covered by the translucent primitive. Many of these pixels shaded will soon be occluded by the next primitive and then shaded again.

To maximize efficiency, render all of the opaque geometry first. We don't actually need to sort opaque meshes. As long as we submit them before the non-opaque meshes, HSR will be very efficient.
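The effect of submission order on overdraw can be reproduced with a toy model of HSR. The Python sketch below works on a one-dimensional row of eight pixels and counts fragment shader invocations. It assumes a simplified HSR in which opaque primitives only update the visibility data, translucent primitives flush whatever is pending underneath them, and translucent primitives do not write depth. All names and pixel ranges are made up for the example, and it is not a description of the actual hardware.

```python
import math
from dataclasses import dataclass

@dataclass
class Primitive:
    name: str
    depth: float   # smaller means closer to the camera
    pixels: range  # pixels covered on a one-dimensional "tile"
    opaque: bool

def fragment_invocations(primitives, num_pixels):
    """Return the number of fragment shader runs per pixel for a submission order."""
    depth = [math.inf] * num_pixels
    pending = [None] * num_pixels   # frontmost opaque primitive not shaded yet
    shaded = [0] * num_pixels
    for prim in primitives:
        for x in prim.pixels:
            if prim.depth >= depth[x]:
                continue            # hidden behind what is already there
            if prim.opaque:
                depth[x] = prim.depth
                pending[x] = prim.name  # replaces the old entry without shading it
            else:
                if pending[x] is not None:
                    shaded[x] += 1      # flush: shade the pending opaque pixel
                    pending[x] = None
                shaded[x] += 1          # shade the blended fragment itself
    for x in range(num_pixels):
        if pending[x] is not None:
            shaded[x] += 1              # final flush at the end of the pass
    return shaded

blue = Primitive("blue", 3.0, range(0, 6), opaque=True)       # back
purple = Primitive("purple", 2.0, range(1, 7), opaque=False)  # middle, translucent
orange = Primitive("orange", 1.0, range(2, 8), opaque=True)   # front

back_to_front = fragment_invocations([blue, purple, orange], 8)
opaque_first = fragment_invocations([blue, orange, purple], 8)
print(back_to_front, sum(back_to_front))  # [1, 2, 3, 3, 3, 3, 2, 1] 18
print(opaque_first, sum(opaque_first))    # [1, 2, 1, 1, 1, 1, 1, 1] 9
```

In this toy scene, submitting the opaque triangles first halves the number of shader invocations, because the translucent fragments hidden behind the orange triangle are rejected before they are ever shaded.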

After HSR, we move on to the actual Fragment Processing stage.

Notice, though, that if we discard or update the depth, we will need to go back to HSR.

This stage is very efficient thanks to the on-chip frame buffer.

Alpha Blending will always happen on Tile Memory.

Also, notice that there is no dedicated blending unit.

The only time we read or write any render target is when the load and store actions get executed. In this example, in spite of blending, the final render target gets written only once at the end of the pass.

Sometimes, conventional Alpha Blending may not be usable though.

This is the case for some full screen effects such as global fog or deferred lighting, which require some custom blending.

These algorithms are traditionally multi-pass techniques. Many of these techniques will store all of the attachments just to sample from them in a second pass.

TBDR GPUs expose a feature called Programmable Blending. Programmable Blending allows fragment shaders to access pixel data directly from Tile Memory.

This allows you to merge multiple render passes into one and drastically reduce memory bandwidth usage. In this example, we don't need to load or store any intermediate render target.

But there is still some waste in here.

There are a couple of render targets which are not being loaded or stored. They are just being used within the Tile Memory. And those are potentially big textures taking a large chunk of your memory footprint.

TBDR GPUs have a solution for that, Memoryless Render Targets. We can explicitly define a texture as having memoryless storage mode.

This gets rid of all of these unnecessary allocations, saving us precious memory footprint.
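A quick back-of-the-envelope calculation shows how much footprint is at stake. The Python sketch below assumes a hypothetical 1080p pass with one final color target and three intermediate targets at 4 bytes per pixel each. The layout and names are invented for the example. In Metal, the memoryless choice corresponds to `MTLStorageMode.memoryless` on the texture descriptor.

```python
def footprint_bytes(targets, width, height):
    """Sum system-memory bytes for (name, bytes_per_pixel, memoryless) targets."""
    return sum(bpp * width * height for _, bpp, memoryless in targets if not memoryless)

# Every target backed by system memory.
all_allocated = [
    ("final_color", 4, False),
    ("albedo", 4, False),
    ("normal", 4, False),
    ("depth", 4, False),
]
# Intermediate targets live only in Tile Memory.
with_memoryless = [
    ("final_color", 4, False),
    ("albedo", 4, True),
    ("normal", 4, True),
    ("depth", 4, True),
]
before = footprint_bytes(all_allocated, 1920, 1080)
after = footprint_bytes(with_memoryless, 1920, 1080)
print(before, after, before - after)  # 33177600 8294400 24883200
```

Under these assumptions, three quarters of the render target footprint disappears, since only the final color target still needs backing memory.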

There's another technique which fits this design very well. But let's talk about the problem first. Aliasing. Aliasing, or Jaggies, are the stair-shaped pattern that we may see sometimes in games.

Aliasing is a common artifact of rasterization. The GPU will sample the pixel at the center and only shade the primitive if it intersects with a sample point.

Multisample Antialiasing is a very common technique that consists of rasterizing multiple samples per pixel.

This allows rasterization to occur at higher resolution.

The pixel shader is run once per triangle per pixel and blended with the rest of the samples. This will smooth out the edges of a primitive.
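The idea can be sketched in a few lines of Python. This example assumes four samples per pixel and a plain box-filter resolve that averages the samples. The function names and colors are made up for illustration, and real resolve filters may differ.

```python
def resolve_pixel(samples):
    """Box-filter resolve: average the (r, g, b) colors of one pixel's samples."""
    count = len(samples)
    return tuple(sum(channel) / count for channel in zip(*samples))

def shade_pixel(coverage, triangle_color, background):
    """Use one shaded color for every covered sample, then resolve the pixel."""
    samples = [triangle_color if covered else background for covered in coverage]
    return resolve_pixel(samples)

RED = (1.0, 0.0, 0.0)
BLACK = (0.0, 0.0, 0.0)

interior = shade_pixel([True, True, True, True], RED, BLACK)  # fully covered pixel
edge = shade_pixel([True, True, True, False], RED, BLACK)     # triangle edge crosses pixel
print(interior)  # (1.0, 0.0, 0.0)
print(edge)      # (0.75, 0.0, 0.0)
```

The triangle color is computed once per pixel, and only the coverage differs per sample, which is what softens the edge pixel to 75% red here.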

Apple GPUs have a very efficient MSAA implementation.

Apple GPUs will track the primitive edges, so pixels without edges will blend per pixel, and pixels that contain edges will blend each sample.

But that is not all.

The multiple samples are stored in on-chip Tile Memory and resolved once the tile is flushed.

This makes the store action very efficient, and you can also use memoryless storage mode to save some footprint. So let's review this.

Trivially, this is how it would look. We have a large multisample texture, as well as a Resolve Texture. At the end of the pass, we store the multiple samples and resolve.

By using an efficient resolve action, we can save all of the bandwidth required to store the large multisample texture. That is because MSAA Resolve always happens from Tile Memory. So there is no point in storing the samples, which makes the multisample texture fully transient. We never load it or store it. This means that we may as well make it memoryless and save all that footprint too.

Awesome! So now let's take a step back.

Thanks to the efficient load and store actions, Tile Memory and memoryless render targets, we can write some awesome renders which are impressively efficient. Another characteristic of Apple TBDR GPUs is that the tiling and rendering are separate stages for each render pass.

This allows the tiling work of a render pass to overlap with the rendering work of a previous pass. And of course, if there are no dependencies, Compute will always overlap.
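To see why this overlap matters, here is a small Python timeline model. It treats tiling and rendering as two pipeline stages and assumes that the tiling of a pass never depends on the rendering output of an earlier pass. The per-pass timings are invented numbers, not measurements.

```python
def frame_time(passes, overlap):
    """passes: list of (tiling_ms, rendering_ms) tuples in submission order."""
    if not overlap:
        # Fully serialized: every stage waits for the previous one.
        return sum(tiling + rendering for tiling, rendering in passes)
    tiling_done = 0.0
    rendering_done = 0.0
    for tiling_ms, rendering_ms in passes:
        tiling_done += tiling_ms  # tiling stages run back to back
        # Rendering starts once its own tiling and the previous rendering are done.
        rendering_done = max(rendering_done, tiling_done) + rendering_ms
    return rendering_done

passes = [(2, 5), (3, 4), (1, 6)]  # hypothetical timings in milliseconds
print(frame_time(passes, overlap=False))  # 21
print(frame_time(passes, overlap=True))   # 17.0
```

In this model the tiling of the second and third passes is hidden entirely behind the rendering of the pass before it.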

As you can see, Apple TBDR GPUs are great. And thanks to Metal, it's very easy to leverage the architecture.

Apple designed Metal to enable rapid innovations in GPU architecture. And in turn, the Apple GPU architecture has informed the design of Metal.

Metal is designed for Apple GPUs. It exposes a unified graphics and compute architecture as well as TBDR features such as Programmable Blending and Memoryless Render Targets. It also exposes an explicit submission model and explicit multi-threading.

This deep integration of hardware and software makes it possible to easily optimize advanced algorithms such as deferred rendering. Deferred rendering is a multi-pass algorithm that decouples rendering the scene properties from the actual lighting. In its most basic form, we can think of each of the algorithm stages as a rendering pass.

This is considered bandwidth-heavy because we need to write out and then sample a lot of data in order to produce the final lit render. It also requires a large memory footprint to store these intermediate textures, or G-Buffer. Of course, you know by now that by using Programmable Blending, efficient load and store actions and memoryless render targets, we can make this algorithm very efficient, both in terms of memory footprint and in terms of memory bandwidth.

You can find a lot of documentation on the topic of deferred rendering and all of the TBDR features in the following samples. So go check them out. Check the Deferred Lighting sample code, which is actually written in multiple languages, and also optimized for Apple TBDR GPUs. Now, let's move on to the second part of the talk. Modern Apple GPUs.

Starting with A11, we introduced a major GPU redesign. We redesigned and rebalanced the full GPU in order to support more modern rendering algorithms. These algorithms may require us to explicitly leverage the Apple TBDR architecture, manage complex data structures, and work with greater numerical accuracy. Since we also rebalanced the design, we made several rate improvements as well as increased some implementation limits.

Cool! So let's review the render pipeline changes.

This is the TBDR pipeline we've just seen.

And this is the new TBDR pipeline of modern Apple GPUs. Most of the changes occurred here. We have now an on-chip Imageblock as well as a new programmable stage called Tile Compute. So let's review that, starting with the Imageblock.

An Imageblock is a 2D data structure in Tile Memory. It has width, height and pixel depth. It can be accessed by both fragment functions and kernel functions.

Using this structure explicitly also has some efficiency gains.

Prior to Imageblocks, you probably moved your textures into Threadgroup Memory one pixel at a time. But the GPU didn't understand that you were operating on image data. You also had to store the texture one pixel at a time. With Imageblocks, you can load and store image data using a single operation. This is much more efficient.

So far, so good. But the Imageblock makes more sense when we talk about Tile Shading.

Tile Shaders are the compute kernels that we can dispatch in order to access the Imageblock mid-render pass. Dispatches are interleaved with draw calls executed in API submission order. And they will barrier against earlier and later draw calls, so synchronization should not be an issue.

Tile Shaders and Imageblocks will also help us with MSAA.

MSAA also got more efficient on our modern GPUs. Now, the GPU also tracks unique samples.

But so far, MSAA is fairly opaque to your application. You could enable or disable it for a render pass with no control on how the samples are resolved.

Leveraging the flexibility of Imageblocks and Tile Shading, Imageblock sample coverage control gives you access to each pixel's sample coverage tracking data for even more control of your multisample render passes. This allows you to leverage MSAA where you would not have before.

Some applications render complex scenes with lots of opaque geometry and lots of translucent geometry, like particles. This would have a negative impact on the performance due to the high number of small, translucent triangles which will have a lot of samples per pixel.

In this case, you may want to resolve your sample data with a tile pipeline before the heavy blending phase. With Imageblock Sample Coverage Control, you can resolve the sample data with a tile pipeline after rendering the opaque geometry to ensure that all of the pixels contain a single unique color.

Also, the resolve is fully programmable. You may actually want to implement a custom resolve for each type of render target, such as HDR color or linear depth.

Tile Shaders and Imageblocks add a great deal of flexibility to the GPU. They also help you leverage the TBDR architecture of our modern Apple GPUs.

You can learn more about Apple GPUs in the following Tech Talks. These Tech Talks also cover some great features we haven't talked about, such as raster order groups.

Now, let's switch back to Metal for a second.

Metal makes it possible to leverage the improved capabilities of Apple GPUs.

We will review an example for each of two major areas: first, the explicit control of Tile Memory, and second, GPU-driven rendering. Let's start with explicit control of Tile Memory. Metal allows you to take advantage of Tile Memory directly. Tile Memory is exposed through Tile Shaders, Imageblocks as well as Persistent Threadgroup Memory. You can use this to improve the efficiency of more complex algorithms, such as tiled deferred rendering.

This is a more advanced version of deferred rendering which is considered to be more efficient for a large number of lights. This algorithm adds an extra step to cull all the lights into a tile list that is used by a later compute pass to light the scene.

So, if we start from deferred rendering, we need to add an extra compute stage to cull the lights. Potentially, we could also use compute for the light accumulation.

But this would prevent TBDR GPUs from merging the passes, because Programmable Blending only allows us to work on a single pixel at a time, and this would make light culling far too expensive.

But Tile Shading allows us to work on a full tile. By using Tile Shading, we can interleave rendering and compute. This allows us to merge the three render passes into a single pass. And of course, this is much more efficient.
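The light culling step of tiled deferred rendering can be sketched on the CPU. The Python example below is a simplified two-dimensional version: lights are screen-space circles given as (center x, center y, radius) in pixels, and each tile keeps the lights whose circle touches its rectangle. The tile size, the function name, and the circle test are assumptions for illustration. On the GPU this per-tile work is what a tile shader would do, and a real implementation would typically test against the tile's view-space bounds instead.

```python
def cull_lights_per_tile(lights, width, height, tile_size=32):
    """Return {(tx, ty): [light indices]} for lights given as (cx, cy, radius)."""
    tiles_x = (width + tile_size - 1) // tile_size
    tiles_y = (height + tile_size - 1) // tile_size
    tile_lists = {}
    for ty in range(tiles_y):
        for tx in range(tiles_x):
            x0, y0 = tx * tile_size, ty * tile_size
            x1, y1 = min(x0 + tile_size, width), min(y0 + tile_size, height)
            visible = []
            for index, (cx, cy, radius) in enumerate(lights):
                # Closest point of the tile rectangle to the light center.
                nx = min(max(cx, x0), x1)
                ny = min(max(cy, y0), y1)
                if (cx - nx) ** 2 + (cy - ny) ** 2 <= radius ** 2:
                    visible.append(index)
            tile_lists[(tx, ty)] = visible
    return tile_lists

lights = [
    (10, 10, 5),  # small light well inside the top-left tile
    (32, 32, 4),  # light centered where all four tiles meet
]
tile_lists = cull_lights_per_tile(lights, 64, 64)
print(tile_lists)
# {(0, 0): [0, 1], (1, 0): [1], (0, 1): [1], (1, 1): [1]}
```

The lighting step then only loops over the short list for its own tile instead of over every light in the scene.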

But Metal also exposes the capabilities of the Apple GPUs through GPU-driven rendering. This is a fairly modern concept, possible in Metal thanks to Argument Buffers and Indirect Command Buffers. Traditionally, the render loop is driven by the CPU.

This is because the data set and the process required to traverse it are complex. We need several structures to define the entire scene. In this example, our scene contains a list of meshes, materials and models.

Each model will contain indices to a parent mesh and a material.

So far, so good. The actual real problem here is when we have to decide what to render. We just cannot render everything every frame.

The CPU will need to decide what geometry to render based on the occlusion culling from the previous frame as well as the view frustum. And of course, the CPU will also need to select the appropriate LOD.

This requires several synchronization points. The CPU needs to read occlusion data written by the GPU in order to issue the draw commands.

So there are two problems that we need to solve. First, the traversal of the scene description, and then the encoding of draw commands. Metal has the following building blocks to help. First, Argument Buffers, which make the scene data available on the GPU and also allow us to describe complex data structures.

And then we also have Indirect Command Buffers, which will allow the GPU to encode its own draw calls.

So this scene can be flattened to an array of Argument Buffer arrays. And these can be efficiently traversed by modern Apple GPUs.

Thanks to Argument Buffers, the GPU can now traverse the entire scene. And by using Indirect Command Buffers, the GPU can also issue draw commands. In this example, we can see a GPU-driven render loop.

This is how the GPU will manage the render loop. It will first traverse the scene to render occluders, then traverse the scene again to perform culling and the LOD selection and then render the scene one final time.
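The control flow of the culling and LOD selection traversal can be sketched in Python. This is only a CPU-side illustration with made-up types. In Metal the flat arrays would live in Argument Buffers and the resulting commands would be encoded into an Indirect Command Buffer by the GPU. The visibility test is reduced to a near/far range check, occlusion culling is left out, and the LOD thresholds are arbitrary.

```python
from dataclasses import dataclass

@dataclass
class Mesh:
    lod_index_counts: list  # index count per LOD, most detailed first

@dataclass
class Model:
    mesh_index: int      # index into the flat mesh array
    material_index: int  # index into the flat material array
    distance: float      # distance from the camera along the view direction
    radius: float        # bounding sphere radius

@dataclass
class DrawCommand:
    mesh_index: int
    material_index: int
    lod: int
    index_count: int

def encode_draws(models, meshes, near, far, lod_distances):
    """Cull each model against [near, far], pick a LOD, and emit a draw command."""
    commands = []
    for model in models:
        if model.distance + model.radius < near or model.distance - model.radius > far:
            continue  # outside the simplified view range
        mesh = meshes[model.mesh_index]
        # One LOD step for every distance threshold the model lies beyond.
        lod = sum(model.distance > limit for limit in lod_distances)
        lod = min(lod, len(mesh.lod_index_counts) - 1)
        commands.append(DrawCommand(model.mesh_index, model.material_index,
                                    lod, mesh.lod_index_counts[lod]))
    return commands

meshes = [Mesh([3000, 900, 150]), Mesh([600, 120])]
models = [
    Model(0, 0, distance=5.0, radius=1.0),
    Model(0, 1, distance=40.0, radius=1.0),
    Model(1, 0, distance=500.0, radius=2.0),  # beyond the far plane, culled
    Model(1, 1, distance=90.0, radius=2.0),
]
commands = encode_draws(models, meshes, near=0.1, far=200.0, lod_distances=[20.0, 80.0])
print([(c.mesh_index, c.lod, c.index_count) for c in commands])
# [(0, 0, 3000), (0, 1, 900), (1, 1, 120)]
```

Because every model only stores indices into flat arrays, the whole traversal is a simple loop, which is the property that makes it a good fit for GPU execution.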

This requires no synchronization between the CPU and the GPU.

You can learn how to implement these algorithms by studying the "Modern Rendering with Metal" sample code. This sample includes a full GPU-driven render loop. It is also written using modern constructs such as Argument Buffers or Indirect Command Buffers. It also leverages modern Apple GPU features such as Tile Shaders, Imageblocks, Sparse Textures and Variable Rasterization Rate.

And this was just a summary. You may actually want to follow up on more Metal talks. Also, do not forget to check out the "Modern Rendering with Metal" sample code.

And that's about it. Today we have talked about Apple GPUs and what makes them so unique and efficient. We've also reviewed some use cases in which you can use Metal in order to accelerate rendering algorithms.

So the next steps are for you to follow up on related talks about Apple GPUs and also explore the best practices for both Metal and Apple GPUs.

Thanks for watching.
