Working with Metal: Advanced

Building on the fundamentals, learn how to create advanced games and graphics applications with Metal. See how to construct your rendering pipeline, understand how to use compute and graphics together, and discover how to optimize your Metal-based app.


Hello. Welcome to the Advanced Session on Working with Metal.

My name is Gokhan Avkarogullari. My colleagues, Aaftab Munshi and Serhat Tekin, and I will be presenting this session.

This is the third session that we have about Metal today.

In the first session, Jeremy introduced Metal to us. He talked about the motivation behind Metal and the structures we built Metal on, and concluded with a demo from Crytek with thousands of draw calls.

We followed up with the second session, where we talked about the fundamentals of Metal.

We talked about creating your first application with Metal, drawing an animated triangle on the screen, and followed it up with the details about the shading language.

In this session, we're going to take a deeper dive into creating a full-blown graphics application with Metal. We'll follow that with data-parallel computing on the GPU using Metal, and finally we'll have a demo of the developer tools we built for Metal.

Ok, so let's talk about why multi-pass applications are relevant.

Modern graphics applications are very complicated pieces of software.

They're built using many, many advanced graphics and compute algorithms, applied in a piecewise manner. Basically, they're built on many hundreds of render-to-texture passes, compute passes, and blit passes that together generate a great-looking final image on the screen.

So we're going to talk a little bit about how to do that using Metal.

We will talk about multiple framebuffer configurations, rendering to off-screen and on-screen textures, rendering meshes with different states in different configurations, and how to set up multiple encoders.

We'll use a deferred lighting with shadow map example to walk through the code. This is a two-pass application we built for this presentation. In the first pass, the shadow map pass, we render the scene from the perspective of the directional light into a depth buffer. In the second pass we set up a fat G-buffer through multiple render targets. We generate attributes in that G-buffer and apply the point light volumes onto it to find out which pixels are affected. Finally, using framebuffer fetch, we create light accumulation information and merge it with the albedo texture to generate the final image.

Having said that, we're not really interested in describing the example itself; it's just a vehicle for talking about the APIs. The example is published as sample code on the developer website, so you can download it and take a look later if you like. The example has two passes, and both are RenderCommandEncoder passes: the first is for the shadow map and the second is for deferred lighting.

Both are encoded into a single command buffer, and that command buffer goes into a command queue. This is what most applications will look like: multiple render-to-texture passes encoded into a command buffer on a frame boundary, which is then sent to the command queue.

I'll give a demo of the application.

Ok, I have it here. This is the output of the first pass, where we render the depth buffer from the perspective of the directional light.

And this is how our G-buffer is laid out. On the top left we would normally have the albedo texture, but we're showing the combined image that goes to the screen once the entire rendering is done. There's also a normal buffer, a depth buffer, and a light accumulation buffer: four color buffers attached to a framebuffer, along with depth and stencil buffers.

This is the light accumulation buffer, produced by the light accumulation pass.

And this is a visualization of the lights using light volumes, mapped onto the screen.

And finally this is the final image that goes to the display. It looks a lot better on the device than on the presentation. Ok, let's go back to our presentation.

Ok, so how do we set up an application like this, or the more complicated ones you have seen in the demos, using Metal? Just as Jeremy did in the first session, we look at how frequently things are done and move the heaviest work to the least frequent stages. An application has things that are done once, things done at level load time or streaming time, things done every frame, and things done for every render-to-texture pass. We'll look at each category, figure out what is done in it, and see how to do that with Metal.

Let's start with the things that are done once: creating a device and a command queue. There's only one GPU in the system, so you get one device for it, and most applications create a command queue on it only once. Richard covered this in the previous session, so I'm not going to talk about it here.

There are things we do as needed. For example, in all of our applications we know up front what our render-to-texture stages will be. There is usually a set of render-to-texture passes known up front for, say, an indoor or an outdoor environment. They might change if something like wetness effects is added, but most of them are known in advance. As an application developer, you know what your render-to-texture passes will be, in what order they'll run, and what kind of framebuffers they'll use. So we can create the framebuffer textures for those passes, and define what those textures look like, once and up front.

At level load time, we can load our assets, including meshes, textures, and shaders, and use those shaders to create pipeline state objects. Of course, we can create our uniform buffers up front as well.

These are the things that we do either at level load time at once or depending on if we're going to stream load or not, at other times as well but only as needed.

In our example, we use a single command buffer to submit a set of render-to-texture passes so that their results show up on the screen, which makes it a frame-boundary operation. Every time we want something to go to the screen, we create a command buffer and use it to encode our render-to-texture passes, so this is something we do every frame. Uniform buffers that need to be updated on frame boundaries are updated here as well.

And finally, the things we do for every render-to-texture pass: encoding commands the GPU can understand, setting up states and resources, and issuing draw calls. Then we finish the encoding so we can move on to the next encoder.

So these are the things we do every render to texture pass and we're going to basically look at each one of them and understand how Metal is used to do these operations.
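The four frequency categories above can be sketched as a small C++ model. This is a hypothetical CPU-side illustration, not the Metal API. `Renderer`, `loadLevel`, `drawFrame`, and the counters are invented names, and the comments only mark where the real Metal work would happen.

```cpp
#include <cassert>

// Hypothetical model, not the Metal API: each method stands in for one
// update-frequency category, and the counters record how often each kind of work runs.
struct WorkCounters {
    int deviceAndQueueSetups = 0;  // once per app
    int levelLoads = 0;            // as needed: level load or streaming
    int commandBuffers = 0;        // every frame
    int passesEncoded = 0;         // every render-to-texture pass
};

class Renderer {
public:
    explicit Renderer(int passesPerFrame) : passesPerFrame_(passesPerFrame) {
        assert(passesPerFrame > 0);
        counters.deviceAndQueueSetups++;  // device and command queue: created once
    }

    void loadLevel() {
        // Render pass descriptors, framebuffer textures, meshes, uniform buffers,
        // and pipeline state objects would be built here, off the draw path.
        counters.levelLoads++;
    }

    void drawFrame() {
        counters.commandBuffers++;  // a fresh command buffer for each frame
        for (int pass = 0; pass < passesPerFrame_; ++pass) {
            counters.passesEncoded++;  // one encoder per render-to-texture pass
        }
    }

    WorkCounters counters;

private:
    int passesPerFrame_;
};
```

With two passes per frame, one level load followed by 60 frames leaves one setup, one level load, 60 command buffers, and 120 encoded passes. The expensive work sits in the least frequent categories.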

Ok, let's start with the things we do as needed. We'll see how to set up the information for our render-to-texture passes up front, so that when we create our encoders, everything needed for encoding is ready.

But before we go there, I'd like to say a little about descriptors, because the code examples have lots of them. Every descriptor defines how an object is going to be created. It's like a blueprint: once a house is built from a blueprint, there is no connection back to it. You can't change the blueprint and see the change in an already-built house. Likewise, you can't change a descriptor and expect the object built from it to change. Descriptors define what objects are going to be, but once the objects are created, the connection is gone.

But just like a blueprint, a descriptor can be used to create more instances of the same kind of object. You can also modify it a little to create a different object that shares some characteristics with the previous one.

And as Jeremy pointed out before, everything except a few states you set on the render encoders is built into the state objects and resources. Those are immutable, which gives us the opportunity to avoid state validation at draw time. A descriptor builds an object, and that object is immutable from then on. That is what makes Metal a lower-overhead API: it avoids tracking state changes and reflecting them at draw time.
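To make the blueprint analogy concrete, here is a hypothetical C++ sketch. `TextureDescriptor`, `Texture`, and `PixelFormat` are illustrative stand-ins, not Metal classes. The object copies the descriptor at construction, so editing the descriptor afterward only affects objects built later. Modifying and reusing one descriptor yields a second, different texture, which is the same pattern the G-buffer textures use later in this session.

```cpp
#include <cassert>

// Hypothetical stand-ins, not the real Metal classes.
enum class PixelFormat { RGBA8Unorm, RG16Float, Depth32Float };

struct TextureDescriptor {  // mutable blueprint
    PixelFormat pixelFormat = PixelFormat::RGBA8Unorm;
    int width = 0;
    int height = 0;
    bool mipmapped = false;
};

class Texture {
public:
    // The object copies what it needs; no link back to the descriptor remains.
    explicit Texture(const TextureDescriptor& desc) : desc_(desc) {
        assert(desc.width > 0 && desc.height > 0);
    }
    PixelFormat pixelFormat() const { return desc_.pixelFormat; }
    int width() const { return desc_.width; }
    int height() const { return desc_.height; }

private:
    const TextureDescriptor desc_;  // immutable after construction
};
```

Building one texture, switching the descriptor's pixel format, and building a second texture leaves the first texture's format unchanged.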

Ok, let's go back to our framebuffer configuration and how we set it up.

Ok, so for RenderCommandEncoders, we need to know the nature of the framebuffer: how many color attachments there are, what pixel formats they use, the depth and stencil attachments, things of that sort. Those are defined through a RenderPassDescriptor. Metal allows up to four color attachments, and we can attach a depth buffer and a stencil buffer as well. Each attachment is described by another descriptor embedded in the RenderPassDescriptor, the RenderPassAttachmentDescriptor. In it we define the load and store actions, the clear values, and which slice or mipmap level we're going to render into.

And it also points to the texture that we're going to use to render into.

So this pass descriptor basically has all the information necessary for a RenderCommandEncoder to get going. And we're going to look at the two passes that we have; the shadow pass and the deferred lighting pass in our example and build render pass descriptors for those.
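As a rough model of that structure, here is a C++ sketch of a render pass descriptor. It has four color attachment slots plus depth and stencil attachments. All names are hypothetical and only mirror the concepts from the talk: load action, store action, clear value, mipmap level, slice, and target texture. The real Metal classes have their own names and defaults.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical model, not the Metal API.
enum class LoadAction { DontCare, Load, Clear };
enum class StoreAction { DontCare, Store };

struct Texture {
    int id;  // placeholder for a real GPU texture
};

struct AttachmentDescriptor {
    const Texture* texture = nullptr;  // may stay null until the drawable is known
    LoadAction loadAction = LoadAction::DontCare;
    StoreAction storeAction = StoreAction::DontCare;
    std::array<double, 4> clearColor{{0.0, 0.0, 0.0, 1.0}};  // used by color attachments
    double clearDepth = 1.0;                                   // used by the depth attachment
    int level = 0;  // mipmap level to render into
    int slice = 0;  // array slice to render into
};

constexpr std::size_t kMaxColorAttachments = 4;

struct RenderPassDescriptor {
    std::array<AttachmentDescriptor, kMaxColorAttachments> colorAttachments{};
    AttachmentDescriptor depthAttachment;
    AttachmentDescriptor stencilAttachment;

    const AttachmentDescriptor& color(std::size_t index) const {
        assert(index < kMaxColorAttachments);  // only four slots exist
        return colorAttachments[index];
    }

    int boundColorAttachmentCount() const {
        int count = 0;
        for (const AttachmentDescriptor& a : colorAttachments) {
            if (a.texture != nullptr) ++count;
        }
        return count;
    }
};
```

A shadow pass in this model binds only the depth attachment and leaves all four color slots empty.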

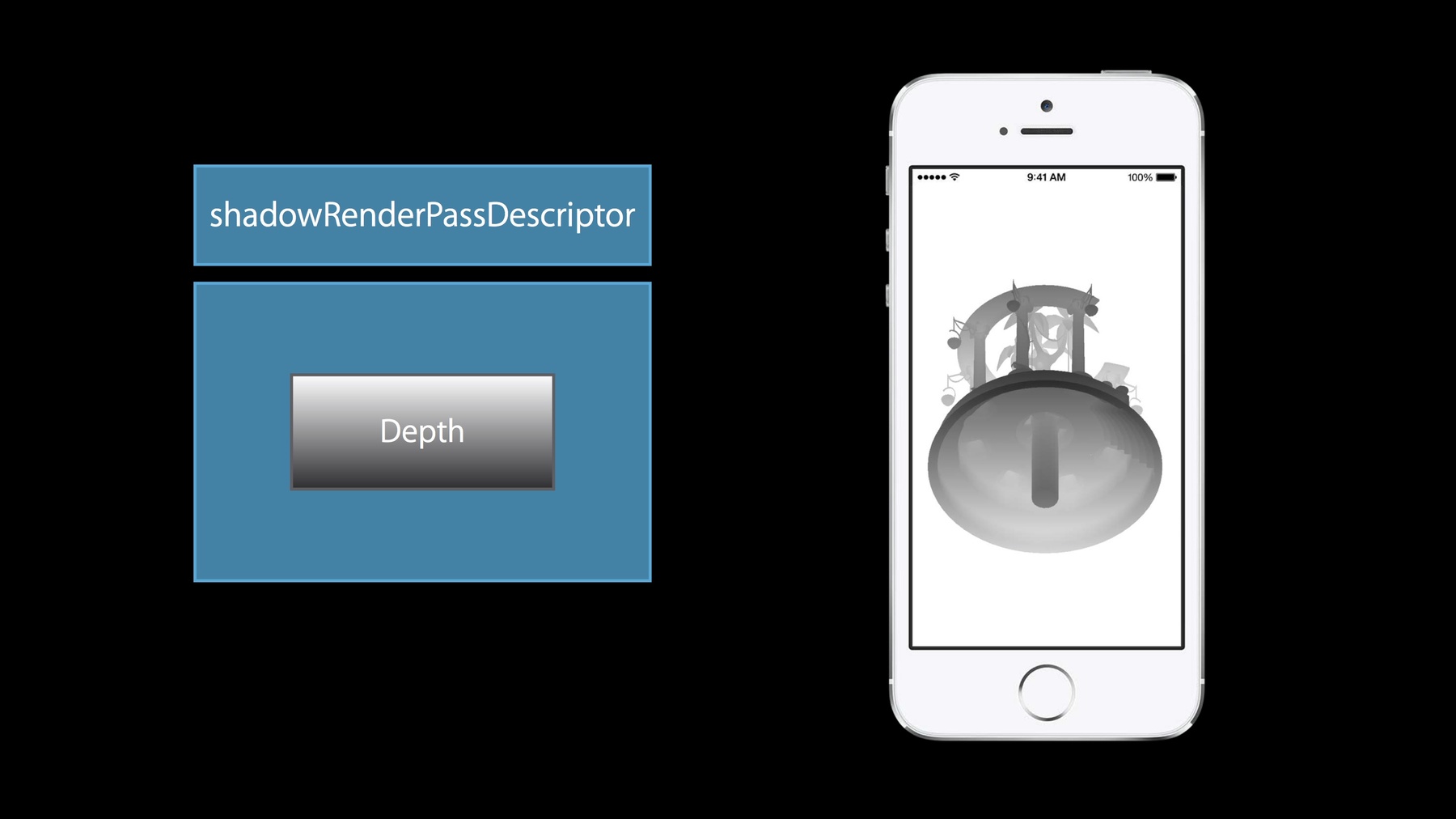

So let's start with the shadowRenderPass.

In this case we only have a depth buffer. There are no color buffers attached, because all we want is a shadow map we can use later to determine whether a pixel is in shadow.

So we start by creating the texture: a 1k by 1k, 32-bit depth texture with no mipmapping.
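For a sense of scale, the shadow map's footprint is width × height × bytes per pixel. Assuming a 32-bit depth format (4 bytes per pixel), that is 1024 × 1024 × 4 = 4,194,304 bytes, or 4 MiB. The hypothetical helpers below compute that. They also show what a full mip chain would have added, roughly one third more.

```cpp
#include <cassert>
#include <cstdint>

// Bytes for one mip level: width * height * bytes per pixel.
constexpr std::uint64_t levelBytes(std::uint64_t width, std::uint64_t height,
                                   std::uint64_t bytesPerPixel) {
    return width * height * bytesPerPixel;
}

// Bytes for a full mip chain, halving each dimension (minimum 1) down to 1x1.
// Width and height must be at least 1.
constexpr std::uint64_t mipChainBytes(std::uint64_t width, std::uint64_t height,
                                      std::uint64_t bytesPerPixel) {
    std::uint64_t total = 0;
    for (;;) {
        total += levelBytes(width, height, bytesPerPixel);
        if (width == 1 && height == 1) break;
        width = width > 1 ? width / 2 : 1;
        height = height > 1 ? height / 2 : 1;
    }
    return total;
}

// Assumed 32-bit depth format: 4 bytes per pixel.
constexpr std::uint64_t kShadowMapBytes = levelBytes(1024, 1024, 4);
static_assert(kShadowMapBytes == 4u * 1024u * 1024u, "1k x 1k x 4 bytes = 4 MiB");
```

With mipmapping, the same texture would take 5,592,404 bytes instead of 4,194,304.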

The texture is created on the device, as we talked about extensively before. Unlike GL, Metal has no concept of contexts or share groups.

Every resource is created on the device and is visible to the GPU, and you can modify its contents independently. That means the texture data or buffer data, not the objects themselves, and there is no requirement to bind a resource in order to modify it. You can modify it any time you want, as long as it isn't being modified while the GPU is accessing it and you follow the memory coherency rules at command buffer boundaries. After we have our texture, we can create our render pass descriptor.

We assign this texture to the depth attachment of the render pass descriptor, since it is the only attachment.

Then we define properties such as the clear value and the load and store actions. We talked extensively about load and store actions before. They're very important for performance, so pay attention to setting them correctly; most of the time the default values are the most sensible ones.

Ok, let's go to our second pass. We also need a descriptor for the second pass, which is the second render command encoding. That information is available up front, and the textures are created up front, so we don't pay the penalty of creating them at draw time.

In this example we have four color buffers, and one of them is interesting: the one on the top left. It is the buffer we render into and then send to the display, so we cannot create that texture up front. We need to get it from the CAMetalLayer. We talked extensively about this in the second session, where Richard showed sample code for getting that texture from the Metal layer.

The other three color textures, along with the depth and stencil textures, are known up front. They don't change, so we can create them now instead of later. Let's go ahead and do that.

Ok, we start again with a texture descriptor to define what our texture is going to look like. In this example I create only two of the color textures; the rest are very similar.

We need a texture with the width and height of the displayable surface, and no mipmapping is required. We create our first texture using this texture descriptor. As I said before, descriptors are blueprints, so we can use the same descriptor to create the second texture as well, and that's what we do here. We modify the descriptor to change the pixel format. The width, height, and mipmapping properties stay the same, so we don't touch them. Then we create the second texture from it. So one descriptor creates two different textures.

We do the same for the third color attachment and for depth and stencil. With all of those done, we can create our RenderPassDescriptor, which we'll use later to create our RenderCommandEncoder.

We first create it.

What is interesting here, as I explained before, is that we don't have a texture ready up front for the first color attachment. We'll have it when we actually start setting up our frame.

So its texture is set to nil initially, and we'll get it from the drawable when we create our RenderCommandEncoder.

But even without a texture, we know the actions up front, so we can define the clear value and the load and store actions on the attachment descriptor now.

And we can go ahead and do it for the second color attachment. As you can see in this one, I used the texture that we created in the previous slide as an attachment.

And we can also define the clear value, load and store actions up front over here.

I'd like to point out the difference in store actions between these two. We want the first attachment to end up on the screen, so it must be stored to memory.

But the second, third, and fourth color attachments and the depth and stencil buffers can be discarded. They're just intermediate values used to generate the first attachment's values. We don't need to store them to memory, and we don't want to waste memory bandwidth and GPU cycles on that expensive operation. So we set them to "don't care" and let the driver pick the best operation for the particular GPU you're running on.
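To put a number on the bandwidth argument, here is a back-of-the-envelope C++ sketch. Purely for illustration, it assumes a 1136 × 640 drawable, 4 bytes per pixel for each color attachment and for depth, and 1 byte for stencil. The real formats, and what the driver does on a given GPU, may differ. `AttachmentStore` and `storeBytesPerFrame` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// One attachment's cost at the end of a pass: only "store" attachments are
// written back to memory; "don't care" ones can be dropped after the pass.
struct AttachmentStore {
    std::uint64_t bytesPerPixel;
    bool stored;
};

std::uint64_t storeBytesPerFrame(std::uint64_t width, std::uint64_t height,
                                 const std::vector<AttachmentStore>& attachments) {
    assert(width > 0 && height > 0);
    std::uint64_t bytesPerPixel = 0;
    for (const AttachmentStore& a : attachments) {
        if (a.stored) bytesPerPixel += a.bytesPerPixel;
    }
    return width * height * bytesPerPixel;
}
```

Under those assumptions, storing all six attachments writes 15,267,840 bytes per frame. Storing only the first color attachment writes 2,908,160 bytes. The "don't care" actions therefore avoid roughly 12.4 MB of writes every frame.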

Ok, so that covers how we create render pass descriptors that we want and the textures associated with the framebuffers that we are going to use later for creating render command encoders when we do render to texture passes.

Now let's look at the textures, buffers, and state objects, specifically the pipeline state objects, that we create at level load time so we don't have to deal with them later.

We've already seen how to create textures from texture descriptors; I am not going to go into details of that. I just want to point out that there are multiple ways to upload data into your texture.

This is one of them: basically a CPU copy.

You can also use a blit command encoder to upload the data through the GPU.

We talked about buffers before. There's no concept of a vertex buffer or a uniform buffer. Buffers are just buffers of raw data. You can create them for anything you want and access them from the GPU in your shaders when you specify them as inputs. All we're doing here is creating one with a given size and options.

And as I said before, you can modify buffers really easily. For example, while the GPU is working on one section of a buffer, you can modify another section. You need to take care of synchronization and follow the memory coherency rules, but you don't have to lock the buffer or map it to get a pointer; the pointers are always available. You're free to modify the contents however and whenever you want. As long as you pay attention to CPU and GPU synchronization, it will work correctly.
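One common way to modify one section of a buffer while the GPU reads another is a ring of per-frame regions inside a single buffer. The sketch below is a hypothetical C++ model of the offset arithmetic only. `kFramesInFlight = 3` and the 256-byte alignment are assumptions, not values from the talk. A real app still has to wait before the CPU reuses a region, for example on a semaphore signaled when the command buffer that read it completes.

```cpp
#include <cassert>
#include <cstddef>

// Assumed number of frames the CPU may run ahead of the GPU.
constexpr std::size_t kFramesInFlight = 3;

// Round size up to a multiple of alignment (alignment must be non-zero).
constexpr std::size_t alignUp(std::size_t size, std::size_t alignment) {
    return (size + alignment - 1) / alignment * alignment;
}

// One buffer holding kFramesInFlight copies of the per-frame uniforms.
struct UniformRing {
    std::size_t alignedSize;

    UniformRing(std::size_t uniformSize, std::size_t alignment)
        : alignedSize(alignUp(uniformSize, alignment)) {}

    std::size_t totalBytes() const { return alignedSize * kFramesInFlight; }

    // Region the CPU writes for this frame; the GPU may still be reading the others.
    std::size_t offsetForFrame(std::size_t frameIndex) const {
        std::size_t offset = (frameIndex % kFramesInFlight) * alignedSize;
        assert(offset + alignedSize <= totalBytes());
        return offset;
    }
};
```

With 200 bytes of uniforms and 256-byte alignment, frames 0, 1, and 2 write at offsets 0, 256, and 512. Frame 3 wraps back to offset 0.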

And finally, one other example: a depth stencil state. I showed this one before. I don't have much to say about it, except that it's interesting because it has embedded descriptors: the depth stencil descriptor has stencil descriptors defined within it. You can create all of your depth stencil states up front. Just as you know your render-to-texture passes, you know how your objects will be rendered into those textures. For example, in the shadow map pass you don't need to write into the stencil buffer, so you can express that here. When you draw something opaque you update the depth buffer, but for something blended you might choose not to. If you know all the states up front, you can create them up front and not pay the penalty at draw time.
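Because every depth configuration is known in advance, the states can be built once and only looked up at draw time. Here is a hypothetical C++ sketch of that pattern. The struct and enum names are illustrative, not Metal's, and the three configurations follow the examples above: shadow casting, opaque, and blended.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

enum class CompareFunction { Less, LessEqual };

struct DepthStencilState {  // treated as immutable once built
    CompareFunction depthCompare;
    bool depthWriteEnabled;
};

enum class DrawKind : std::size_t { ShadowCaster, Opaque, Blended, Count };

class DepthStencilStates {
public:
    // Built once at load time, in DrawKind order.
    DepthStencilStates()
        : states_{{
              {CompareFunction::Less, true},   // ShadowCaster: depth only, no stencil use
              {CompareFunction::Less, true},   // Opaque: test and write depth
              {CompareFunction::Less, false}   // Blended: test depth, don't write it
          }} {}

    // Draw-time lookup: no creation or validation, just an index.
    const DepthStencilState& get(DrawKind kind) const {
        std::size_t index = static_cast<std::size_t>(kind);
        assert(index < states_.size());
        return states_[index];
    }

private:
    std::array<DepthStencilState, static_cast<std::size_t>(DrawKind::Count)> states_;
};
```

At draw time the renderer asks for `states.get(DrawKind::Blended)` and gets back a reference to an object that already exists.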

Ok so we're going to talk about next the render pipeline state objects but before we go there, I'd like to revisit the kind of the OpenGL view of the GPU.

And then go and basically talk about what's the motivation of RenderPipelineState objects and what is included in them.

This is roughly how OpenGL presents the GPU. There's a separate vertex shader stage and fragment shader stage. All the other operations look like fixed-function hardware controlled through state-changing APIs. Unfortunately, modern hardware doesn't really work that way.

Think about it: meshes are authored separately from vertex shaders, so your vertex layout may not match your vertex shader. When that happens, some code has to run somewhere to bridge the gap between the two layouts, either in the driver or as vertex fetch shader code.

Or take GPUs that are tile-based deferred renderers. Most of the framebuffer is actually on the GPU while you're working on it, so blending or a write mask is a read-modify-write operation on the tile. We do that with shader code running on the shader core; it's not really a state. Suppose you make a draw call with one blending mode and some compiled shaders, then change your blend state and make another draw call with the same compiled shaders. Now we have to generate new code for this new framebuffer configuration. We've never seen it before, and the fixed-function-looking operation runs in the shader core. We end up recompiling your shader, causing a hitch in your application.

So we recognized the difference between how the API looks in OpenGL and how a GPU actually behaves. We decided to put everything in the GPU state that turns into shader code, or has an impact on shader code, in one place: the render pipeline state object. Let's look at what's in it.

It has the vertex fetch information, the vertex layout we talked about before. Aaftab gave a good example of it in his talk about the language.

Obviously, it includes the shaders. It also has the framebuffer configuration: the number of render targets, pixel formats, sample count, write mask, blend information, and depth and stencil state.

Now, we didn't go as far as including everything in the GPU state in the render pipeline state object. If we had, you would be creating millions of them, and only a few would be unique. So anything that can be updated easily, or that really is fixed-function hardware, is not in the render pipeline state object. Inputs are an example. We need to know the layout of a buffer, but not which particular buffer you're using, because it's really easy to update the pointer to the buffer in the hardware.

The same holds for buffers used as outputs and for framebuffer textures. We need to know how they're configured, but not which specific ones are used at any time. Primitive setup state is also not included in the render pipeline state object: cull mode and facing orientation, viewport and scissor information, and depth bias, clamp, and slope. But everything that affects cost is included in the pipeline state object, so everything you create is explicit and you pay its cost up front. There are no hidden costs, no deferred state validation, and no late compilation in Metal. You know what you're doing and why, and the cost is paid up front when you actually do it.

So let's go and create a few of those.

There's a render pipeline state object associated with every draw call. In our example, we render the temple mesh twice. The first time creates the depth buffer for the shadow map. The second time is in the deferred lighting pass, for light accumulation. So we create two pipeline state objects, and I'll show how to create each.

We create a new descriptor. As we talked about before, you have hopefully compiled your shaders in Xcode on the host side into a library, so we can get the vertex shader from the library.

Now we set the rest of the states: the vertex function, and depthWriteEnabled set to true because we want to update the depth buffer.

Interestingly, since there's no color buffer attached, you can set the fragment function to nil; there is no fragment shader work for this drawing configuration. Once that information is in place, we use the descriptor to create the render pipeline state object for the temple mesh in the shadow map pass.

Let's do the same for the deferred lighting pass. It is richer in terms of framebuffer configuration and the operations taking place. So we have both a vertex and a fragment shader, and we define the pixel formats for all attachments.
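The split between baked and dynamic state can be modeled as a cache keyed by everything that is baked into the pipeline. This C++ sketch is hypothetical. `PipelineKey`, `DynamicEncoderState`, and `PipelineCache` are invented names, pixel formats are placeholder integers, and the compile counter stands in for shader compilation. The shadow pipeline has no fragment function and no color formats, mirroring the temple mesh example.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <tuple>
#include <vector>

// Baked into the pipeline: anything that can change generated shader code.
struct PipelineKey {
    std::string vertexLayout;            // vertex fetch description
    std::string vertexFunction;
    std::string fragmentFunction;        // empty: no fragment stage (shadow pass)
    std::vector<int> colorPixelFormats;  // placeholder format ids, one per attachment
    int depthPixelFormat = 0;            // placeholder format id
    int sampleCount = 1;
    bool blendingEnabled = false;

    bool operator<(const PipelineKey& other) const {
        return std::tie(vertexLayout, vertexFunction, fragmentFunction,
                        colorPixelFormats, depthPixelFormat, sampleCount,
                        blendingEnabled) <
               std::tie(other.vertexLayout, other.vertexFunction,
                        other.fragmentFunction, other.colorPixelFormats,
                        other.depthPixelFormat, other.sampleCount,
                        other.blendingEnabled);
    }
};

// Not baked: cheap to change between draw calls on an encoder.
struct DynamicEncoderState {
    int boundVertexBuffer = 0;  // which buffer, not its layout
    bool cullBackFaces = true;
    float depthBias = 0.0f;
};

class PipelineCache {
public:
    // Called at load time; an unseen key means a new, expensive compile.
    int build(const PipelineKey& key) {
        assert(key.sampleCount >= 1);
        auto it = cache_.find(key);
        if (it != cache_.end()) return it->second;
        ++compileCount;
        int id = static_cast<int>(cache_.size());
        cache_.emplace(key, id);
        return id;
    }

    int compileCount = 0;

private:
    std::map<PipelineKey, int> cache_;
};
```

Building the shadow and G-buffer pipelines compiles twice, and asking for the shadow pipeline again hits the cache. Turning on blending produces a new key and a third compile. Changing the cull mode in `DynamicEncoderState` never touches the cache.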

So we have now defined our render pass descriptors, created buffers for meshes and uniforms, created and uploaded our textures, and created our render pipeline state objects. I think we can now get into the business of drawing things on the screen.

Richard did a great job explaining how command buffers are formed and used, so I'll only revisit them briefly as a reminder. We have a single command queue, which is our channel to the GPU, and we ask the command queue to give us a new command buffer. Once we have encoded all our render-to-texture passes, we send it to the GPU by issuing a commit call. Finally, command buffers are not reused. Once one has been used, we get rid of it by setting it to nil, and for the next frame we get a new one.

One thing I'd like to point out, just as Richard did in the previous session: if you want the results of your command buffer to show up on the screen, you need to schedule the present before committing the command buffer. You call the addPresent API to schedule it. Then, when the GPU executes the command buffer, the display knows about it and shows your image.
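The command buffer rules above can be captured in a small hypothetical C++ model: a new buffer per frame, presentation scheduled before commit, and no reuse after commit. `CommandQueue`, `CommandBuffer`, and `schedulePresent` are illustrative names. In the real API, the present call is the one described in the talk.

```cpp
#include <cassert>
#include <memory>

// Hypothetical model of the per-frame command buffer rules, not the Metal API.
class CommandBuffer {
public:
    bool schedulePresent() {  // must happen before commit
        if (committed_) return false;
        presentScheduled_ = true;
        return true;
    }

    bool commit() {  // one-shot: a committed buffer is never reused
        if (committed_) return false;
        committed_ = true;
        return true;
    }

    bool willPresent() const { return committed_ && presentScheduled_; }

private:
    bool committed_ = false;
    bool presentScheduled_ = false;
};

class CommandQueue {
public:
    std::unique_ptr<CommandBuffer> makeCommandBuffer() {  // a new one every frame
        ++buffersCreated;
        return std::make_unique<CommandBuffer>();
    }

    int buffersCreated = 0;
};
```

In this model, a buffer committed without a scheduled present never reaches the display. Scheduling the present after commit is rejected.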

Ok, now the exciting part: creating command encoders and actually encoding things. It is exciting, but there's not much left to do at this point, because you have done everything up front.

So all we do this time is create an encoder from the command buffer. Only one command encoder can be active on a command buffer at any time. There's one exception: the ParallelRenderCommandEncoder. Please take a look at the programming guide to understand when to use it. It's mostly for when you have a huge render-to-texture pass and want to parallelize encoding across multiple CPUs; that's the only time you're going to use it.

But for most applications, there will be one encoder active at any time: a RenderCommandEncoder, a blit command encoder, or a compute command encoder.

So we create one here: a RenderCommandEncoder for our shadow map pass, created from the shadow map pass descriptor we built a few minutes ago. That descriptor has all the information about the depth attachment and how it's defined, everything needed to create this encoder. Then we just set the states. They were all baked beforehand, so we don't have to validate them.

We set buffers, textures, and samplers as inputs to our draw calls, and then issue the draw calls. You repeat this, hopefully thousands of times, each with a different state. The driver can fetch the states from a pre-baked place instead of creating or validating them on the fly, which makes Metal significantly faster than the alternatives. Once you're done, you finish encoding by calling endEncoding.

At this point the commands have been created in the command buffer, but they have not been submitted to the GPU. They can't execute on the GPU yet, because that happens only when you commit the command buffer, not when you finish encoding. Now let's create the encoder for the second pass. It has an interesting feature: we couldn't finish its render pass descriptor early, because we didn't have the drawable's texture at that point.

In the previous session you saw how to get that texture from the drawable. We get it and assign it to the texture property of our first color attachment.

The rest of the descriptor was fully defined before. We already knew which textures to use and how many there were, so we don't need to modify that part. We update only this part of the descriptor and use it to create the RenderCommandEncoder for our deferred lighting pass.

We do the usual: issue draw calls, then close the encoder with endEncoding. This application has only two passes, so we then call commit on the command buffer. It goes to the GPU, and the GPU starts executing the commands we just encoded.
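The encoding rules from this walkthrough can be sketched as a hypothetical C++ model. There is at most one active encoder per command buffer, and nothing executes on the GPU until commit. The names are invented, and the `executedPasses` list only stands in for GPU execution order.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical model: at most one encoder is active on a command buffer, and
// encoded passes only "run" once the whole buffer is committed.
class CommandBuffer {
public:
    bool beginEncoder(const std::string& passName) {
        if (encoderActive_ || committed_) return false;  // previous encoder not ended
        encoderActive_ = true;
        encoded_.push_back(passName);
        return true;
    }

    void endEncoding() {
        assert(encoderActive_);
        encoderActive_ = false;
    }

    bool commit() {
        if (encoderActive_ || committed_) return false;
        committed_ = true;
        executed_ = encoded_;  // stand-in for GPU execution, in encoding order
        return true;
    }

    const std::vector<std::string>& executedPasses() const { return executed_; }

private:
    bool encoderActive_ = false;
    bool committed_ = false;
    std::vector<std::string> encoded_;
    std::vector<std::string> executed_;
};
```

Encoding the shadow pass and then the deferred lighting pass leaves nothing executed until `commit()`. After the commit, both passes run in the order they were encoded. Trying to open the second encoder before ending the first one fails.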

Ok, that's all I wanted to say about structuring your application to take advantage of Metal. Next we're going into a really interesting new feature on iOS, data-parallel computing on the GPU with Metal, and Aaftab will talk about that. Thank you.

Thanks Gokhan.

All right, I'm back. I have 15 minutes to talk about data-parallel computing, so I want to focus on the key points. We'll start with a very high-level overview of what data-parallel computing is and how Metal does it. Then we'll look at how to write kernels in Metal and how to execute them, using a post-processing example.

All right, let's start. What is data-parallel computing? Imagine you have a piece of code, say a function, that you can execute on all elements of your data, such as elements in an array or pixels of an image.

And these computations are independent of each other. That means if I have multiple threads of execution, I can run them all in parallel; that's what I mean by data-parallel computing. This is a very simple form of data-parallel computing, and I'll talk a bit more about additional things you can do with it in Metal. A classic example is blurring an image. For each pixel, you look at a nearby region and apply a filter, and I can execute these in parallel. If I had a 1k by 1k image and a million threads, I could process every pixel in parallel. So how does that work in Metal? The code that you execute in parallel, we call a kernel.
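The blur example can be emulated on the CPU to show why independence matters. In this hypothetical C++ sketch, `blurKernel` computes one output element of a 1D box blur from its index and only reads the input. That lets `dispatch` run the invocations in any order, forward or reversed, with the same result. It illustrates the idea only and is not Metal code; a GPU would run the invocations concurrently.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// The "kernel": computes exactly one output element of a 1D box blur from its
// index, reading only the input, so invocations never depend on each other.
void blurKernel(const std::vector<float>& in, std::vector<float>& out,
                std::size_t id, std::size_t radius) {
    assert(id < in.size() && out.size() == in.size());
    std::size_t lo = id >= radius ? id - radius : 0;
    std::size_t hi = std::min(in.size() - 1, id + radius);
    float sum = 0.0f;
    for (std::size_t i = lo; i <= hi; ++i) sum += in[i];
    out[id] = sum / static_cast<float>(hi - lo + 1);  // average of in-range neighbors
}

// Runs the kernel once per index. A GPU would run these concurrently; here the
// order can be reversed without changing the result.
void dispatch(std::size_t count, bool reverseOrder,
              const std::function<void(std::size_t)>& kernel) {
    for (std::size_t n = 0; n < count; ++n) {
        kernel(reverseOrder ? count - 1 - n : n);
    }
}
```

Blurring `{0, 0, 3, 0, 0}` with radius 1 gives `{0, 1, 1, 1, 0}` in both dispatch orders.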

And the thing that executes independently, we call a work-item. On a GPU, a thread may contain multiple work-items, because a thread may execute several of them in SIMD fashion, and then you have multiple threads. So the work-item is what identifies each independent execution instance.

You're going to stop me right there and say, "Hey, that looks just like a fragment shader," right? Because that's what fragment shaders do: you execute the shader in parallel on multiple fragments, and they don't talk to each other. They are in their own world; they take inputs and produce outputs. So what is this data-parallelism? That is indeed one form: we call it SIMD parallelism, and it is a data-parallelism model supported in Metal. The only benefit here is that you no longer have to create a graphics pipeline. You don't specify a vertex shader, a fragment shader, state, and things like that. You just say, "Here is my function, here is my problem size, go execute this function over it."

But there's more to it than that. There are a number of data-parallel algorithms in which you want work-items to communicate with each other. Let's take a very simple example: I want to sum the elements of an array, which is the classic reduction problem. In that case, work-items generate partial results, and you keep looping until you finally have two partial sums, and then you get the final sum. To make this work, work-items need to know the results generated by other work-items. So they need to be able to talk, and talking is always good. In Metal you can say, "Hey, these work-items work together." We call that a work-group.

The work-items in a work-group can share data through what we call local memory. Remember that in the previous session we talked about the global and constant address spaces; for kernels, you get an additional high-bandwidth, low-latency memory we call "local memory." Think of it as a user-managed cache; that's all it is. Work-items in a work-group can also synchronize: when they want to communicate, they need to make sure they all arrive at the same point before they exchange data. You get all of that with data-parallelism in Metal.
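A CPU sketch, in C++ rather than Metal, of the array-sum reduction using work-groups. The `local` vector stands in for a work-group's local memory, and the comments mark where a real kernel would place a barrier; finishing each step's loop before the next step starts is how this serial model emulates that barrier. The power-of-two group size, zero padding of the last group, and the function names are assumptions of the sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One pass of a tree reduction. The input is split into work-groups of
// `groupSize` work-items; each group produces one partial sum.
std::vector<float> partialSums(const std::vector<float>& input, std::size_t groupSize) {
    std::size_t numGroups = (input.size() + groupSize - 1) / groupSize;
    std::vector<float> partial(numGroups, 0.0f);
    for (std::size_t group = 0; group < numGroups; ++group) {
        // "Local memory": visible to the work-items of this group only.
        std::vector<float> local(groupSize, 0.0f);
        for (std::size_t lid = 0; lid < groupSize; ++lid) {
            std::size_t gid = group * groupSize + lid;
            local[lid] = gid < input.size() ? input[gid] : 0.0f;  // zero-pad the tail
        }
        // barrier: all loads into local memory complete before anyone reads.
        for (std::size_t stride = groupSize / 2; stride > 0; stride /= 2) {
            // Work-items with lid < stride add in a partner's value. Reads
            // (lid + stride) and writes (lid) never overlap within one step.
            for (std::size_t lid = 0; lid < stride; ++lid)
                local[lid] += local[lid + stride];
            // barrier: this step finishes before the next one starts.
        }
        partial[group] = local[0];  // work-item 0 writes the group's result
    }
    return partial;
}

// Repeats passes (separate kernel launches on a GPU) until one value remains.
float reduceSum(std::vector<float> values, std::size_t groupSize) {
    assert(groupSize >= 2 && (groupSize & (groupSize - 1)) == 0);  // power of two
    while (values.size() > 1)
        values = partialSums(values, groupSize);
    return values.empty() ? 0.0f : values[0];
}
```

Each pass shrinks the array by a factor of `groupSize`, so summing 1,000 elements with groups of 64 takes two passes: 1,000 values become 16 partial sums, then one.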

So I've written code that can do all of this, but how do I describe my problem? We call that the computation domain, and it depends on what you're executing the function over: if it's an array, your problem is one-dimensional; if it's an image, your problem is two-dimensional.

So I've specified the dimensions; what else do I need to specify? I need to say how many work-items are in a work-group and how many work-groups there are. The number of work-items per work-group times the number of work-groups describes the total problem that will be executed in parallel. Choose the dimensions that are best for your algorithm. If you're doing image processing, such as a post-processing effect, you're going to operate on textures, so use 2D. Yes, you could do it in 1D, but who wants to do all the work of turning 2D indices into 1D? You shouldn't have to. How many work-items you put in a work-group depends on the algorithm you're using: how many load and store operations you do versus how much computation, that is, the ratio of memory access to computation. So experiment with it and choose the right dimensions.
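A hypothetical CPU model, in C++ and not the Metal API, of the two-dimensional domain just described. `dispatch2D` invokes the kernel once for every work-item in every work-group, so the total number of invocations is the work-group size times the number of work-groups. `uint2` is a minimal stand-in for Metal's vector type, and deriving the global ID as work-group ID times work-group size plus local ID assumes the straightforward tiling of the grid into work-groups.

```cpp
#include <cassert>
#include <functional>

struct uint2 { unsigned x, y; };  // minimal stand-in for Metal's uint2

// Runs `kernel` once per work-item. The grid is groups.x * groups.y
// work-groups, each containing groupSize.x * groupSize.y work-items.
void dispatch2D(uint2 groups, uint2 groupSize,
                const std::function<void(uint2 gid, uint2 lid, uint2 groupId)>& kernel) {
    for (unsigned gy = 0; gy < groups.y; ++gy)
        for (unsigned gx = 0; gx < groups.x; ++gx)
            for (unsigned ly = 0; ly < groupSize.y; ++ly)
                for (unsigned lx = 0; lx < groupSize.x; ++lx) {
                    uint2 groupId{gx, gy};
                    uint2 lid{lx, ly};
                    // Global ID = work-group ID * work-group size + local ID.
                    uint2 gid{gx * groupSize.x + lx, gy * groupSize.y + ly};
                    kernel(gid, lid, groupId);
                }
}
```

With 4 by 3 work-groups of 16 by 16 work-items, the kernel runs 4 * 3 * 16 * 16 = 3,072 times and the global IDs cover a 64 by 48 grid.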

You have the flexibility because you can specify these.

All right, let's take an example. We'll look at how to take C or C++ code and turn it into a kernel, using a really, really simple example. This function takes an input array, squares each element, and writes the result out. Note that the function isn't complete yet.
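To make that transformation concrete, here is a C++ sketch (hypothetical names) of the serial function next to its data-parallel form. The kernel form drops the loop and takes the index as a parameter; in Metal that index would come from the global ID attribute, and the parameters would carry the kernel qualifier, buffer indices, and address-space qualifiers. `runSquare` only stands in for the GPU launching one work-item per index.

```cpp
#include <cassert>
#include <cstddef>

// Serial version: one loop visits every element.
void squareSerial(const float* in, float* out, std::size_t n) {
    for (std::size_t i = 0; i < n; ++i)
        out[i] = in[i] * in[i];
}

// Data-parallel version: no loop. The body runs once per ID, and each
// instance touches only its own element, so all instances are independent.
void squareKernel(const float* in, float* out, std::size_t id) {
    out[id] = in[id] * in[id];
}

// CPU stand-in for a dispatch of n work-items.
void runSquare(const float* in, float* out, std::size_t n) {
    for (std::size_t id = 0; id < n; ++id)
        squareKernel(in, out, id);
}
```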

We include the standard library just as we did for shaders, and we use the Metal namespace. Remember, we're going to execute this function for each index in parallel, but first I need to tell the compiler that this is a data-parallel function; that's what the kernel qualifier does. I'm passing pointers, so I need to specify which buffer indices they're bound to and that they're in the global address space. Remember the address spaces we talked about in the previous session? These come in as global. Then I need to tell the compiler that the ID I'm going to use as an index is unique for each instance; in this case, I call that the "global ID." Vertex shaders have a vertex ID; in compute, you have global IDs. Pretty simple, pretty straightforward. Now, what if I'm using textures? How would I write a kernel then? Let's say I want to mirror an image horizontally. Remember what I said about using the right dimensions? Since I'm operating on an image, I really want my global ID to be two-dimensional, and that's what I'm doing here. I read from my input texture at the mirrored coordinate and then write the result out. You're going to find that writing kernels is just like writing shaders: it's really easy. It's C++ code, just standard C++ with extra information so that the compiler knows what you're trying to do. Remember the built-in variables we talked about? For graphics, you had special attributes for position, front-facing, and things like that. We have something similar for kernels.
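The horizontal mirror can be sketched the same way. This C++ version is a CPU model rather than Metal code: the `Texture` struct and its `read`/`write` helpers are hypothetical stand-ins for texture access, and `(gx, gy)` plays the role of the two-dimensional global ID. The bounds check is a defensive assumption for grids that are larger than the image.

```cpp
#include <cassert>
#include <vector>

// Row-major single-channel texture; read/write mimic per-texel access.
struct Texture {
    unsigned width;
    unsigned height;
    std::vector<float> texels;
    float read(unsigned x, unsigned y) const { return texels[y * width + x]; }
    void write(float v, unsigned x, unsigned y) { texels[y * width + x] = v; }
};

// One work-item: (gx, gy) is its two-dimensional global ID.
void mirrorKernel(const Texture& in, Texture& out, unsigned gx, unsigned gy) {
    if (gx >= in.width || gy >= in.height) return;  // grid may overshoot the image
    unsigned mirroredX = in.width - 1 - gx;         // flip horizontally
    out.write(in.read(mirroredX, gy), gx, gy);
}

// CPU stand-in for dispatching one work-item per texel.
Texture mirror(const Texture& in) {
    Texture out{in.width, in.height, std::vector<float>(in.texels.size(), 0.0f)};
    for (unsigned y = 0; y < in.height; ++y)
        for (unsigned x = 0; x < in.width; ++x)
            mirrorKernel(in, out, x, y);
    return out;
}
```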

You already know what the global ID is. In my example, I'm operating on a texture, so my global ID is two-dimensional; it can be a ushort2 or a uint2, depending on what you need. That's great if I'm only operating on textures in my kernel, but what if I'm also passing in buffers? When I access my texture, I use my two-dimensional global ID, but to access a buffer, I need a one-dimensional index. We don't want you to have to write that code yourself, because that's where bugs creep in, so we provide it for you: the global linear ID. Now, remember we said that work-items work together in a work-group? Within that work-group, I need to know which instance I am. Alongside the global ID, there's the "local ID." And just as you can have a two-dimensional local ID, there's also a one-dimensional one.
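The relationships between these IDs can be written down as plain arithmetic. This C++ sketch derives the local ID from a 2D global ID and flattens both into linear IDs. The row-major flattening (x varies fastest) and all the names are assumptions for illustration, not a statement of exactly how Metal computes its built-in values.

```cpp
#include <cassert>

struct uint2 { unsigned x, y; };  // minimal stand-in for Metal's uint2

struct WorkItemIds {
    uint2 global;           // position in the whole 2D grid
    unsigned globalLinear;  // the same position flattened to 1D (for buffers)
    uint2 local;            // position inside the work-group
    unsigned localLinear;   // local position flattened to 1D
};

// Assumes row-major flattening: index = y * width + x.
WorkItemIds idsFor(uint2 gid, uint2 gridSize, uint2 groupSize) {
    WorkItemIds ids{};
    ids.global = gid;
    ids.globalLinear = gid.y * gridSize.x + gid.x;
    ids.local = uint2{gid.x % groupSize.x, gid.y % groupSize.y};
    ids.localLinear = ids.local.y * groupSize.x + ids.local.x;
    return ids;
}
```

For example, in a 64 by 48 grid with 16 by 16 work-groups, the work-item at global ID (35, 20) has local ID (3, 4), global linear ID 1315, and local linear ID 67.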

We call that the "local linear ID." You just specify these attributes on the arguments to your kernel function, and the compiler generates the right code. Finally, there's the "work-group ID." Why do I care about this? Remember that you specify the number of work-items in a work-group and the number of work-groups. Let's say I'm generating a histogram. One way to do this is to have each work-group generate a partial histogram, a histogram of just the elements it's working on, and then run another kernel that sums these partial histograms into the final histogram.
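A CPU sketch of this two-pass histogram in C++. The names, the bin count, and the requirement that input values are already bin indices are assumptions. Each work-group writes its partial histogram into one shared buffer, at an offset computed from its work-group ID, and a second pass sums the partials. On a real GPU, work-items in the same group incrementing the same bin concurrently would need atomic operations or a local-memory scheme; this serial loop hides that detail.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

constexpr std::size_t kBins = 4;  // hypothetical bin count

// Pass 1: every work-group histograms its own slice of `values`
// (each value must already be a bin index in [0, kBins)).
std::vector<unsigned> partialHistograms(const std::vector<unsigned>& values, std::size_t groupSize) {
    std::size_t numGroups = (values.size() + groupSize - 1) / groupSize;
    std::vector<unsigned> buffer(numGroups * kBins, 0u);  // one buffer for all groups
    for (std::size_t groupId = 0; groupId < numGroups; ++groupId) {
        std::size_t base = groupId * kBins;  // this group's slot, chosen by work-group ID
        for (std::size_t lid = 0; lid < groupSize; ++lid) {
            std::size_t gid = groupId * groupSize + lid;
            if (gid >= values.size()) continue;  // the last group may be partial
            assert(values[gid] < kBins);
            ++buffer[base + values[gid]];
        }
    }
    return buffer;
}

// Pass 2 (a second kernel on the GPU): sum the partial histograms bin by bin.
std::vector<unsigned> mergeHistograms(const std::vector<unsigned>& buffer) {
    std::vector<unsigned> result(kBins, 0u);
    for (std::size_t i = 0; i < buffer.size(); ++i)
        result[i % kBins] += buffer[i];
    return result;
}
```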

That means I need to write the partial histograms to some buffer. I don't want to create a buffer for each work-group; I just want to allocate one buffer. So which index do I use when writing? My work-group ID. That's how you'd use it. All right, let's see how to execute kernels: what commands do I encode to send work to the GPU? We'll take a post-processing example that applies highlights, shadows, vignette effects, and things like that, and I'll demo it for you. Let's look at the source. I won't go into the details; this is just a high-level picture of what's happening. It takes a number of images and some parameters. It takes the global ID and transforms it with an affine matrix, samples from an image, applies a post-processing effect, and then writes to an image. And you're going to say, "That looks just like a fragment shader." Well, in this case, yes, it does.
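A C++ sketch of the structure of such a post-processing kernel, as a CPU model; the demo's actual source isn't shown in detail. The 2x3 `Affine` layout, nearest-neighbour clamp-to-edge sampling, and the simple gain used as the "effect" are all placeholder assumptions. The shape to notice is global ID, then affine transform, then sample, then effect, then write.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct Image { int width, height; std::vector<float> px; };  // row-major grayscale

// 2x3 affine transform: (x, y) -> (a*x + b*y + tx, c*x + d*y + ty).
struct Affine { float a, b, tx, c, d, ty; };

// Nearest-neighbour sample with clamp-to-edge addressing.
float sampleNearest(const Image& img, float x, float y) {
    int ix = static_cast<int>(std::floor(x + 0.5f));
    int iy = static_cast<int>(std::floor(y + 0.5f));
    ix = std::max(0, std::min(ix, img.width - 1));
    iy = std::max(0, std::min(iy, img.height - 1));
    return img.px[iy * img.width + ix];
}

// One work-item: transform the global ID, sample, apply the effect, write.
void postProcessKernel(const Image& in, Image& out, const Affine& m, float gain, int gx, int gy) {
    float sx = m.a * gx + m.b * gy + m.tx;
    float sy = m.c * gx + m.d * gy + m.ty;
    float v = sampleNearest(in, sx, sy);
    out.px[gy * out.width + gx] = std::min(1.0f, v * gain);  // placeholder effect
}

// CPU stand-in for dispatching one work-item per output pixel.
Image postProcess(const Image& in, const Affine& m, float gain) {
    Image out{in.width, in.height, std::vector<float>(in.px.size(), 0.0f)};
    for (int gy = 0; gy < out.height; ++gy)
        for (int gx = 0; gx < out.width; ++gx)
            postProcessKernel(in, out, m, gain, gx, gy);
    return out;
}
```

With the identity matrix this reduces to a per-pixel effect; a matrix such as `{-1, 0, width - 1, 0, 1, 0}` mirrors the image as part of the same pass.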

But remember, the number of work-items times the number of work-groups makes up the total problem domain. There is no requirement that each work-item operate on only one pixel; it can operate on many pixels. Depending on what your code does, again that ratio of computation to memory access, you may find it's better for each work-item to do more work rather than less.

In fact, we modified this kernel. We had a crack team working on it, and they changed it to process four pixels per work-item.

And it was actually faster. We like faster, don't we? So in effect, each work-group is now doing the work of four. We don't need to look at this code, but it's straightforward: it's a loop over the four pixels that the compiler is going to unroll. The point is that there's no requirement of a one-to-one mapping; you can have a one-to-many mapping. That gives you a lot of flexibility, and it can really help you tune the data-parallel functions you want to execute on the GPU.
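A C++ sketch of the one-to-many mapping, comparing one pixel per work-item with four pixels per work-item. It is a CPU model, not the team's kernel: whether each work-item takes four consecutive pixels (as assumed here) or, say, a 2x2 block isn't stated, and `effect` is a placeholder. Both versions must produce identical output; only the number of work-items launched changes.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

constexpr std::size_t kPixelsPerItem = 4;

// Placeholder per-pixel effect (hypothetical): invert a value in [0, 1].
inline float effect(float v) { return 1.0f - v; }

// One pixel per work-item: in.size() work-items.
void runOnePerItem(const std::vector<float>& in, std::vector<float>& out) {
    for (std::size_t gid = 0; gid < in.size(); ++gid)
        out[gid] = effect(in[gid]);
}

// Four pixels per work-item: ceil(n / 4) work-items, each handling a run of 4.
// Returns the number of work-items that were "launched".
std::size_t runFourPerItem(const std::vector<float>& in, std::vector<float>& out) {
    std::size_t items = (in.size() + kPixelsPerItem - 1) / kPixelsPerItem;
    for (std::size_t gid = 0; gid < items; ++gid) {
        // Fixed trip count, so a compiler can fully unroll this loop.
        for (std::size_t k = 0; k < kPixelsPerItem; ++k) {
            std::size_t i = gid * kPixelsPerItem + k;
            if (i < in.size()) out[i] = effect(in[i]);  // guard the ragged tail
        }
    }
    return items;
}
```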

All right, let's talk about the compute command encoder. This is what you use to send commands that execute kernels on the GPU. Just as there's a RenderCommandEncoder for graphics, for compute we have the compute command encoder. But first things first: I compiled my kernel, so I have a Metal library. I load that library, and then I load the kernel function from it.

The next thing I do is create my compute pipeline state. Just as we had the render pipeline state, there's a compute pipeline state, and all it takes in this case is the function.

Compute doesn't have a lot of state.

Remember that the Metal library holds your source compiled to Metal IR, an LLVM-based intermediate representation. When you create a new compute pipeline state, we take that IR and compile it into the actual binary that the GPU executes. Then I create my compute command encoder.

I'm ready to send commands now.

The first thing I do is set my compute pipeline state. Then I set the resources my kernel uses: the buffers and textures.

Now I'm ready to encode the command that executes the kernel. To do that, I first need to set the number of work-items in a work-group and how many work-groups there are. In this example, I use 16 by 16: remember, this is a two-dimensional problem, so I give it a two-dimensional work-group size. Since I know the output image dimensions and my work-group size, I can calculate how many work-groups there will be.
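The work-group count is a rounded-up division in each dimension. This C++ helper is a sketch with hypothetical names; in Metal's shipping API, the resulting count and the work-group size are what you pass to `dispatchThreadgroups:threadsPerThreadgroup:` on the compute command encoder.

```cpp
#include <cassert>

struct Size2 { unsigned width, height; };

// Work-groups needed to cover `image` with work-groups of `groupSize`.
// Rounds up, so partial tiles along the right and bottom edges are covered too.
Size2 workGroupCount(Size2 image, Size2 groupSize) {
    return Size2{(image.width + groupSize.width - 1) / groupSize.width,
                 (image.height + groupSize.height - 1) / groupSize.height};
}
```

For a 1920 by 1080 image with 16 by 16 work-groups this gives 120 by 68 work-groups. Since 68 * 16 = 1088, the grid covers eight rows beyond the image, so the kernel should skip work-items whose global ID falls outside the output.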

Now I encode the command to execute the kernel, specifying two things: the work-group size and the number of work-groups. And then I'm done encoding.

At this point, the commands have been encoded, but the GPU hasn't received them. As we discussed earlier when talking about sending commands, you have to commit the command buffer; when you do that, the GPU starts executing this kernel. Let me show you a demo of these filtering operations.

All right, so that's applying highlights, and we can also do shadows. That's the highlights and shadows filter, but I can also do a toon filter. What this does is take your input image, convert it into what's called the Lab color space, which approximates human vision, apply a bilateral filter, then apply the toon filter, and finally convert the result back to RGB. Or let's say I want to sharpen the image, so I apply a sharpen filter.
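A sharpen filter is another per-pixel kernel. The demo's implementation isn't shown, so this C++ sketch assumes a common 3x3 sharpening kernel (centre weight 5, the four edge neighbours at -1) with clamp-to-edge reads. The weights sum to 1, so flat regions pass through unchanged while local differences are amplified; the output is left unclamped to keep the arithmetic visible.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

struct Gray { int width, height; std::vector<float> px; };  // row-major

// Clamp-to-edge read.
float texel(const Gray& img, int x, int y) {
    x = std::max(0, std::min(x, img.width - 1));
    y = std::max(0, std::min(y, img.height - 1));
    return img.px[y * img.width + x];
}

// One work-item: weights [0 -1 0; -1 5 -1; 0 -1 0] around (x, y).
float sharpenKernel(const Gray& in, int x, int y) {
    return 5.0f * texel(in, x, y)
         - texel(in, x - 1, y) - texel(in, x + 1, y)
         - texel(in, x, y - 1) - texel(in, x, y + 1);
}

// CPU stand-in for dispatching one work-item per pixel.
Gray sharpen(const Gray& in) {
    Gray out{in.width, in.height, std::vector<float>(in.px.size(), 0.0f)};
    for (int y = 0; y < in.height; ++y)
        for (int x = 0; x < in.width; ++x)
            out.px[y * in.width + x] = sharpenKernel(in, x, y);
    return out;
}
```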

You can see the image being adjusted; hopefully you can see regions of the image getting sharper. These are some of the filters, and they're really, really easy to write, because all you're doing is writing your algorithm as a set of functions and saying, "Execute this function over this problem domain." It's really simple to think about, so you can focus on the algorithm; that's what data-parallel computing lets you do. That's all I'm going to say about data-parallel computing. Next, Serhat is going to come up and show you the amazing tools we have and how you can debug and profile Metal applications in Xcode. Serhat?

Well, thank you, Aaftab, and hello everyone.

Now I'm going to show off the great developer tools we have in Xcode for your Metal apps.

We'll focus on some common problem areas, such as state setup, shader compilation, and performance, and on how you can use the tools to address them.

I have the same app that Gokhan showed you earlier. The shadow buffer pass has been modified to use percentage-closer filtering for softer shadows, but my app has a few issues that I'm going to fix live.

So let's go ahead and run it. As you can see, I've got some compile errors, and Xcode is pointing right at my G-buffer shader code. One of the great things about Metal is its support for precompiled shaders right here in Xcode.

In addition to faster initialization for my app, among other benefits, I also get to see compile errors and warnings right here in Xcode at build time rather than at run time.

Let's go in and fix this real quick.

Let's define the missing variables and relaunch the app. You can imagine this offers a much more productive workflow for shader development.

Using it is also easy: all you have to do is add your .metal shader files to your Xcode project, and Xcode takes care of the rest. Ok, so my app is running now, but there's something wrong with what I'm rendering.

Now I'm going to take a frame capture and bring up the frame debugger so that you can see the issue as well. To do that, I click this camera icon in the debug bar to trigger a capture.

When I trigger a capture, the frame debugger records all the Metal commands your application issues in the frame, along with all the resources it uses, such as buffers, textures, and shaders. With these, we can reconstruct the frame, and you can replay it up to any particular Metal command you choose.

This is what I was seeing: my scene is completely in shadow, and I'm missing my directional light.

So let's use the frame debugger to figure out the problem.

Well the first thing I want to do is I want to check my commands and make sure everything is in order.

For that, I'm going to use the debug navigator here on the left-hand side of the Xcode window.

This is where the debugger shows all the Metal commands that were executed in the frame.

You can see that it also reflects the natural hierarchy of command encoders within command buffers, which makes it a lot easier to navigate your frame.

One thing to keep in mind, though: the order you see here is the order in which the GPU executes these commands, so it's a bit different from CPU debugging. You can also see that most of my objects have human-readable names, not just raw pointer values.

Many Metal objects have a label property that lets you set these names, and I highly recommend taking advantage of it; it's a great aid in debugging.

Within the encoders, I can further annotate my command stream using debug groups, shown in the navigator as these folder icons.

I do this using the push and pop debug group APIs. Debug groups are great for bracketing related commands together in the navigator.

Now let's investigate the G-buffer pass and figure out what's wrong with the shadows. I'll click on this debug group to navigate there. As I navigate my frame, Xcode's main editor area updates to show all the attachments for the current framebuffer.

The assistant editor is where I see all my textures, buffers and functions.

Currently it's in Bound GPU Objects mode, so it only shows me the objects bound to the current command encoder.

All right, I can see that my G-buffer attachments look more or less correct, except for the color.

But if I look at the assistant editor, I see that the shadow texture bound to my fragment shader, labeled "shadow" here, is all black.

That explains why my scene was completely in shadow, but it also means my shadow buffer pass isn't working properly, so let's go there and investigate.

So I'm going to just expand this and click on the encoder itself.

Now looking at this I can see right away that the depth attachment for this pass isn't cleared when it starts.

As Gokhan explained earlier, you control your framebuffer attachment clears with the load action property of the attachment.

Not only that, but this doesn't look like it was rendered from the light's point of view either. Yet if I step through my frame to see my geometry, that looks correct.

So let's look at the framebuffer state and figure out what the problem is there.

I can inspect the state of any Metal object using the variables view here at the bottom. I'll expand that a little. On the right-hand side, I have a list of all my Metal objects, but I like to use the Auto view you see on the left. This is where you see only the relevant objects and their state for the currently selected command.

So I know what I'm looking for.

My framebuffer state is going to be under the RenderCommandEncoder, so I'll disclose that and search for "framebuffer." And as I suspected, my load action is not set to clear. Not only that, the attached texture here is labeled "depth," whereas the texture I was looking at earlier was labeled "shadow." If I disclose the framebuffer setup I prepared earlier, I can see that I already have a shadow framebuffer, but I'm using the debug framebuffer here. That explains why I was getting a blank shadow buffer texture. I can quickly fix this by using the debug navigator to take me straight to the problem.

And I can do that by going back to the navigator.

You set up your framebuffer when you create your RenderCommandEncoder. I can disclose the backtrace where I create the RenderCommandEncoder and use it to jump straight to the source.

So let me go ahead and fix this real quick.

It should be "shadow." Now I'll stop my app, relaunch it, and make sure everything is running properly.

All right, my scene looks great now, but looking at the frames-per-second gauge here, I can see that I'm not getting the performance I expect. I'll click on the gauge to bring up the graphics performance report.

Now I'm getting about 35 frames per second; I was getting 60 earlier.

Looking at the report, I can tell my GPU is heavily utilized, with most of the work coming from the renderer, that is, the fragment shader stage.

To get a more detailed picture, let's take another frame capture and see what the Shader Profiler has to say. The Shader Profiler is a sampling profiler: it automatically and repeatedly runs all the shaders in my frame to collect samples.

This may take a bit longer for a more complicated scene, but that small amount of time is well worth the investment as it offers an in-depth performance analysis that I otherwise wouldn't be able to get.

When the Shader Profiler is done, I get this program performance table in the report. It breaks down the performance cost of every render pipeline in the frame, along with all the draw calls that use those pipelines. I can disclose a row so you can see that.

Another great way to look at this is to again go back to the navigator and switch it to use the "View Frame by Program" mode.

The nice thing about this is that I can navigate my frame and see my shader profiler results at the same time.

Let's look at the most expensive draw call and the most expensive pipeline. I'll hide the draw call highlight so that I can see my attachments better, and in the assistant editor I'll bring up that costly fragment shader and make some more room here.

Oops.

Now, as I look at my code, I can see line-by-line profiling data right alongside it in the editor. If I keep scrolling down, I see that by far the most expensive part of my shader is the shadow sampling loop.

This many samples is overkill. Let's reduce it to a 2 by 2 grid and save with Command-S. When I do that, my shader is recompiled and my changes take effect immediately.
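Why the sample count dominates: percentage-closer filtering compares the fragment's light-space depth against an n by n grid of shadow-map texels and averages the pass/fail results, so the per-fragment cost grows with n squared. The app's shader isn't shown and the original grid size isn't stated, so this C++ sketch is a generic CPU model of PCF; the names, clamp-to-edge addressing, and the "lit if fragment depth is no greater than the stored depth" convention are assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

struct ShadowMap { int width, height; std::vector<float> depth; };  // row-major

// Returns the fraction of the n x n neighbourhood in which the fragment is lit
// (1 = fully lit, 0 = fully in shadow). Performs n * n depth comparisons.
float pcf(const ShadowMap& sm, int x, int y, float fragmentDepth, int n) {
    int lit = 0;
    int start = -(n / 2);  // n = 2 samples offsets {-1, 0}; n = 4 samples {-2 .. 1}
    for (int dy = 0; dy < n; ++dy)
        for (int dx = 0; dx < n; ++dx) {
            int sx = std::max(0, std::min(sm.width - 1, x + start + dx));
            int sy = std::max(0, std::min(sm.height - 1, y + start + dy));
            if (fragmentDepth <= sm.depth[sy * sm.width + sx]) ++lit;
        }
    return static_cast<float>(lit) / static_cast<float>(n * n);
}
```

Going from an n by n grid to 2 by 2 reduces the comparisons per fragment from n squared to 4, at the cost of a harder shadow edge.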

And once the shader profiler is done analyzing, I'm going to get updated performance numbers as well.

My attachments look more or less unaffected; that's good. And now I'm spending far less time in the expensive part of my shader, which is great. If I scroll all the way to the end, this is my end result.

Now I can go ahead and resume my app and I see a nice, smooth 60 frames per second. Excellent.

With that, my work here is done, and it's time to hand the stage back to Aaftab. Thank you.

All right, let's summarize. Gokhan took you deeper into how Metal works. We talked about how to structure your application: the descriptors and state objects, how to create them, and when to do what. There are things you do once, like creating your command queue;

things you create whenever you need them, like resources; and things you must create every frame and every pass. And he showed you how to do multi-pass encoding with Metal.

Then I gave you a brief teaser on data-parallel computing. And finally, we showed you the amazing tools we have for Metal that you can use to write amazing applications.

That's it. For more information, or with any questions, contact Philip Iliescu and Allan Schaffer, our evangelists; their email addresses are on the slide.

Documentation is on our website, and you can post questions on the developer forums.

Related sessions: the first two Metal sessions, which took place this morning.

Thank you guys.

[ Applause ]
