iOS Memory Deep Dive

Discover how memory graphs can be used to get a close up look at what is contributing to an app's memory footprint. Understand the true memory cost of an image. Learn some tips and tricks for reducing the memory footprint of an app.

Hi, everyone. My name's Kyle. I'm a software engineer at Apple and today we'd like to take a deep dive into iOS memory.

Now, just as a quick note, even though this is focused on iOS, a lot of what we're covering will apply to other platforms as well.

So the first thing we'd want to talk about is, why reduce memory? And when we want to reduce memory, really, we're talking about reducing our memory footprint. So we'll talk about that.

We have some tools for how to profile a memory footprint.

We have some special notes on images, optimizing when in the background. And then, we'll wrap it all up with a nice demo.

So why reduce memory? The easy answer is users have a better experience. Not only will your app launch faster, the system will perform better.

Your app will stay in memory longer. Other apps will stay in memory longer. Pretty much everything's better.

Now, if you look to your left and look to your right, you're actually helping those developers out as well by reducing your memory.

Now, we're talking about reducing memory, but really, it's the memory footprint. Not all memory is created equal. What do I mean by that? Well, we need to talk about pages. Not that type of pages.

We're talking about pages of memory. Now, a memory page is given to you by the system, and it can hold multiple objects on the heap. And some objects can actually span multiple pages. They're typically 16K in size, and they can come in clean or dirty.

The memory use of your app is actually the number of pages multiplied by the page size.
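As a rough sketch of that arithmetic, the snippet below counts how many pages a buffer touches. The 16 KiB page size is the typical figure from the talk, not a value queried from the system, and `pagesSpanned` is a hypothetical helper written for this illustration.

```swift
// Assumed page size: 16 KiB, the "typical" size mentioned in the talk.
let assumedPageSize = 16 * 1024

/// Hypothetical helper: number of pages touched by a buffer of `byteCount`
/// bytes that begins `startOffset` bytes into its first page.
func pagesSpanned(byteCount: Int, startOffset: Int = 0, pageSize: Int = assumedPageSize) -> Int {
    guard byteCount > 0 else { return 0 }
    let firstPage = startOffset / pageSize
    let lastPage = (startOffset + byteCount - 1) / pageSize
    return lastPage - firstPage + 1
}

// 20,000 four-byte integers occupy 80,000 bytes.
let arrayBytes = 20_000 * MemoryLayout<Int32>.size

// A page-aligned buffer fits in 5 pages; one that starts mid-page spans 6.
let alignedPages = pagesSpanned(byteCount: arrayBytes)
let unalignedPages = pagesSpanned(byteCount: arrayBytes, startOffset: 8_192)

// Memory use is counted in whole pages: page count times page size.
let alignedFootprint = alignedPages * assumedPageSize
print(alignedPages, unalignedPages, alignedFootprint)
```

Because usage is counted in whole pages, the cost of a buffer depends on where it starts as well as how large it is.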

So as an example of clean and dirty pages, let's say I allocate an array of 20,000 integers. The system may give me six pages.

Now, these pages are clean when I allocate them. However, when I start writing to the data buffers, for example, if I write to the first place in this array, that page has become dirty.

Similarly, if I write to the last place in the buffer, the last page becomes dirty as well.

Note that the four pages in between are still clean because the app has not written to them yet. Another interesting thing to talk about is memory-mapped files. These are files that are on disk but loaded into memory.

Now, if you use read-only files, these are always going to be clean pages.

The kernel actually manages when they come in and off of disk into RAM.
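One way to ask for a memory-mapped, read-only view of a file from Swift is Foundation's `Data(contentsOf:options:)` with the `.alwaysMapped` reading option. The sketch below writes a throwaway 50 KB file and maps it back in. The helper name and the temporary file are assumptions made for the illustration, and whether the resulting pages stay clean still depends on never writing to them.

```swift
import Foundation

/// Hypothetical helper: writes `byteCount` bytes to a temporary file, maps the
/// file back in read-only, and returns how many bytes the mapping exposes.
func mappedByteCount(ofTemporaryFileWithSize byteCount: Int) -> Int? {
    let url = FileManager.default.temporaryDirectory
        .appendingPathComponent("mapped-example-\(UUID().uuidString).bin")
    let payload = Data(repeating: 0xAB, count: byteCount)
    guard (try? payload.write(to: url)) != nil else { return nil }
    defer { try? FileManager.default.removeItem(at: url) }

    // .alwaysMapped asks Foundation to map the file rather than copy it
    // into freshly allocated (dirty) heap memory.
    guard let mapped = try? Data(contentsOf: url, options: .alwaysMapped) else { return nil }
    return mapped.count
}

let mappedCount = mappedByteCount(ofTemporaryFileWithSize: 50 * 1024)
print(mappedCount ?? -1)
```

`.mappedIfSafe` is the more conservative option when the file might live on a volume that can disappear.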

So a good example of this would be a JPEG. If I have a JPEG that's, say, 50 kilobytes in size, when it's memory mapped in, that actually is mapped into four pages of memory, give or take. Now, the fourth page is actually not completely full, so it can be used for other things. Memory's a little bit tricky like that. But those three pages before will always be purgeable by the system. And when we talk about a typical app, its memory profile has a dirty, a compressed, and a clean segment.

Let's break these down.

So clean memory is data that can be paged out. Now, these are the memory-mapped files we just talked about. Could be images, data blobs, training models. They can also be frameworks.

So every framework has a __DATA_CONST section.

Now, this is typically clean, but if you do any runtime shenanigans like swizzling, that can actually make it dirty. Dirty memory is any memory that has been written to by your app.

Now, these can be objects, anything that has been malloced -- strings, arrays, et cetera.

It can be decoded image buffers, which we'll talk about in a bit. And it can also be frameworks.

Frameworks have a __DATA section and a __DATA_DIRTY section as well.

Now, those are always going to count towards dirty memory.

And as you might have noticed, I brought up frameworks twice. Yes, frameworks that you link actually use memory and dirty memory. Now, this is just a necessary part of linking frameworks, but if you maintain your own framework, cutting back on singletons and global initializers is a great way to reduce the amount of dirty memory it uses, because a singleton's always going to be in memory after it's been created, and these initializers are run whenever the framework is linked or the class is loaded.

Now, compressed memory is pretty cool. iOS doesn't have a traditional disk swap system.

Instead, it uses a memory compressor. This was introduced in iOS 7.

Now, the memory compressor will take unaccessed pages and squeeze them down, which can actually create more space.

But on access, the compressor will then decompress them so the memory can be read.

Let's look at an example.

Say I have a dictionary that I'm using for caching. Now, it uses up three pages of memory right now, but if I haven't accessed it in a while and the system needs some space, it can actually squeeze it down into one page. Now, this is now compressed, and I'm actually saving space: I've got two extra pages.

So if, at some point in the future, I access it, it will grow back.

So let's talk about memory warnings for a second.

The app is not always the cause of a memory warning.

So if you're on a low-memory device and you get a phone call, that could trigger a memory warning, and you're out. So don't necessarily assume that a memory warning is caused by your app.

So this compressor complicates freeing memory because, depending on what's been compressed, you can actually use more memory than before. So instead, we recommend policy changes, such as maybe not caching anything for a little bit or kind of throttling some of the background work when a memory warning occurs.

Now, some of us may have this in our apps.

We get a memory warning, and we decide to remove all objects from our cache. Going back to that example of the compressed dictionary, what's going to happen? Well, since I'm now accessing that dictionary, I'm now using up more pages than I was before.

This is not what we want to do in a memory-constrained environment.

And because I'm removing all the objects, I'm doing a lot of work just to get it back down to one page, which is what it was when it was compressed.

So we really got to be careful about memory warnings in general.
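A minimal sketch of the "policy change" idea: rather than touching (and so decompressing) the cache to empty it, stop adding to it for a while. `CachingPolicy`, its method names, and the 30-second pause are all hypothetical; in an app, `memoryWarningReceived` would be called from whatever memory-warning hook is already in place. The class is not thread-safe as written.

```swift
import Foundation

/// Hypothetical policy object: after a memory warning, new cache entries are
/// refused for `pauseInterval` seconds. Existing entries are left untouched,
/// so compressed pages are not decompressed just to be thrown away.
final class CachingPolicy {
    private var pausedUntil = Date.distantPast
    let pauseInterval: TimeInterval

    init(pauseInterval: TimeInterval = 30) {
        self.pauseInterval = pauseInterval
    }

    /// Call this from the app's memory-warning handler.
    func memoryWarningReceived(at now: Date = Date()) {
        pausedUntil = now.addingTimeInterval(pauseInterval)
    }

    /// Ask this before storing a freshly computed value in the cache.
    func shouldCacheNewEntries(at now: Date = Date()) -> Bool {
        return now >= pausedUntil
    }
}

// Dates are injected so the behavior can be checked without waiting.
let policy = CachingPolicy(pauseInterval: 30)
let warningTime = Date(timeIntervalSince1970: 0)
policy.memoryWarningReceived(at: warningTime)
let cachesDuringPause = policy.shouldCacheNewEntries(at: warningTime.addingTimeInterval(10))
let cachesAfterPause = policy.shouldCacheNewEntries(at: warningTime.addingTimeInterval(31))
print(cachesDuringPause, cachesAfterPause)
```

The same shape works for throttling background work: check the policy before scheduling more of it.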

Now, this brings up an important point about caching.

When we cache, we are really trying to save the CPU from doing repeated work, but if we cache too much, we're going to use up all of our memory, and that can have problems with the system.

So try to remember that there's a memory compressor, and get that balance just right between what to cache and what to recompute.

One other note is that if you use an NSCache instead of a dictionary, that's a thread-safe way to store cached objects. And because of the way NSCache allocates its memory, it's actually purgeable, so it works even better in a memory-constrained environment. Going back to our typical app with those three sections, when we talk about the app's footprint, we're really talking about the dirty and compressed segments. Clean memory doesn't really count.
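Returning to the NSCache suggestion for a moment, here is a minimal sketch of swapping a dictionary cache for one backed by `NSCache`. The wrapper type `ThumbnailCache`, the key names, and the 8 MB cost limit are assumptions for illustration; `NSCache` treats limits as hints and may evict entries at any time, so callers must always handle a miss.

```swift
import Foundation

/// Hypothetical wrapper around NSCache for storing encoded thumbnails.
final class ThumbnailCache {
    private let cache = NSCache<NSString, NSData>()

    init(totalCostLimit: Int) {
        // The limit is advisory; NSCache decides when and what to evict.
        cache.totalCostLimit = totalCostLimit
    }

    func store(_ data: Data, forKey key: String) {
        // Use the byte count as the cost so large entries are evicted sooner.
        cache.setObject(NSData(data: data), forKey: NSString(string: key), cost: data.count)
    }

    func data(forKey key: String) -> Data? {
        guard let stored = cache.object(forKey: NSString(string: key)) else { return nil }
        return Data(referencing: stored)
    }
}

let thumbnails = ThumbnailCache(totalCostLimit: 8 * 1024 * 1024)
thumbnails.store(Data(repeating: 0x01, count: 1_024), forKey: "sun")

// A hit is not guaranteed, so the caller recomputes on a miss.
let cachedSun = thumbnails.data(forKey: "sun")
let missing = thumbnails.data(forKey: "pluto")
print(cachedSun?.count ?? 0, missing == nil)
```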

Now, every app has a footprint limit.

Now, this limit's fairly high for an app, but keep in mind that, depending on the device, your limit will change. So you won't be able to use as much memory on a 1-gigabyte device as you would on a 4-gigabyte device. Now, there's also extensions. Extensions have a much lower footprint limit, so you really need to be even more mindful about that when you are using an extension.

When you exceed the footprint limit, you will get an exception.

Now, this exception is the EXC_RESOURCE exception.

So what I'd like to do now is invite up James to talk about how we can profile our footprint.

Thanks, James. Thank you.

Thanks, Kyle. All right. I'm James. I'm a software engineer here at Apple. And I'd like to introduce some of the more advanced tools we have for profiling and investigating your application's footprint.

You're all probably already familiar with the Xcode memory gauge. It shows up right here in the debug navigator, and it's a great way for quickly seeing the memory footprint of your app. In Xcode 10, it now shows you the value that the system grades you against, so don't be too concerned if this looks different from Xcode 9.

So I was running my app in Xcode, and I saw that it was consuming more memory.

What tool should I reach for next? Well, Instruments, obviously.

This provides a number of ways to investigate your app's footprint.

You're probably already familiar with Allocations and Leaks. Allocations profiles the heap allocations made by your app, and Leaks will check for memory leaks in a process over time. But you might not be so familiar with the VM Tracker and the Virtual Memory Trace. If you remember back to when Kyle was talking about the primary classes of memory in iOS, he talked about dirty and compressed memory. Well, the VM Tracker provides a great way to profile this.

It has separate tracks for dirty and swapped, which, in iOS, is compressed memory, and tells you some information about the resident size.

I find this really useful for investigating the dirty memory size of my app. Finally, in Instruments, there's the Virtual Memory Trace.

This provides a deep view into the virtual memory system's performance with regards to your app.

I find the By Operation tab really useful here.

It gives you a virtual memory system profile and will show you things like page cache hits and page zero fills for the VM.

Kyle mentioned earlier that if you approach the memory limit of the device, you'll receive an EXC_RESOURCE exception. Well, if you're running your app now in Xcode 10, Xcode will catch this exception and pause your app for you. This means you can start the memory debugger and begin your investigation right from there. I think this is really, really useful. The memory debugger for Xcode was shipped in Xcode 8, and it helps you track down object dependencies, cycles, and leaks. And in Xcode 10, it's been updated with this great new layout. It's so good for viewing really large Memgraphs.

Under the hood, Xcode uses the Memgraph file format to store information about the memory use of your app. What you may not have known is that you can use Memgraphs with a number of our command-line tools.

First, you need to export a Memgraph from Xcode. This is really simple.

You just click the Export Memgraph button in the File menu and save it out.

Then, you can pass that Memgraph to the command-line tool instead of the target and you're good to go.

So I was running my app in Xcode 10, and I received a memory resource exception. This isn't cool. I should probably take a Memgraph and investigate this further. But what do I do next? Well, obviously to the terminal.

The first tool I often reach for is vmmap. It gives you a high-level breakdown of memory consumption in your app by printing the VM regions that are allocated to the process.

The summary flag is a great way to get started.

It prints details of the size in memory of the region, the amount of the region that's dirty, and the amount of memory that's swapped, which on iOS means compressed. And remember, it's this dirty and swapped memory that's really important here.

One important point of note is the swap size gives you the precompressed size of your data, not what it compressed down to.

If you really need to dig deeper and you want more information, you can just run vmmap against the Memgraph, and you'll get the contents of all of the regions. It'll start by printing the nonwritable regions, like your program's text or executable code, and then the writable regions, so the data sections, for instance. This is where your process heap will be. One really cool aside to all of this is that all these tools work really well with standard command-line utilities. So for example, I was profiling my app in VM Tracker the other day, and I saw an increase in the amount of dirty memory. So I took a Memgraph, and I wanted to find out: are any frameworks or libraries I'm linking to contributing to this dirty data? So here I've run vmmap against the Memgraph I took.

And I've used the pages flag. This means that vmmap will print out the number of pages instead of just raw bytes. I then piped that into grep, where I'm searching for dylib, so any dynamic library here.

And then, finally, I pipe that into a super simple awk script to sum up the dirty column and then print it out as the number of dirty pages at the end.

I think this is super cool, and I use it all the time. It allows you to compose really powerful debugging workflows for you and your teams. Another command-line utility that macOS developers might be familiar with already is leaks.

It tracks objects in the heap that aren't rooted anywhere at runtime. So remember, if you see an object in leaks, it's dirty memory that you can never free. Let's look at a leak in the memory debugger.

Here I've got 3 objects, all holding strong references to each other, creating a classic retain cycle.

So let's look at the same leak in the leaks tool. This year, leaks has been updated to not only show the leaked objects, but also the retain cycles that they belong to. And if malloc stack logging was enabled on the process, we'll even give you a backtrace for the root node. One question I often ask myself is, where's all my memory going? I've looked in vmmap, and I see the heap is really large, but what do I do about it next? Well, the heap tool provides all sorts of information about object allocations in the process heap. It helps you track down really large allocations or just lots of the same kind of object.

So here I've got a Memgraph that I took when Xcode caught a memory resource exception, and I want to investigate the heap. So I've passed it to heap, which is giving me information about the class name for each of those objects, the number of them, and some information about their average size and the total size for that class of object. So here I see lots and lots of small objects, but I don't think that's really the problem here.

By default, heap will sort by count.

But instead, what I want to see is the largest objects, not the most numerous, so passing the -sortBySize flag to heap will cause it to sort by size.

Here I see a few of these enormous NSConcreteData objects. I should probably attach this output and the Memgraph to a bug report, but that's not going far enough, really. I should figure out where these came from.

First, I need to get the address for one of these NSConcreteData objects.

For that, there's the -addresses flag in heap. When you pass the -addresses flag to heap with the name of a class, it'll give you an address for each instance on the heap.

So now I have these addresses, I can find out where one of these came from.

This is where malloc stack logging comes in handy.

When enabled, the system will record a backtrace for each allocation. These logs get captured up when we record a Memgraph, and they're used to annotate existing output for some of our tools.

You can enable it really easily in the scheme editor in the diagnostics tab.

I'd recommend using the live allocations option if you're going to use it with a Memgraph. So my Memgraph was captured with malloc stack logging enabled.

Now, to find the backtrace for the allocation. This is where malloc_history is helpful.

You just pass malloc_history the Memgraph and an address for an instance in memory, and, if there was a backtrace captured for it, it'll give it to you. So here I've taken the address for one of those really big NSConcreteDatas. I've passed it to malloc_history, and I've got a backtrace. And, interestingly, it looks like my NoirFilter's apply method here is creating that huge NSData.

I should probably attach this and the Memgraph to a bug report and get someone else to look at it. These are just a few of the ways you can deeply investigate the behavior of your app. So when faced with a memory problem, which tool do you pick? Well, there are 3 ways to think about this. Do you want to see object creation? Do you want to see what references an object or address in memory? Or do you just want to see how large an instance is? If malloc stack logging was enabled when your process was started, malloc_history can help you find the backtrace for that object.

If you just want to see what references an object in memory, you can use leaks and a bunch of options that it has in the man page to help you there. And finally, if you just want to see how large a region or an instance is, vmmap and heap are the go-to tools. As a jumping off point, I'd recommend just running vmmap with the summary flag against a Memgraph taken of your process and then follow the thread down there. Now, I'd like to hand back to Kyle, who's going to talk about what can be some of the largest objects in iOS apps, and that's images.

Kyle? Thanks, James.

So images.

The most important thing about images to remember is that the memory use is related to the dimensions of the image, not its file size.

As an example, I have this really beautiful picture that I want to use as a wallpaper for an iPad app.

It measures 2048 by 1536, and the file on disk is 590 kilobytes. But how much memory does it use really? 10 megabytes. 10 megs, that's huge! And the reason why is because multiplying the number of pixels wide by high, 2048 by 1536, by 4 bytes per pixel gets you about 10 megabytes.
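The multiplication can be checked directly. This small sketch uses only the numbers quoted above; `decodedByteCount` is a hypothetical helper. Worked out exactly, the product is 12,582,912 bytes (12 MiB), somewhat above the rounded "about 10 megabytes" figure, and either way it dwarfs the 590-kilobyte file.

```swift
/// Hypothetical helper: size of a fully decoded bitmap in bytes.
func decodedByteCount(width: Int, height: Int, bytesPerPixel: Int) -> Int {
    return width * height * bytesPerPixel
}

// The wallpaper from the talk: 2048 x 1536 pixels at 4 bytes per pixel.
let wallpaperBytes = decodedByteCount(width: 2048, height: 1536, bytesPerPixel: 4)

// The JPEG on disk is roughly 590 KB; compare it with the decoded size.
let fileBytes = 590 * 1024
let expansionFactor = Double(wallpaperBytes) / Double(fileBytes)

print(wallpaperBytes)                 // 12582912
print(wallpaperBytes / (1024 * 1024)) // 12
print(expansionFactor > 20)           // true
```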

So why is it so much larger? Well, we have to talk about how images work on iOS. There's a load, a decode, and a render phase.

So the load phase takes this 590-kilobyte JPEG file, which is compressed, loads it into memory.

The decode converts that JPEG file into a format that the GPU can read. Now, this needs to be uncompressed, which makes it 10 megabytes.

Once it's been decoded, it can be rendered at will. For more information on images and how to kind of optimize them, I'd recommend checking out the Image and Graphics Best Practices session from earlier this week. Now, the 4 bytes per pixel come from the sRGB format.

This is typically the most common format for images in graphics. It's 8 bits per channel, so you have 1 byte for red, 1 byte for green, 1 byte for blue, and 1 byte for the alpha component.

However, we can go larger. iOS hardware can render wide format. Now, wide format, to get those expressive colors, requires 2 bytes per channel, so we double the size of our image.

Cameras on the iPhone 7, 8, X, and some of the iPad Pros are great for capturing this high-fidelity content. You can also use it for super accurate colors for, like, sports logos and such.

But these are only really useful on the wide format displays, so we don't want to use this when we don't need to.

On the flip side, we can actually go smaller. Now, there's a luminance and alpha 8 format. This format stores a grayscale and an alpha value only.

This is typically used in shaders, so like Metal apps and stuff.

Not very common in our usage. We can actually get even smaller.

We can go down to what we call the alpha 8 format. Now, alpha 8 just has 1 channel, 1 byte per pixel. Very small. It's 75% smaller than sRGB.

Now, this is great for masks or text that's monochrome because we're using 75% less memory.

So if we look at the breakdown, we can go from 1 byte per pixel with alpha 8 all the way up to 8 bytes per pixel with wide format. There's a huge range. So what we really need to do is know how to pick the right format.
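To put the range side by side, the sketch below tabulates the four formats from the talk with the bytes-per-pixel figures quoted there. The `PixelFormat` enum and its case names are hypothetical, invented for this comparison; they are not an Apple API.

```swift
/// Hypothetical enum listing the formats discussed, with the per-pixel
/// sizes quoted in the talk.
enum PixelFormat: String, CaseIterable {
    case alpha8 = "Alpha 8"
    case luminanceAlpha8 = "Luminance and alpha 8"
    case sRGB = "sRGB"
    case wideFormat = "Wide format"

    var bytesPerPixel: Int {
        switch self {
        case .alpha8: return 1           // one channel
        case .luminanceAlpha8: return 2  // grayscale plus alpha
        case .sRGB: return 4             // red, green, blue, alpha at 1 byte each
        case .wideFormat: return 8       // four channels at 2 bytes each
        }
    }
}

/// Fraction of memory saved by `format` relative to `baseline`.
func savings(of format: PixelFormat, comparedTo baseline: PixelFormat) -> Double {
    return 1.0 - Double(format.bytesPerPixel) / Double(baseline.bytesPerPixel)
}

// Decoded size of a 2048 x 1536 image in each format.
let pixelCount = 2048 * 1536
for format in PixelFormat.allCases {
    print(format.rawValue, pixelCount * format.bytesPerPixel, "bytes")
}

let alpha8Savings = savings(of: .alpha8, comparedTo: .sRGB)
print(alpha8Savings) // 0.75
```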

So how do we pick the right format? The short answer is don't pick the format. Let the format pick you.

If you migrate away from using the UIGraphicsBeginImageContextWithOptions API, which has been in iOS since it began, and instead switch to UIGraphicsImageRenderer, you can save a lot of memory because UIGraphicsBeginImageContextWithOptions always uses a 4-byte-per-pixel format.

It's always sRGB. So you don't get the wide format if you want it, and you don't get the 1-byte-per-pixel A8 format if you need it. Instead, if you use the UIGraphicsImageRenderer API, which came in iOS 10, then as of iOS 12 it will automatically pick the best graphics format for you. Here's an example. Say I'm drawing a circle for a mask.

Now, using the old API, where the highlighted code is my drawing code, I'm getting a 4-byte-per-pixel format just to draw a black circle.

If I instead switch to the new API, I'm using the exact same drawing code.

Just using the new API, I'm now getting a 1-byte-per-pixel image. This means that it's 75% less memory use. That's a great savings and the same fidelity. As an additional bonus, if I want to use this mask over again, I can change the tint color on an image view, and that will just change it with a multiply, meaning that I don't have to allocate any more memory. So I can use this not just as a black circle, but as a blue circle, red circle, green circle with no additional memory cost. It's really cool.
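The slide code is not part of this transcript, so the following is only a sketch of what the renderer-based version might look like. `makeCircleMask` and `tintedMaskView` are hypothetical names; the UIKit calls themselves (`UIGraphicsImageRenderer`, `UIBezierPath(ovalIn:)`, `withRenderingMode(.alwaysTemplate)`, `tintColor`) are real APIs. Which backing format the renderer picks is decided by the system, as described above, and is not something this code controls.

```swift
#if canImport(UIKit)
import UIKit

/// Hypothetical helper: draws a filled black circle with the renderer API.
func makeCircleMask(diameter: CGFloat) -> UIImage {
    let bounds = CGRect(x: 0, y: 0, width: diameter, height: diameter)
    let renderer = UIGraphicsImageRenderer(size: bounds.size)
    return renderer.image { _ in
        // Same drawing code one would use inside the older context API.
        UIColor.black.setFill()
        UIBezierPath(ovalIn: bounds).fill()
    }
}

/// Hypothetical helper: reuses one mask in any color by tinting it, rather
/// than rendering a second image.
@MainActor
func tintedMaskView(mask: UIImage, tint: UIColor) -> UIImageView {
    let view = UIImageView(image: mask.withRenderingMode(.alwaysTemplate))
    view.tintColor = tint
    return view
}

let circleMask = makeCircleMask(diameter: 100)
print(circleMask.size)
#endif
```

Creating the mask once and tinting it per view is what keeps the blue, red, and green circles from costing extra image memory.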

One other thing that we typically do with images is downsample them.

So when we want to make like a thumbnail or something, we want to scale it down. What we don't want to do is use a UIImage for the downscaling. If we actually use UIImage to draw, it's a little bit less performant due to internal coordinate space transforms. And, as we saw earlier, it would decompress the entire image into memory.

Instead, there's this ImageIO framework.

ImageIO can actually downsample the image, and it will use a streaming API such that you only pay the dirty memory cost of the resulting image. So this will save you a memory spike. As an example, here's some code where I get a file on disk. This could also be a file I downloaded.

And I'm using the UIImage to draw into a smaller rect. This is still going to have that big spike.

Now, instead, if I switch to ImageIO, I still have to load the file from disk.

I set up some parameters because it's a lower-level API to say how big I want this image to be, and then I just ask it to create it with CGImageSourceCreateThumbnailAtIndex. Now, that CG image I can wrap in a UIImage, and I'm good to go. I've got a much smaller image, and it's about 50% faster than that previous code.
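Since the slide code is not reproduced here, this is a hedged sketch of the ImageIO approach rather than the presenter's exact code. The function name `downsample(imageAt:to:scale:)` and its parameters are assumptions; the ImageIO functions and option keys are real.

```swift
#if canImport(UIKit) && canImport(ImageIO)
import UIKit
import ImageIO

/// Hypothetical helper: decodes a thumbnail no larger than `pointSize`
/// (at the given scale) without first decoding the full-size image.
func downsample(imageAt url: URL, to pointSize: CGSize, scale: CGFloat) -> UIImage? {
    // Do not decode and cache the full-size image when opening the source.
    let sourceOptions = [kCGImageSourceShouldCache: false] as CFDictionary
    guard let source = CGImageSourceCreateWithURL(url as CFURL, sourceOptions) else {
        return nil
    }

    let maxDimensionInPixels = max(pointSize.width, pointSize.height) * scale
    let thumbnailOptions: [CFString: Any] = [
        kCGImageSourceCreateThumbnailFromImageAlways: true,
        // Decode now, at thumbnail size, instead of lazily at render time.
        kCGImageSourceShouldCacheImmediately: true,
        // Respect the orientation stored in the file's metadata.
        kCGImageSourceCreateThumbnailWithTransform: true,
        kCGImageSourceThumbnailMaxPixelSize: maxDimensionInPixels
    ]

    guard let thumbnail = CGImageSourceCreateThumbnailAtIndex(
        source, 0, thumbnailOptions as CFDictionary
    ) else {
        return nil
    }
    return UIImage(cgImage: thumbnail)
}
#endif
```

A call such as `downsample(imageAt: fileURL, to: CGSize(width: 100, height: 100), scale: 2)` would yield an image whose longest side is at most 200 pixels; `fileURL` stands in for wherever the app's image actually lives.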

Now, another thing we want to talk about is how to optimize when in the background.

Say I have an image in an app, full screen. It's beautiful. I'm loving it. But then, I need to go to my Home screen to take care of a notification or go to a different app.

That image is still in memory.

So as a good rule of thumb, we recommend unloading large resources you cannot see. There are 2 ways to do this. The first is the app life cycle. So if you background your app or foreground it, the app life cycle events are a great way to know.

Now, this applies to mostly the on-screen views because those don't conform to the UIViewController appearance life cycle.

UIViewController methods are great for, like, tab controllers or navigation controllers because you're going to have multiple view controllers, but only 1 of them is on screen at once.

So if you leverage the viewWillAppear and viewDidDisappear callbacks, you can keep your memory footprint smaller.

Now, as an example, if I register for the notifications for the application entering the background, I can unload my large assets -- in this case, images.

When the app comes back to the foreground, I get a notification for that.

If I reload my images there, I'm saving memory when in the background, and I'm keeping the same fidelity when the user comes back. It's completely transparent to them, but more memory is available to the system. Similarly, if I'm in a nav controller or a tab controller, my view controllers can unload their images when they disappear. And before they come back with the viewWillAppear method, I can reload them. So again, the user doesn't notice anything's different. Our apps are just using less memory, which is great. And now, I'd like to invite up Kris to kind of bring this all together in a nice demo.
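A sketch of the notification-based approach, under the assumption that the large asset can be reloaded on demand. `LargeImageHolder` and its `loadImage` closure are hypothetical; the two notification names are the real UIKit ones.

```swift
#if canImport(UIKit)
import UIKit

/// Hypothetical holder that drops its image while the app is in the
/// background and reloads it on the way back to the foreground.
final class LargeImageHolder {
    private(set) var image: UIImage?
    private let loadImage: () -> UIImage?
    private var observers: [NSObjectProtocol] = []

    init(loadImage: @escaping () -> UIImage?) {
        self.loadImage = loadImage
        self.image = loadImage()

        let center = NotificationCenter.default
        observers.append(center.addObserver(
            forName: UIApplication.didEnterBackgroundNotification,
            object: nil, queue: nil
        ) { [weak self] _ in
            // Nothing is visible in the background, so release the asset.
            self?.image = nil
        })
        observers.append(center.addObserver(
            forName: UIApplication.willEnterForegroundNotification,
            object: nil, queue: nil
        ) { [weak self] _ in
            // Reload before the user sees the screen again.
            guard let self = self else { return }
            self.image = self.loadImage()
        })
    }

    deinit {
        for observer in observers {
            NotificationCenter.default.removeObserver(observer)
        }
    }
}
#endif
```

The same unload and reload pair can be driven from `viewDidDisappear` and `viewWillAppear` for view controllers inside a navigation or tab controller.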

Kris? Okay. I'm going to switch to the demo machine.

There we go. So I've been working on this app. What it does is it starts with these really high-resolution images from our solar system that I got from NASA, and it lets you apply different filters to them. And here we can see a quick example, applying a filter to our Sun. I'm really pleased with how it's going so far, so I sent it off to James to get his opinion on it, and he sent me back an email with 2 attachments.

One was a Memgraph file, and the other one was this image.

Now, James is a pretty reserved and understated guy, so when he's got 2 red exclamation points and a scream emoji, I know he's pretty upset.

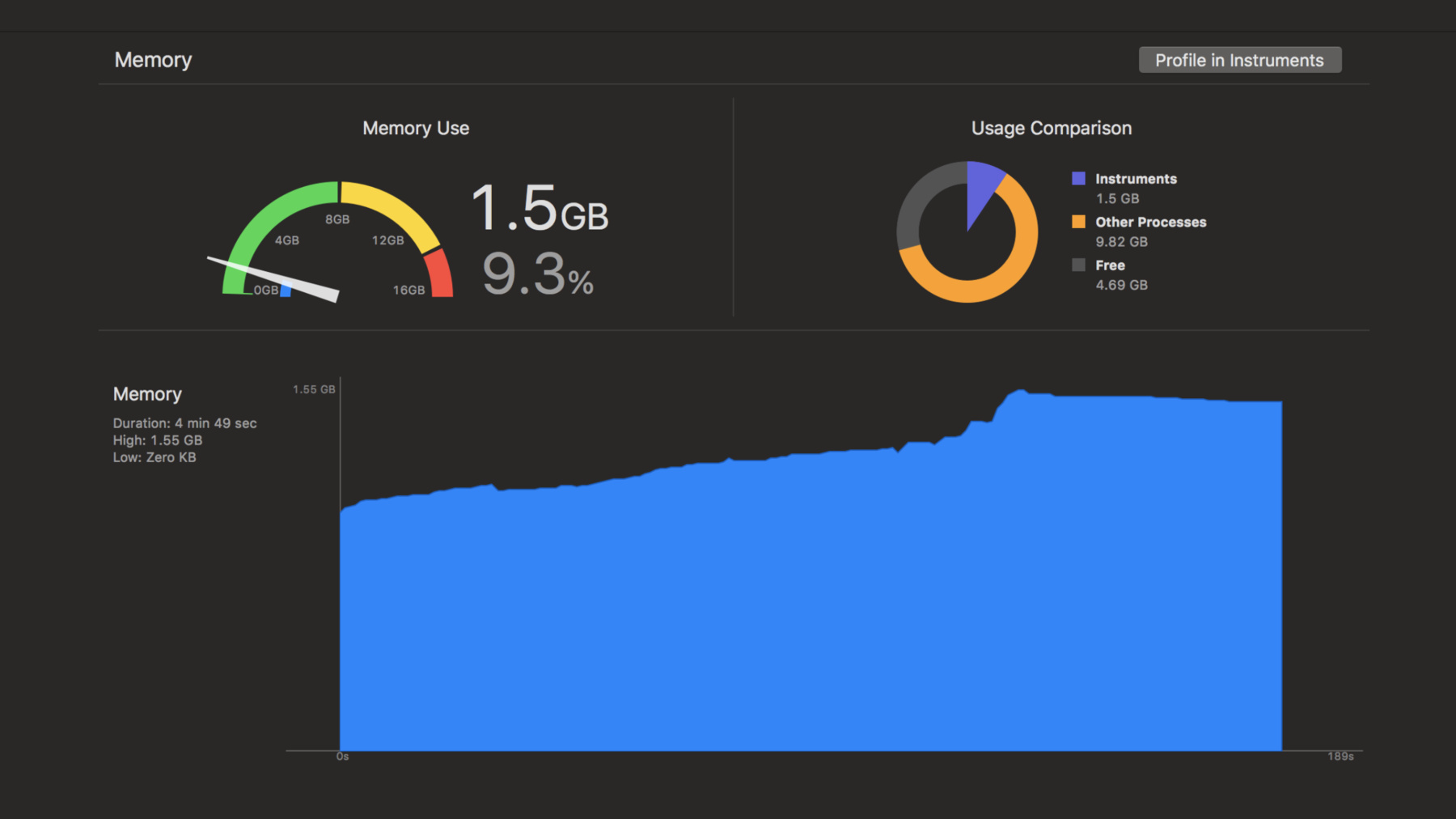

So I went to James and I said, "You know, I don't understand what the big deal is. I clearly have at least half a gigabyte before I'm even in the red, you know. I have all this available memory. Shouldn't I be using it?" And James, who's a much better developer than I am, pointed out a few things that are wrong with my logic. First of all, this gauge is measuring a device with 2 gigabytes of memory.

Not all our devices have that much memory. If this code was running on a device with only 1 gigabyte of memory, there's a good chance our app would already be terminated by the operating system.

Second, the operating system doesn't use just how much memory your app is using when deciding when to terminate your app, but also what else is going on in the operating system. So just because we're not to the red yet doesn't mean we're not in danger of being terminated.

And third, this represents a terrible experience for the user. In fact, if you look at the usage comparison chart, you can see other processes has zero kilobytes of memory.

That's because they've all been jettisoned by the operating system to make room for our app. You should all take a good look at me and give me the stink eye because now, when the user goes to launch your app, it has to load from scratch.

So James makes some pretty good points, so I think, in general, we want to get this needle as far to the left as possible instead of as far to the right.

So let's see what we can do. Let me go ahead and take a look at the Memgraph file. And I have a couple go-to tricks that I use when working with a Memgraph file or go-to strategies. And the first -- actually, let me bring this up a little bit -- is to look for leaks. So if I go down to the Filter toolbar and click on the leaks filter, that'll show me just any leaks that are in my Memgraph file.

Turns out this Memgraph file has no leaks. Well, that's both kind of good news and bad news. It's great that there are no leaks, but now I have to figure out what's actually going on here.

The other thing that Memgraph is really good for is showing me how many instances of an object are in memory and if there's more than I expect.

But if I look at this Memgraph, I can see if I actually just focus specifically on the objects from my code, there's only 5 in memory, and there's actually only 1 of each of these. If there were, you know, multiple root view controllers, or multiple noir filters, or multiple filters in memory, more than I expect, that's something else I could investigate.

Well, there's no more instances here than I expect, but maybe one of these is really big. It's not very likely, but I might as well check. So I'm going to go to the memory inspector. I'm going to look at these. Each of them, it lists the size for each of the objects. So I can see my app delegate is 32 bytes. The data view controller is 1500. As I go through each of these, none of these are clearly responsible for the, you know, 1 plus gigabytes of memory my app is using.

So that's it for my bag of tricks in dealing with Memgraphs in Xcode. Where do I go now? Well, I just watched this great WWDC session about using command-line tools with Memgraph files.

So let me see if I can find anything by trying that. And thinking back, the first thing James suggested was using vmmap with the summary flag.

So let me give that a try, and let me pass in my Memgraph file. And let's take a look at this output.

So now, what should I be looking for in here? Now, in general, I'm looking for really big numbers. I'm trying to figure out what's using all this memory, and the bigger numbers mean more memory use. Now, there's a number of columns here, and, you know, some of them are more important than others. First of all, virtual size, I mean, virtual means not real. I can almost practically ignore this column. It's memory that the app has requested but isn't necessarily using.

Dirty sounds like something I definitely don't want in my app. I'd much rather my app be clean than dirty, so that's probably something I want smaller. And then there's swapped, which, because this is iOS, is compressed. Remember that both Kyle and James pointed out that it's the dirty size plus the compressed size that the operating system uses to determine how much memory my app is really using. So those are the two columns I really want to concentrate on, so let's look for some big numbers there. I can see immediately CG image jumps out. It has a very big dirty size and a very big swapped size. That's a giant red flag, but let's keep looking.

I can see IOSurface has a pretty big dirty size but no swapped size. MALLOC_LARGE has a big dirty size but a smaller swapped size. And there's nothing else in here that's really that big. So I think, based on what I see here, I really want to concentrate on the CG image VM regions.

So I'm going to go ahead and copy that.

So what's the next step? Well, we want to know more about some virtual memory, so vmmap seems like the place to go again.

This time, instead of the summary flag, I'm just going to pass my Memgraph file.

But I really only care about the CG image memory. I don't care about all the other virtual memory regions that vmmap will tell me about. So I'm going to go ahead and use grep to just show me the lines that talk about CG image. So let's see what that looks like.

So now, I have three lines. I see there's two virtual memory regions, and I see their start address and their end address. And then, I can see these are the same columns as above. This is virtual, resident, dirty, and compressed. And this last line here is actually the summary line again.

So that's the same data that was above.

Looking at my two regions, I can see I have a really small region and a really big region. That big region is clearly what I want to know more about. So how can I find out more about that particular VM region? Well, I went looking through the documentation for vmmap, and I noticed it has this verbose flag, which, as the name implies, outputs a lot more information. And I wonder what that can tell me.

So let's go ahead and pass verbose and the Memgraph file.

And again, I only care about CG image regions, so I want to use grep to filter to just those.

Oh, now I see there's actually a lot more regions. What's going on here? Well, it turns out that vmmap, by default, if it finds contiguous regions, it collapses them together. And in fact, if you look starting on the second line here, the end address of this region is the same as the starting address of this one. And that pattern continues all the way down. So vmmap, by default, collapses those into a single region. But looking at the details here, I can see there are actually some differences. And in particular, some of these use a lot more dirty memory and some have a lot more compressed memory, so this gives me an idea of maybe something I want to focus on. But I'm actually going to use a different strategy here. I know that, not necessarily but as a general rule, the later a VM region appears in this list, the later in my app's life cycle it was created. And since this Memgraph was taken during this huge spike in memory use, chances are these later regions are more closely tied to whatever caused that spike.

So instead of trying to find the one with the biggest dirty and compressed size, I'm going to go ahead and just start at the end here. I'm going to grab the beginning address of that final region.

Now, where do I go from here? Well, one of the tools that James mentioned was heap, but that's about objects on the heap, and I'm dealing with a virtual memory region, so that doesn't help. Then, there's leaks, but I don't have a leak here. I already know from looking at the Memgraph there's no leaks, so that doesn't seem like the tool I want to use. But I went looking through the help information for leaks, and it turns out leaks can do lots of things, including telling me who has references to either an object on the heap or a virtual memory region. So let's go ahead and see what that tells us.

So I'm going to use leaks, and then I'm going to pass this traceTree flag.

And what that does is it gives me a tree view of everything that has a reference to the address I'm passing in. In this case, I'm passing in the starting address of my virtual memory region. And then, finally, we give it the Memgraph file.

So what does this look like? So what we see here is this tree of all these references. If we scroll up to the top, which is way up here, I can actually see here's my VM region, here's my CG image region, and then I can see there's a tree view here of all the things that have references, and what references them, and what references them, and so on and so forth. And in fact, if we go back to Xcode, and we actually filter on the same address, and I go ahead and look at this object, this tree is the exact same tree I see from leaks. And if I wanted to, I could go through and expand every single one of these nodes and look at the details for each of them, but that's going to take a while, and it's kind of tedious. The nice thing about the leaks output is not only can I kind of quickly scan through it, if I want to, I can search or filter it, or I can put it into a bug report or an email, which I can't really do with the graphical view that's in Xcode.

So what am I looking for here in this output? Well, ideally, I would find a class that I'm responsible for, a class from my application. I happen to have looked through this already, and I know there's none of my classes in here, so what's the next best thing? Well, a class that I know I'm creating, like a framework class, that's either being created on my behalf or that I'm directly creating. So I know that my app has UIViews. It has UIImages. And I'm using these Core Image classes to do the filtering. So let's go ahead and look through here, and I'm using a very sophisticated debugging tool called my eyeballs.

And we go ahead and we look for -- let me see if I can find what I want. It's a very big terminal output. Makes it a little more confusing.

Well, so, for example, here's a font reference, and I know my application uses fonts, but chances are the fonts aren't responsible for a lot of my memory use, so that's not going to help. If we go down further, I can actually see there's a number of these CI classes, and those are the classes Core Image is creating to do the filtering work in my application. So that may be something I want to investigate further as well. I happen to have already done that and haven't found anything useful. So I can't really get anywhere further looking at the leaks output, which is unfortunate. So what should I go to next? Fortunately, James had allocation backtrace recording turned on when he captured this Memgraph, which means I can use the other tool he talked about to look at the creation backtrace of my object. So I'm going to use malloc_history.

And this time, I pass it the Memgraph file first. And then, I'm going to pass it this fullStacks flag, which I found in the help documentation.

And what that does is it prints out each frame on its own line, makes it a lot more human readable. And then, I'm going to pass it the starting memory address of my VM region.

Let's see what this looks like.

Well, this actually is not that big of a backtrace, and I can see my code appears here on several lines. Lines 6 through 9 actually come straight from my application code, and I can see here on line 6 that my NoirFilter apply function is what is responsible for creating this particular VM region. So that's a pretty good smoking gun as to where I want to look in my app for who's creating all this memory. And in fact, if we go back to the Memgraph file, I can actually see that's the same backtrace that appears in Xcode here. You can actually see right here is also the NoirFilter apply method. We don't get the nice highlighting you normally see in the backtrace view here because we're not debugging a live process. We're loading a Memgraph file. But you can see it's the exact same output as we get from malloc_history. And in fact, just to confirm things even further, if I go ahead and look at my full list of CG image VM regions and grab the second one from the bottom, the next one up, let's look at the backtrace for that one.

And it turns out it's the same backtrace. So the same code path is responsible for that region as well. And in fact, looking at several of those regions, it actually uses the same backtrace. So now, I have a really good idea of what in my application is responsible for creating these VM regions that are using up a whole bunch of the memory in my application.

So what can we do about it? Well, let's go back to Xcode, and I can go ahead and close the Memgraph file.

And the first thing I want to do is let's take a look at the code here.

If I look at my filter, I can see right here is the apply function, and I can actually see right away something jumps out at me, which is I'm using UIGraphicsBeginImageContextWithOptions and UIGraphicsEndImageContext, which I remember Kyle said you shouldn't be using. There's a better API to use in those circumstances. So that's something I definitely want to come back to, but the first thing I need is some kind of baseline. I need to get an idea of how much memory my app is using so I can make sure my changes are making a difference.

So I'm going to go ahead and run the application, and I'm going to go to the debug navigator and look at the memory report. So now, I can see the memory my app is using as I run it.

Now, I really like this image of Saturn's north pole. It's this weird hexagon shape, which is both kind of cool and a little freaky. So let's take a look at that, and let's apply the filter and see what we get.

So 1 gig, 3 gigs, 4 gigs, 6 gigs, 7 gigs. This is bad. And this actually brings up a good point, which is this would not fly on a device at all. So when you're running in the simulator, you have to remember that it's useful for debugging and testing changes, but you need to validate all that stuff on devices as well. But the other thing that's nice is the simulator is never going to run out of memory. So if I have a case where my app is getting shut down on a device, maybe try it in the simulator. I can wait for a really big allocation, not get shut down, and then investigate it from there.

And one thing I would like to point out is actually we do show you the high-water mark over here. And in this case, I'm up to 7.7 gigabytes.

It's terrible.

So let's see what we can do about that. I'm going to go back to my apply function. And now, I do want to come back to this UIGraphicsBeginImageContextWithOptions thing, but thinking back to what Kyle said, when you're dealing with images, what's the most important thing in terms of memory use? It's the image size, so let's take a look at what that looks like. I'm going to go ahead and apply the filter again. And then, once I'm stopped in the debugger, I want to go ahead and see the size of this image. And I'm actually just going to take a sip of water before I hit return here. Actually, I'm not going to have any water.

This is 15,000 by 13,000. Now, I checked in the documentation: on UIImage, that size is in points, not pixels. If this is a 2X device or a 3X device, you have to multiply that by a big number. You know, Kyle was upset because an image was taking 10 megabytes. Nobody tell him about this.

And in fact, just to confirm things, I want to try this. I'm going to take the 15,000 times 13,000, and the iPhone X is a 3X device, so it's 3 times the width times 3 times the height times 4 bytes per pixel.

That number looks kind of familiar.
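Worked out explicitly, the multiplication just described looks like the following sketch. The constant names are illustrative; the values are the ones read out above.

```swift
// Values from the debugger and the device: size in points, a 3x screen scale,
// and 4 bytes per pixel for the decoded image.
let widthInPoints = 15_000
let heightInPoints = 13_000
let screenScale = 3
let bytesPerPixel = 4

// Points are converted to pixels on each axis before multiplying.
let totalBytes = (widthInPoints * screenScale) * (heightInPoints * screenScale) * bytesPerPixel
let totalGigabytes = Double(totalBytes) / 1_000_000_000   // decimal gigabytes

print("\(totalBytes) bytes, about \(totalGigabytes) GB")
```

That comes to 7,020,000,000 bytes, roughly 7 GB for a single decoded image, which is in the same range as the high-water mark in the memory report.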

So I'm pretty sure I know exactly what's using up my 7-and-a-half gigabytes of memory, and it's not necessarily my beginImageContext thing. It's the size of this image. And there's no reason the image needs to be this big. What I want to do is scale it down so it's the same dimensions as my view. And that way, it'll take up far less memory.

So if I go back to the image loading code that's up here -- actually, before I do that, I want to go ahead and disable this breakpoint -- so let's take a look at what this does. Well, it's pretty straightforward. It's getting the URL from a bundle, it's loading some data from that URL and loading it into a UIImage, which then gets passed to the filter. So what I want to do is, before I send it to the filter, I want to scale down the image. However, I remember what Kyle said. I don't want to do the scaling on UIImage because it still ends up just loading that whole image into memory anyway, which is what I'm trying to avoid. So I'm going to go ahead and collapse this function. And I'm going to replace it with the code Kyle suggested. Okay, so let's take a look at what this code is doing.

So here again, we're getting the image from the bundle, but now, this time -- just a little lighter -- I'm calling CGImageSourceCreateWithURL to get a reference to the image and then passing that to CGImageSourceCreateThumbnailAtIndex. So now, I can scale the image to the size I want without having to load the whole thing into memory.
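The code on screen is not part of this transcript, so what follows is a minimal sketch of the ImageIO downsampling approach, not necessarily the exact code used in the demo. The function names `maxPixelDimension` and `downsampledImage` and their parameters are assumptions; the ImageIO calls and option keys are the standard ones. The UIKit part is wrapped in `#if canImport(UIKit)` so the helper still compiles on other platforms.

```swift
/// Longest edge of the thumbnail in pixels, for a target size given in points.
func maxPixelDimension(width: Double, height: Double, scale: Double) -> Double {
    return max(width, height) * scale
}

#if canImport(UIKit)
import ImageIO
import UIKit

/// Decodes an image from disk at roughly the size it will be displayed,
/// without first decoding the full-size bitmap.
func downsampledImage(at url: URL, to pointSize: CGSize, scale: CGFloat) -> UIImage? {
    // Ask ImageIO not to cache a decoded full-size image when creating the source.
    let sourceOptions: [CFString: Any] = [kCGImageSourceShouldCache: false]
    guard let source = CGImageSourceCreateWithURL(url as CFURL, sourceOptions as CFDictionary) else {
        return nil
    }

    let maxDimension = maxPixelDimension(width: Double(pointSize.width),
                                         height: Double(pointSize.height),
                                         scale: Double(scale))
    let thumbnailOptions: [CFString: Any] = [
        kCGImageSourceCreateThumbnailFromImageAlways: true,
        kCGImageSourceShouldCacheImmediately: true,      // decode now, at thumbnail size
        kCGImageSourceCreateThumbnailWithTransform: true, // respect EXIF orientation
        kCGImageSourceThumbnailMaxPixelSize: maxDimension
    ]
    guard let thumbnail = CGImageSourceCreateThumbnailAtIndex(source, 0, thumbnailOptions as CFDictionary) else {
        return nil
    }
    return UIImage(cgImage: thumbnail)
}
#endif
```

A caller would typically pass the bounds size of the destination view and the screen scale, so the decoded bitmap matches what is actually shown.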

Let's give this a shot and see if it makes a difference. I'm going to go ahead and rebuild and wait for the application to launch.

And then, once it's there -- oh, there's a warning. I have an extra this.

Let's see.

Okay, building.

Building, building, building.

Okay, launching. Good. All right. Now, let's go ahead and take a look at the memory report.

Let's go back to Saturn's north pole, which is something I've always wanted to say.

And let's apply our filter and see what it goes to now. So now, we're at 75, 93 megabytes. Our high-water mark in this case is 93 megabytes. Significant improvement.

Much better than the almost guaranteed to get shut down 7-and-a-half gigabytes.

But now, I want to go back, so let's go ahead and stop. I still want to go back to my filter method and change this UIGraphicsBeginImageContextWithOptions code and do what Kyle suggested here. So I'm going to go ahead and delete this code and add in my new filter.

So now, in this case, I'm going ahead and creating a UIGraphicsImageRenderer. And I'm using my CIFilter within the renderer to apply the filter. Let's go ahead and run this -- hopefully, it'll build -- and see if this happens to make any difference in terms of my memory usage.
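The replacement code is not shown in the transcript, so here is a rough sketch of what applying a Core Image filter inside a UIGraphicsImageRenderer could look like. The function name `applyNoir` and the choice of the built-in `CIPhotoEffectNoir` filter are assumptions for illustration; the demo's NoirFilter may be implemented differently.

```swift
#if canImport(UIKit)
import CoreImage
import UIKit

/// Applies a noir-style filter and draws the result with UIGraphicsImageRenderer,
/// letting the renderer choose the backing format.
func applyNoir(to image: UIImage) -> UIImage? {
    // CIPhotoEffectNoir is a built-in Core Image filter, used here as a stand-in.
    guard let input = CIImage(image: image),
          let filter = CIFilter(name: "CIPhotoEffectNoir") else {
        return nil
    }
    filter.setValue(input, forKey: kCIInputImageKey)
    guard let output = filter.outputImage else { return nil }

    let renderer = UIGraphicsImageRenderer(size: image.size)
    return renderer.image { _ in
        // Drawing the CIImage-backed UIImage renders the filtered result
        // into the renderer's context.
        UIImage(ciImage: output).draw(in: CGRect(origin: .zero, size: image.size))
    }
}
#endif
```

Compared with the older begin/end context pair, there is no context to remember to close, and the renderer decides the pixel format.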

So let's go back to the debug navigator and to the memory report.

And once again, we get to go back to Saturn, and let's go ahead and apply our filter.

Now, let's see what our high-water mark ends up at this time. Ninety-eight. So that's essentially the same, but if you think about it, that's what I expect. My image is still going to be using, in this circumstance, 4 bytes per pixel, so I'm not actually getting any memory savings by using this new method. However, if there was an opportunity for memory savings, for example, if the operating system could determine that it could use fewer bytes per pixel, or if it determined that it needed to use more, it would do the right thing, and I don't need to worry about it. So even though I don't see a big improvement, I know my code is still better for having made these changes.

So there's still more I could do here, right. I want to make sure we unload the image when the app goes into the background, and I want to make sure we're not showing any images in views that aren't on screen. There's a lot more I can do here, but I'm really pleased with these results, and I want to send them back to James. So I'm going to go ahead and grab a screenshot and add a little note to it for James just to show him how pleased I am with all of this. And I think we're going to go ahead and send him the starry-eyed emoji.

And hopefully James will be happy with these results. So now, I'm going to hand it back to Kyle, who's going to wrap things up for us. Thank you.

Thanks, Kris.

That was awesome. With just a little bit of work, we were able to reduce our memory use by orders of magnitude.

So in summary, memory is finite and shared.

The more we use, the less the system has for others to use. We really need to be good citizens, and be mindful of our memory use, and only use what we need.

When we're debugging, that memory report in Xcode is crucial. We can just keep it open while our app is running, because the more we monitor it, the more likely we are to notice regressions as we're debugging.

We want to make sure that iOS picks our image formats for us. We can save 75% of the memory, going from sRGB to alpha 8, just by using the new UIGraphicsImageRenderer APIs. It's really great for masks and text.
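The 75% figure follows from the bytes per pixel of each format: 4 for sRGB and 1 for alpha 8. A small sketch of that arithmetic, using a hypothetical 1,000 by 1,000 pixel mask (the helper name and dimensions are illustrative):

```swift
/// Bytes needed for a decoded bitmap with the given pixel dimensions.
func bitmapBytes(width: Int, height: Int, bytesPerPixel: Int) -> Int {
    return width * height * bytesPerPixel
}

// Hypothetical 1,000 x 1,000 pixel mask image.
let sRGBBytes = bitmapBytes(width: 1_000, height: 1_000, bytesPerPixel: 4)
let alpha8Bytes = bitmapBytes(width: 1_000, height: 1_000, bytesPerPixel: 1)

// Fraction of memory saved by the single-channel format.
let savedFraction = 1.0 - Double(alpha8Bytes) / Double(sRGBBytes)
print("Saved \(Int(savedFraction * 100))% by using alpha 8")
```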

Also, we want to use ImageIO when we downsample our images.

It prevents a memory spike, and it's faster than trying to draw a UIImage into a smaller context. Finally, we want to unload large images and resources that are not on the screen. There's no sense in using that memory because the user can't see them.
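One possible way to unload images that are off screen is to tie them to the view controller's appearance callbacks and to the app's background notifications. This is a sketch under assumptions: `PosterViewController` and its `loadImage` closure are hypothetical names, and a real app would plug in its own loading code.

```swift
#if canImport(UIKit)
import UIKit

// Hypothetical view controller; class and property names are illustrative.
final class PosterViewController: UIViewController {
    let imageView = UIImageView()
    /// Supplies the image on demand so it can be reloaded after being dropped.
    var loadImage: () -> UIImage? = { nil }
    private var observers: [NSObjectProtocol] = []

    override func viewDidLoad() {
        super.viewDidLoad()
        view.addSubview(imageView)
        let center = NotificationCenter.default
        // Drop the image when the app moves to the background.
        observers.append(center.addObserver(forName: UIApplication.didEnterBackgroundNotification,
                                            object: nil, queue: nil) { [weak self] _ in
            self?.imageView.image = nil
        })
        // Reload it when the app is about to return to the foreground.
        observers.append(center.addObserver(forName: UIApplication.willEnterForegroundNotification,
                                            object: nil, queue: nil) { [weak self] _ in
            guard let self = self else { return }
            self.imageView.image = self.loadImage()
        })
    }

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        imageView.image = loadImage()   // load only when about to be visible
    }

    override func viewDidDisappear(_ animated: Bool) {
        super.viewDidDisappear(animated)
        imageView.image = nil           // release while off screen
    }

    deinit {
        observers.forEach { NotificationCenter.default.removeObserver($0) }
    }
}
#endif
```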

And even after all of that, we're still not done.

As we just saw, using Memgraphs can help us further understand what's going on and reduce our memory footprint. That, combined with malloc_history, gives us great insight into where our memory's going and what it's being used by.

So what I'd recommend is everyone go out, turn on malloc stack logging, profile your app, and start digging in.

So for more information, you can go to our slide presentation. And also, we'll be down in the technology labs shortly after this for a little bit if you have additional questions for us.

Thanks, and have a great remainder of WWDC.

[ Applause ]
