
What's New in ResearchKit

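ResearchKit continues to make it easier for developers to build research and care apps. Learn how the latest ResearchKit updates expand the limits of the data researchers can collect. Explore features such as enhanced onboarding, expanded options for conducting surveys, and new active tasks. Find out how Apple is partnering with research organizations to put this framework to use, so that developers can create innovative apps that empower care teams and research organizations.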


WWDC20


Hello and welcome to WWDC. Hello everybody and welcome to What's New in ResearchKit. My name is Pariece McKinney and I'm a software engineer on the health team. Later in the talk, we'll also be joined by my colleague and fellow software engineer Joey LaBarck. Thank you for taking the time to join. We're extremely excited to show you all the new updates ResearchKit has to offer. There's quite a bit to cover, so let's jump right in. To make things easier to follow this year, we created a ResearchKit sticker pack where each sticker corresponds to a particular topic of this talk. At the end of each topic we'll collect the sticker for that subject and slap it on the back of our laptop, which is pretty empty at the moment.

Now that everyone knows how to follow along, let's get started with our first topic, community updates. Each year we're excited about the new apps that take advantage of our frameworks to advance health and learnings in various health areas. To name a few, the Spirit Trial app, built by Thread, was created in support of a clinical trial on advanced pancreatic cancer. Also, CareEvolution and the NIH launched the All of Us app to speed up health research and breakthroughs by building a community of a million or more people across the U.S. with the aim to advance personalized medicine. We've also seen teams utilize our frameworks to build high quality apps very quickly in response to COVID-19. The Stanford First Responder app aims to help first responders navigate the challenges of COVID-19, and the University of Nebraska Medical Center's One Check app aims to provide real-time situational awareness of COVID-19 to investigators. Last year, Apple also announced and released the Research app, which heavily utilizes ResearchKit while paving the way for conducting large scale studies all through your iPhone. Last year, we also announced that we would release a newly redesigned website, and we're proud to share that the website launched in late 2019 at researchandcare.org. On our overview page you can read about the frameworks and their capabilities and features before diving in to create your own app. If you navigate to the ResearchKit page you can find even more information about the modules it provides, as well as case studies that showcase amazing examples of studies and programs built in the community, like the one you see here. We also announced our new investigator support program, through which researchers can submit proposals for watches to support their studies. You can now read about that on our website and learn how to reach out to us if you're interested in the program.
And lastly we welcome all of you to reach out to us through our website so that we can hear about all the amazing work you all are doing. Now that we've collected our community update sticker let's move on to the next topic which is onboarding updates.

For the vast majority of study based apps, the onboarding views are usually the first thing your participants will see and interact with. Knowing this, it's extremely important to convey exactly what the study is and what the participants should expect if they decide to join. As you can see here, we've moved toward leaning on the instruction step's capability to support custom text and images so that you can have complete control over the content you wish to display. Let's take a look at the code to create this step.

After importing ResearchKit, the first thing we'll do is initialize the instruction step and pass in a unique identifier. After setting the title and detail text, the last thing we have to do is set the image property to the "health_blocks" image seen in the previous slide. In the second step of our onboarding flow we use an instruction step again, but this time we also incorporate body items, which are an extremely useful feature to further educate your participants. In this example we use SF Symbols for our icons, and it's important to note that everyone watching this video has access to these icons and more. So if you're interested, feel free to use the SF Symbols app to find the icons that match your use case. Let's take a look at the code to create this step. Much like the previous code slide, we initialize our ORKInstructionStep and pass in our unique identifier. After setting our title property, we also set our image property again. But this time we pass in the "informed_consent" image seen in the slide before. Next we initialize our first body item, making sure that we pass in an image and that we set our bodyItemStyle to .image. The last thing we have to do is append our newly created bodyItem to the bodyItems array that sits on the instruction step.

Now let's take a look at an enhancement we've made to our web view step. Previously, presenting the user with an overview of the consent document and collecting the signature for it were handled by two different steps. Now we've added the signature capture functionality to the web view step so that you can present the overview of the consent document and ask for the participant's signature within the same view. Let's see how this step is created.

The first thing we have to do is initialize the ORKWebViewStep, passing in an identifier and the HTML content you wish to display. The last thing we have to do is set the showSignatureAfterContent attribute to true. This will ensure that the signature view is shown below the HTML content when the step is presented. This year we're also introducing the request permission step. Previously, if you wanted to request access to health data you would have to do so outside of the ResearchKit flow, which means you had to create the necessary views to ask for access and maintain those views yourself. Now all you have to do is initialize the request permission step and pass in the HealthKit types you want access to, and we'll do the heavy lifting of requesting the data for you. Now you can do more with less code while making the experience and flow of your app much better.

Let's take a look at the code to create the ORKRequestPermissionStep.

We start off by creating a set of HKSampleTypes, and these represent the types you want write access for. Then we create a set of HKObjectTypes, and these represent the types you want read access for. Next we'll initialize the ORKHealthKitPermissionType, making sure that we pass in the hkTypesToWrite and hkTypesToRead sets created above. The last thing we have to do is initialize the ORKRequestPermissionStep, passing in an array of permission types that currently only has our ORKHealthKitPermissionType within it.
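The steps just described might look roughly like the sketch below. It is a minimal illustration, not the code shown in the session: the specific HealthKit types, the identifier string, and the title are assumptions chosen for the example. Note also that the transcript refers to ORKRequestPermissionStep, whereas the class is spelled `ORKRequestPermissionsStep` in the ResearchKit sources this sketch assumes.

```swift
import HealthKit
import ResearchKit

// Types the study wants WRITE access for (illustrative choices, not from the talk).
let hkTypesToWrite: Set<HKSampleType> = [
    HKObjectType.quantityType(forIdentifier: .bodyMassIndex)!,
    HKObjectType.workoutType()
]

// Types the study wants READ access for (also illustrative).
let hkTypesToRead: Set<HKObjectType> = [
    HKObjectType.characteristicType(forIdentifier: .dateOfBirth)!,
    HKObjectType.quantityType(forIdentifier: .stepCount)!
]

// Bundle both sets into a single HealthKit permission type.
let healthKitPermissionType = ORKHealthKitPermissionType(
    sampleTypesToWrite: hkTypesToWrite,
    objectTypesToRead: hkTypesToRead
)

// The step takes an array of permission types; here it holds only the HealthKit one.
// The identifier string is a hypothetical placeholder.
let requestPermissionsStep = ORKRequestPermissionsStep(
    identifier: "RequestPermissionsStepIdentifier",
    permissionTypes: [healthKitPermissionType]
)
requestPermissionsStep.title = "Health Data Request"
```

The step can then be added to a task like any other step. The app still needs the HealthKit capability and the usual usage-description entries in its Info.plist for the system prompt to appear.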

That brings us to the close of the onboarding updates section, so let's collect our sticker. Now that we have our sticker, let's keep moving forward and talk about survey enhancements. Before we show any questions we always want to give the user some insight on what the point of the survey is. To do this, we use an instruction step again to provide some brief context. But this time we also provide an icon image that is left aligned to the screen, which is all handled by ResearchKit. In the second step of the onboarding survey, we use an ORKFormStep to collect basic information about the participant. But as you can see here, we made some UI improvements by using labels to display errors, as opposed to previously using alerts, which didn't always make for the best user experience. Let's fix those errors and move on to the next step in our onboarding survey.

In the third step we preview the new SESAnswerFormat, which can help present scale based questions much like the example here, where we ask the user to select the option that they feel best depicts the current state of their health. Let's look at the code needed to present this step.

The first thing we do is initialize the SESAnswerFormat and pass in the top rung text and bottom rung text as seen here. The last thing we have to do is simply initialize the ORKFormItem and pass in the SESAnswerFormat created above. In this step we use a continuous scale answer format and a scale answer format to get information on the participant's current stress level and pain level. In the past, if the user wasn't comfortable enough to answer the question or simply didn't know the answer, they would either leave the question blank or provide an answer that wasn't accurate because the question might be required. Now we've added the ability to use the ORKDontKnow button with select answer formats. This will allow the participants to select the "I Don't Know" button when they don't want to answer the questions presented to them. You can also pass in custom text, as seen here, to replace the default "I Don't Know" text. Let's take a look at the code for the second scale question to see how we added the don't know button and added custom text. First we initialize the ORKScaleAnswerFormat and pass in all the required values. Next we set the shouldShowDontKnowButton attribute to true. Then we set the customDontKnowButtonText to "Prefer not to answer", and this will override the default text "I Don't Know". The last thing we have to do is initialize the ORKFormItem and pass in the scale answer format created above. In the last question of the survey we use an ORKTextAnswerFormat to collect any additional information the participant thinks we should know. Previously we supported setting a maximum character count, but there was no visual to let the user know what the limit was or how close they were to approaching it. Now we've added a maximum character count label so that the user can have a much better idea of how much information they can provide and base their response off that. We've also added a "Clear" button so that the user can remove any text that they've typed in.
Let's check out the code to make this happen.

First we initialize an ORKTextAnswerFormat.

Then we begin to set some properties on the answer format, such as setting multipleLines to true, setting maximumLength to 280, and setting hideCharacterCountLabel and hideClearButton to false to make sure that both of these UI elements are shown when this step is presented. The last thing we have to do is initialize the ORKQuestionStep and pass in the textAnswerFormat created above.

At the finish of the onboarding survey we present the participant with the new ORKReviewViewController. One of the biggest challenges for any study is making sure that the data entered by the participant is accurate. As humans, making mistakes in our everyday lives is very common. So when participants fill out surveys, it's safe to assume that a small mistake might have happened. To help alleviate this problem, ResearchKit now provides a ReviewViewController that allows the participant to view a breakdown of all the questions they were asked and the responses they gave. If they want to update any of those answers they can simply click edit and update their answer. Let's look at the code to present the ReviewViewController. First we initialize the ReviewViewController, which requires us to pass in a task and a result object, which in this case we get from the taskViewController object passed back to us by the didFinishWithReason delegate method. But keep in mind that you can also initialize your task separately and pass in a result that may have been saved at an earlier time. Next we set ourselves as the delegate, and this requires us to implement the didUpdateResult and didSelectIncompleteCell methods. Then, after setting a reviewTitle and text, we're done creating our first ReviewViewController.

Now that we've finished reviewing our survey enhancements and collected our well-deserved sticker, let's move on to the next topic, which is Active Tasks. Let's first take a look at the improvements we've made to our hearing task. For the environment SPL meter step, we added a new animation that clearly indicates if you're within the set threshold for background noise, as seen here. We also made updates to our dB HL tone audiometry step by tweaking the button UI, adding better haptics, and changing the progress indicator to a label to make it clearer to the participant how far they've made it. We also added calibration data to support AirPods Pro. Let's collect our hearing sticker before moving on.

Now that we have our hearing sticker, let's chat about our next topic, which is 3D models. When running a study, giving your participants clear and informative visuals to explain a specific concept can be invaluable, especially if they can also interact with it. Using 3D models to do this is by far one of the best solutions to educate your participants while also increasing engagement. However, writing the code necessary to present 3D models and maintaining it can be cumbersome, to say the least. So whether you're trying to present something as simple as a human hand or something more complex such as the human muscular system, we've made the process of presenting 3D models much easier for you by creating two new classes. Those two classes are the ORK3DModelStep and the ORKUSDZModelManager. Using these two classes, you can now quickly present 3D models within your ResearchKit app by first, adding a USDZ file to your Xcode project. Second, creating a USDZModelManager instance and passing in the name of the desired USDZ file that was imported in your project. And third, initializing an ORK3DModelStep with the USDZModelManager instance and presenting it. And just like that, you can now present high quality 3D models that your users can interact with and touch without having to maintain any of the code yourself.

Before moving on, we wanted to point out that we're well aware that creating your own model can take a good amount of time. However, there are models accessible to you online that you can download for free to practice with. Our upcoming examples use a toy robot and a toy drummer 3D model that are both publicly available at the URL seen here. Let's get started. In the first example, we'll present the toy robot model. Selection has been enabled and the user is required to touch any part of the model before continuing.

In the second example we'll present the drummer model. Selection is disabled, but certain objects on the model have been pre-highlighted to draw the user's attention. The user also has full control to inspect the highlighted areas. Let's take a look at some code to see how simple it is to present a 3D model.

First, we initialize our USDZModelManager, passing in the name of the USDZ file that we wish to present. Next, we set up a few properties on the ModelManager, such as allowsSelection and highlightColor, and we set enableContinueAfterSelection to false to ensure that the user isn't blocked from moving forward. Next we pass an optional array of identifiers, where each identifier matches a specific object on the model we want to highlight before we present it. Then the last thing we do is initialize the ORK3DModelStep and pass in the USDZModelManager created above. Some of you might be wondering, why create a ModelManager class instead of just adding that functionality to the 3DModelStep itself? The reason is to make the process of creating a custom 3D model experience much easier for any developer interested in doing so. To understand it further, we need to learn about the parent class of the USDZModelManager, which is the ORK3DModelManager. Let's take a look. The ORK3DModelManager class is an abstract class that we've created, which shouldn't be used directly. The point of the 3DModelManager is to be subclassed, while requiring the subclass to implement specific features that we believe every 3D model experience should have. So after creating your subclass and making sure that these features are handled, you can then move forward to add all the extra functionality you want, as seen here with the USDZModelManager. We definitely believe that using the USDZModelManager could create endless possibilities for your ResearchKit app. However, we are open source and we always encourage members of the community to contribute to help push ResearchKit forward. With that being said, we're excited to announce that someone from the community has also taken the opportunity to create their own ModelManager class.

BioDigital, an interactive 3D software platform for anatomy visualization, has provided the ORKBioDigitalModelManager class so that their already powerful iOS SDK can now be integrated easily into any ResearchKit project. Some of their features include presenting custom models created via the admin portal and programmatically adding labels, colors, and annotations to any model loaded within your app. And since all of BioDigital's models are loaded via the web, you can dynamically add new models to your project without having to update any code. Let's see a couple of examples in action. In the first example, we use an instruction step to inform the user that we'll present an interactive human model. This can be used in many situations that most of us have experienced, such as visiting a physical therapy clinic or the orthopedic physician's office, where you're usually given a piece of paper to describe your pain or circle the area on some kind of picture. Now we can get rid of paper and make the experience much better. As you see here, we've loaded our model while also being presented with a card that contains useful information that can be updated via BioDigital's admin portal or their SDK.

Users can also interact with the model so that they can reach and view the exact areas of interest. After clicking on the muscle where pain has been experienced, we're also presented with a label that can give us even more information on that specific organ. This can be updated via BioDigital's admin portal or locally through their SDK. In the next example we imagine a scenario where a patient has visited a hospital for chest pain. After the patient receives a CT scan, the physician would like to give a visual to show the patient the exact arteries that are experiencing blockages. To do this we'll present an interactive 3D human heart model with dynamically added annotations on specific coronary arteries, all done directly through the BioDigitalModelManager class. As you can see here, we presented our heart model along with another card view for additional information. The user can then interact with the heart model and select the programmatically added annotations to find more information on the severity of each individual blockage. Let's take a look at the code to present the annotated heart model.

After importing ResearchKit and HumanKit, which is provided by BioDigital, we first initialize an ORKBioDigitalModelManager instance. Then we set a couple of properties that were inherited from the ORK3DModelManager class, such as the highlight color and the identifiers of objects to highlight.

Then we focus on some properties and instance methods added by BioDigital, such as the identifiers of objects to hide, the load method where we pass in the ID of the model we want to present, in this case the heart model, and the annotate method where we pass in an identifier of the object we want to annotate, in this case the right coronary artery. After setting the title and text, the last thing we have to do is initialize the ORK3DModelStep and pass in the bioDigitalModelManager created above. To find out more information about BioDigital and their SDK, visit their GitHub page, seen here. Now that we've collected our 3D model sticker, I'll hand things over to my teammate Joey to talk about building a custom active task. Take it away, Joey.

Thanks Pariece for those awesome updates coming to ResearchKit. Today, I'm going to be showing you how to create your very own custom active task.

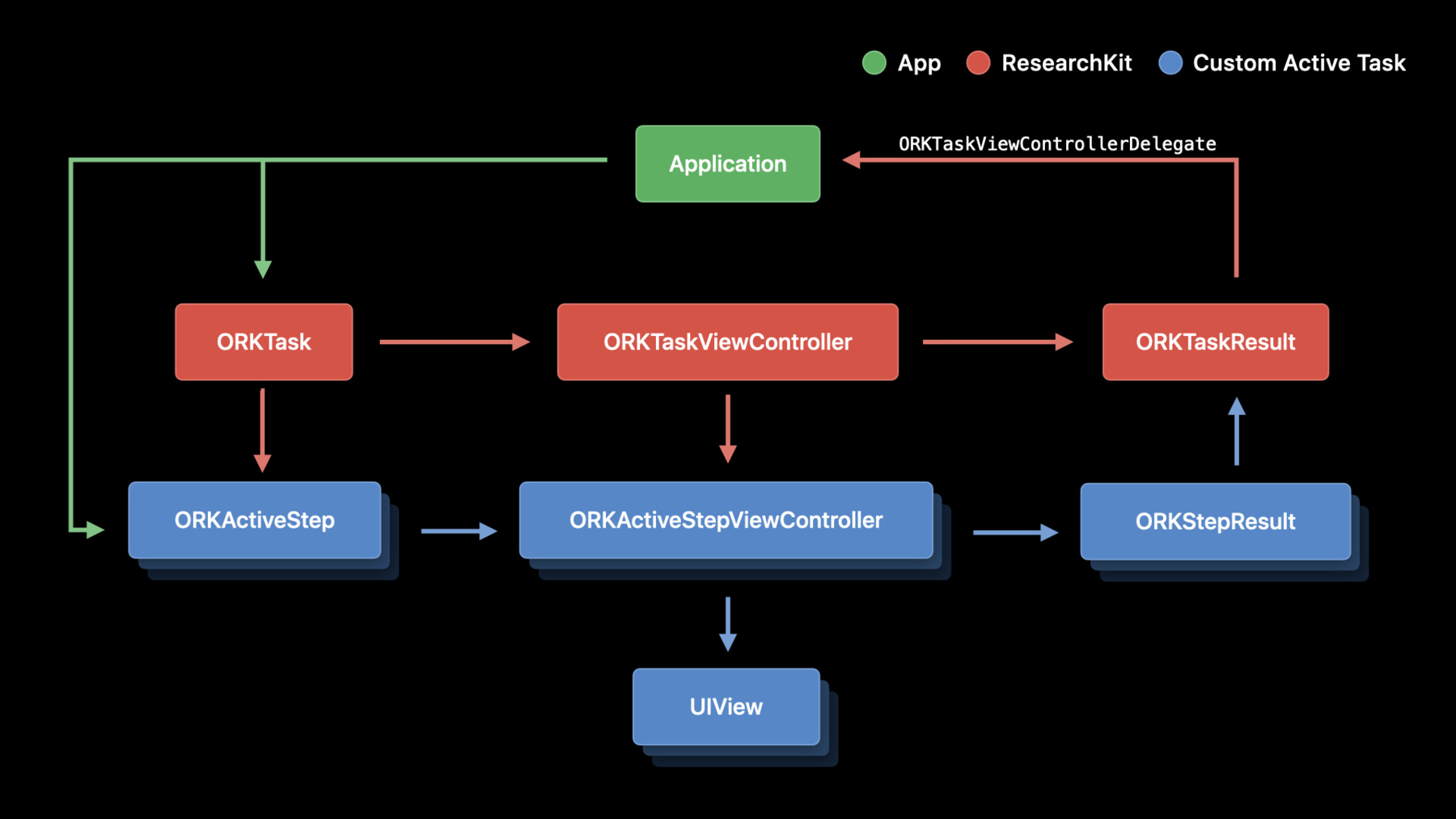

So we've collected a bunch of stickers already. To collect our front facing camera sticker, we will walk through the process of creating an active task in ResearchKit. Then we will open Xcode and implement a custom application to show off our new task. Our task is going to show the user a preview of what they're recording in real time. We'll let the user control when to start and stop recording and show a timer for how long they've been recording. Additionally, the user will have the opportunity to review and retry the recording in case they want another take. Before we get into it, I want to give you a quick refresher on the relevant classes and protocols included in ResearchKit to help you accomplish this. First, your application needs to create an ORKTask object. ORKTask is a protocol which your app can use to reference a collection of various step objects. Most applications can use the concrete ORKOrderedTask or ORKNavigableOrderedTask included in ResearchKit. The task object you create is then injected into an ORKTaskViewController object. This object is responsible for showing each step in your task as they are dequeued. Your application has no need to subclass ORKTaskViewController, so you can use it as is.

Additionally ORKTaskViewController is a subclass of UIViewController internally so you can present it in your app as you normally would any other view controller in UIKit. Finally, the ORKTaskResult is an object which contains the aggregate results for each step in your task.

The results that are collected from the task are then delegated back to your application upon completion of the task using the ORKTaskViewController delegate. This is the essential round trip from your application into ResearchKit and then back. Since I'm going to be showing you how to create your very own active task, we need to dive one level deeper with some coding examples that will set up our active task. So first, our application needs to create a collection of ORKStep and ORKActiveStep objects to make up the data model of our task. Since we will be creating a front facing camera active task, we will need to create a task which includes an active step subclass. First, import the ORKActiveStep header from ResearchKit and create a new subclass of ORKActiveStep. We'll name this class ORKFrontFacingCameraStep. I'm also going to add three additional properties here that I would like to configure: an NSTimeInterval to limit the maximum duration we want to record for, and two booleans for allowing the user to review the recording as well as allowing them to retry the recording. Next, we will declare the view controller type to display when the step is dequeued. In the case of an ORKActiveStep, this should be a subclass of ORKActiveStepViewController, which you can implement similar to any UIViewController in UIKit. The ORKTaskViewController presenting your task is responsible for instantiating the associated view controller of each step in your task.

Here's a quick look at the interface of ORKFrontFacingCameraStepViewController, which subclasses ORKActiveStepViewController. In our ORKFrontFacingCameraStep we will declare the type of view controller to associate with the step, so we override the stepViewControllerClass method of the superclass. In this case we will return the ORKFrontFacingCameraStepViewController class object. You can use a custom UIView to represent the content of your step. So here our ORKFrontFacingCameraStepContentView is a simple subclass of UIView. I've declared some view events here, which we can use to pass relevant events back to our view controller, as well as a block typedef. Inside of our interface we have a method to set the block parameter to invoke when events are passed from the contentView. Since we want to give the user a preview of the recording in real time, we will pass the AVCaptureSession to the content view, and internally the contentView will set up an AVCaptureVideoPreviewLayer. And finally, we've added a method to start our timer with a maximum duration, as well as a method to show certain recording options before submitting. We can now add this contentView into our view controller. We already have a reference to an AVCaptureSession, which is initialized in another method. We also have a property to reference our ORKFrontFacingCameraStepContentView. By the time we reach viewDidLoad we are ready to initialize our contentView. Next we will handle events coming from our contentView. We'll use weakSelf here to avoid a reference cycle.

We will add our contentView as a subview. And finally we will set the preview layer session using our AVCaptureSession from before. After our step finishes, ORKActiveStepViewController asks for the ORKStepResult. This is your view controller's call to add the appropriate results, and any data you collect is delegated back to the application when your step finishes. In our case ORKFrontFacingCameraStepResult is going to be a subclass of ORKFileResult. We've also added an integer property so we can keep track of how many times the user deleted and retried their recording. If we revisit the view controller, we override the superclass's result method to append our custom ORKFrontFacingCameraStepResult. First we create an instance of our ORKFrontFacingCameraStepResult. Then we set the relevant parameters such as the identifier, contentType, retryCount, as well as the file URL. Finally we append our new result into the current results collection and return. This effectively completes the implementation of our custom active task. Let's jump into Xcode and try it out.

So here I have a demo application that I've been working on, which includes ResearchKit as a submodule. I'm going to go ahead and create a method which allows us to construct and present our task. Inside of this method, we're going to instantiate the steps that are part of our task. So I'm going to go ahead and create an instruction step which welcomes the user to the task. Then I'm going to use the ORKFrontFacingCameraStep that we just created. We'll set the maximum recording limit to about fifteen seconds and we'll allow the user to retry and review their recording. Then we'll go ahead and add a completion step thanking the user for their time. So now that we have all of our steps, we'll go ahead and create an ORKTask object. Here we have an ORKOrderedTask and we'll include all the steps from before.

Then create a taskViewController object injecting our task as well.

Then we present this task view controller. In viewDidAppear, we can go ahead and present our front facing camera active task and conform to the ORKTaskViewControllerDelegate protocol. Then we can make ourselves the delegate for the task view controller and respond to the didFinishWith reason method of ORKTaskViewControllerDelegate. Inside of this method we're going to check to see if the currently presented view controller is the task view controller and then dismiss it. And we'll go ahead and try to extract the ORKFrontFacingCameraStepResult. Once we have that result object we can go ahead and print the recording file URL as well as the retry count. So let's go ahead and run this on the device. Okay, so here we have the instruction step that we created, and we're welcomed to WWDC. We'll go ahead and click get started. So here we have our front facing camera step, with a preview of our session which we can see in real time, and I'll go ahead and create a recording. Hello and welcome to WWDC. So let's go ahead and review this video.

Hello and welcome to WWDC.

Ok, let's go ahead and just retry that because I didn't like that. Hello and welcome to WWDC. I think that one was good. So go ahead and submit. Here's our completion step thanking the user and we'll go ahead and exit the task gracefully.

If we go back to Xcode we should be able to see in the console that we have printed the recording file URL as well as the retry count. This concludes our demonstration for today and we have implemented our own custom active step in ResearchKit and constructed the task in our application. We then extracted the results object to verify our results. We hope you enjoyed. Thank you. Pariece, back to you.
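For readers who prefer Swift, the round trip from the demo (build the steps, present the task, receive the result) might be sketched as follows. This is an approximation under stated assumptions rather than the code shown on screen: `DemoViewController`, `presentFrontFacingCameraTask`, the `hasPresentedTask` flag, and every identifier string are hypothetical names, and the sketch assumes the `ORKFrontFacingCameraStep` and `ORKFrontFacingCameraStepResult` classes that ship with ResearchKit expose the properties named in the talk (`maximumRecordingLimit`, `allowsReview`, `allowsRetry`, `retryCount`).

```swift
import UIKit
import ResearchKit

// Hypothetical host view controller for the demo task.
final class DemoViewController: UIViewController, ORKTaskViewControllerDelegate {

    // Prevents the task from being presented again when this view reappears.
    private var hasPresentedTask = false

    // Hypothetical identifier, reused later to look up the step's result.
    private let cameraStepIdentifier = "frontFacingCameraStep"

    override func viewDidAppear(_ animated: Bool) {
        super.viewDidAppear(animated)
        guard !hasPresentedTask else { return }
        hasPresentedTask = true
        presentFrontFacingCameraTask()
    }

    private func presentFrontFacingCameraTask() {
        // 1. Instruction step welcoming the user.
        let instructionStep = ORKInstructionStep(identifier: "instructionStep")
        instructionStep.title = "Welcome to WWDC"

        // 2. Camera step: about fifteen seconds, with review and retry allowed.
        let cameraStep = ORKFrontFacingCameraStep(identifier: cameraStepIdentifier)
        cameraStep.maximumRecordingLimit = 15
        cameraStep.allowsReview = true
        cameraStep.allowsRetry = true

        // 3. Completion step thanking the user.
        let completionStep = ORKCompletionStep(identifier: "completionStep")
        completionStep.title = "Thank you"

        // Wrap the steps in an ordered task and inject it into a task view controller.
        let task = ORKOrderedTask(identifier: "frontFacingCameraTask",
                                  steps: [instructionStep, cameraStep, completionStep])
        let taskViewController = ORKTaskViewController(task: task, taskRun: nil)
        taskViewController.delegate = self
        present(taskViewController, animated: true)
    }

    func taskViewController(_ taskViewController: ORKTaskViewController,
                            didFinishWith reason: ORKTaskViewControllerFinishReason,
                            error: Error?) {
        // Dismiss only if the task view controller is what we are presenting.
        if presentedViewController === taskViewController {
            dismiss(animated: true)
        }

        // Find the camera result inside the step result for our camera step.
        let stepResult = taskViewController.result.stepResult(forStepIdentifier: cameraStepIdentifier)
        let cameraResult = stepResult?.results?
            .compactMap { $0 as? ORKFrontFacingCameraStepResult }
            .first

        if let cameraResult = cameraResult {
            print("Recording file URL: \(cameraResult.fileURL?.absoluteString ?? "none")")
            print("Retry count: \(cameraResult.retryCount)")
        }
    }
}
```

Because the step records video and audio, the app would also need camera and microphone usage descriptions in its Info.plist before the recording can start.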

Thank you for that demo, Joey. We hope everyone viewing enjoyed it, and we're very excited to see what you can do with the new front facing camera step or any task that you decide to create yourself. Now that we've collected our front facing camera step sticker, that brings us to the close of all of our ResearchKit updates this year. But before moving on, let's go over all the stickers we collected throughout our talk. First we talked about community updates, where we mentioned a few apps that have leveraged our frameworks over the past year, our new website at researchandcare.org, and the new investigator support program. Then we moved on to onboarding updates. We spoke about the new additions such as body items, inline signature functionality, and the request permission step. Then we talked about survey enhancements, where we previewed the new error labels, the "I don't know" button, and the ReviewViewController, to name a few. Then we talked about hearing test UI updates, where we previewed UI enhancements to the environment SPL meter and tone audiometry step. Then we moved on to 3D models, where we went over and previewed the 3D model step, the USDZ model manager, and the BioDigital model manager classes to add 3D models to your app. And last but not least, Joey walked you through the process of building your own active task while also previewing the functionality of the new front facing camera step. We have a pretty solid collection of stickers here, but it wouldn't be complete without the final ResearchKit sticker. For more information on the topics discussed today, feel free to visit the resources shared here. As always, we want to remind everyone watching that we are open source, and we welcome anyone using or interested in ResearchKit to visit our GitHub repo shown here and contribute to help the framework grow. Thank you again for taking the time to watch our talk. We're looking forward to seeing the powerful apps and experiences you will create with ResearchKit. Thank you.


3:24 - instructionStep

    let instructionStep = ORKInstructionStep(identifier: "InstructionStepIdentifier")
    instructionStep.title = "Welcome!"
    instructionStep.detailText = "Thank you for joining our study. Tap Next to learn more before signing up."
    instructionStep.image = UIImage(named: "health_blocks")!

4:08 - informedConsentInstructionStep

    let informedConsentInstructionStep = ORKInstructionStep(identifier: "ConsentStepIdentifier")
    informedConsentInstructionStep.title = "Before You Join"
    informedConsentInstructionStep.image = UIImage(named: "informed_consent")!

    let heartBodyItem = ORKBodyItem(text: exampleText,
                                    detailText: nil,
                                    image: UIImage(systemName: "heart.fill"),
                                    learnMoreItem: nil,
                                    bodyItemStyle: .image)
    informedConsentInstructionStep.bodyItems = [heartBodyItem]

5:04 - webViewStep

    let webViewStep = ORKWebViewStep(identifier: String(describing: Identifier.webViewStep), html: exampleHtml)
    webViewStep.showSignatureAfterContent = true

7:43 - sesAnswerFormat

    let sesAnswerFormat = ORKSESAnswerFormat(topRungText: "Optimal Health", bottomRungText: "Poor Health")
    let sesFormItem = ORKFormItem(identifier: "sesIdentifier", text: exampleText, answerFormat: sesAnswerFormat)

8:47 - scaleAnswerFormItem

    // A defaultValue outside the 1...10 range (11 here) leaves the scale without a preselected answer.
    let scaleAnswerFormat = ORKScaleAnswerFormat(maximumValue: 10, minimumValue: 1, defaultValue: 11, step: 1)
    scaleAnswerFormat.shouldShowDontKnowButton = true
    scaleAnswerFormat.customDontKnowButtonText = "Prefer not to answer"

    let scaleAnswerFormItem = ORKFormItem(identifier: "ScaleAnswerFormItemIdentifier",
                                          text: "What is your current pain level?",
                                          answerFormat: scaleAnswerFormat)

9:47 - textAnswerQuestionStep

    let textAnswerFormat = ORKAnswerFormat.textAnswerFormat()
    textAnswerFormat.multipleLines = true
    textAnswerFormat.maximumLength = 280
    // Keep both UI elements visible when the step is presented.
    textAnswerFormat.hideCharacterCountLabel = false
    textAnswerFormat.hideClearButton = false

    let textAnswerQuestionStep = ORKQuestionStep(identifier: textAnswerIdentifier,
                                                 title: exampleTitle,
                                                 question: exampleQuestionText,
                                                 answer: textAnswerFormat)

11:00 - ORKReviewViewController

    let reviewVC = ORKReviewViewController(task: taskViewController.task,
                                           result: taskViewController.result,
                                           delegate: self)
    reviewVC.reviewTitle = "Review your response"
    reviewVC.text = "Please take a moment to review your responses below. If you need to change any answers just tap the edit button to update your response."

14:30 - ORK3DModelStep

    let usdzModelManager = ORKUSDZModelManager(usdzFileName: "toy_drummer")
    usdzModelManager.allowsSelection = false
    usdzModelManager.highlightColor = .yellow
    usdzModelManager.enableContinueAfterSelection = false
    usdzModelManager.identifiersOfObjectsToHighlight = arrayOfIdentifiers

    let threeDimensionalModelStep = ORK3DModelStep(identifier: drummerModelIdentifier,
                                                   modelManager: usdzModelManager)
